
*My post explains Places365.

`Places365()` can use the Places365 dataset as shown below:

*Memos:

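- The 1st argument is `root` (Required-Type: `str` or `pathlib.Path`). *An absolute or relative path is possible.

- The 2nd argument is `split` (Optional-Default: `"train-standard"`-Type: `str`). *`"train-standard"` (1,803,460 images), `"train-challenge"` (8,026,628 images) or `"val"` (36,500 images) can be set. `"test"` (328,500 images) isn't supported, so I requested the feature on GitHub.

- The 3rd argument is `small` (Optional-Default: `False`-Type: `bool`). *If it's `True`, the images resized to 256x256 pixels are used instead of the high-resolution ones.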

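- The 4th argument is `download` (Optional-Default: `False`-Type: `bool`):

  *Memos:

  - If it's `True`, the dataset is downloaded from the internet and extracted to `root`.

  - If it's `True` and the dataset is already downloaded, it's only extracted.

  - If it's `True` and the dataset is already downloaded and extracted, an error occurs because the extracted folder exists. *Deleting the extracted folder avoids the error.

  - It should be `False` if the dataset is already downloaded and extracted, to avoid the error.

  - You can also download and extract the archives manually:

    - For `split="train-standard"` and `small=False`, download `filelist_places365-standard.tar` and `train_large_places365standard.tar`, then extract them to `data/` and `data/data_large_standard/` respectively.

    - For `split="train-standard"` and `small=True`, download `filelist_places365-standard.tar` and `train_256_places365standard.tar`, then extract them to `data/` and `data/data_256_standard/` respectively.

    - For `split="train-challenge"` and `small=False`, download `filelist_places365-challenge.tar` and `train_large_places365challenge.tar`, then extract them to `data/` and `data/data_large_challenge/` respectively.

    - For `split="train-challenge"` and `small=True`, download `filelist_places365-challenge.tar` and `train_256_places365challenge.tar`, then extract them to `data/` and `data/data_256_challenge/` respectively.

    - For `split="val"` and `small=False`, download `filelist_places365-standard.tar` and `val_large.tar`, then extract them to `data/` and `data/val_large/` respectively.

    - For `split="val"` and `small=True`, download `filelist_places365-standard.tar` and `val_256.tar`, then extract them to `data/` and `data/val_256/` respectively.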

- The 5th argument is `transform` (Optional-Default: `None`-Type: `callable`).

- The 6th argument is `target_transform` (Optional-Default: `None`-Type: `callable`).

- The 7th argument is `loader` (Optional-Default: `torchvision.datasets.folder.default_loader`-Type: `callable`).

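- In `"train-standard"`, the image indices map to class labels as follows: `airfield`(0) is 0~4999, `airplane_cabin`(1) is 5000~9999, `airport_terminal`(2) is 10000~14999, `alcove`(3) is 15000~19999, `alley`(4) is 20000~24999, `amphitheater`(5) is 25000~29999, `amusement_arcade`(6) is 30000~34999, `amusement_park`(7) is 35000~39999, `apartment_building/outdoor`(8) is 40000~44999, `aquarium`(9) is 45000~49999, etc.

- In `"train-challenge"`, the image indices map to class labels as follows: `airfield`(0) is 0~38566, `airplane_cabin`(1) is 38567~47890, `airport_terminal`(2) is 47891~74901, `alcove`(3) is 74902~98482, `alley`(4) is 98483~137662, `amphitheater`(5) is 137663~150034, `amusement_arcade`(6) is 150035~161051, `amusement_park`(7) is 161052~201051, `apartment_building/outdoor`(8) is 201052~227872, `aquarium`(9) is 227873~267872, etc.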
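The index ranges above can be turned into a small lookup. The sketch below uses only the ten train-challenge boundaries listed in the memo, so it is valid only up to index 267872. `label_for_index` is a hypothetical helper for illustration, not part of torchvision.

```python
from bisect import bisect_right

# First image index of classes 0..9 in "train-challenge", copied from the memo above.
CHALLENGE_STARTS = [0, 38567, 47891, 74902, 98483, 137663, 150035, 161052, 201052, 227873]
LAST_LISTED_INDEX = 267872  # last index listed for class 9


def label_for_index(index: int) -> int:
    """Return the class label for an image index within the listed ranges."""
    if not 0 <= index <= LAST_LISTED_INDEX:
        raise ValueError("index is outside the ranges listed in the memo")
    # bisect_right counts how many class starts are <= index.
    return bisect_right(CHALLENGE_STARTS, index) - 1


print(label_for_index(38566))   # 0 (last image of class 0)
print(label_for_index(38567))   # 1 (first image of class 1)
print(label_for_index(150035))  # 6
```

A binary search keeps the lookup cheap even if the boundary list were extended to all 365 classes.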

```python
from torchvision.datasets import Places365
from torchvision.datasets.folder import default_loader

# Minimal call: every optional argument keeps its default.
trainstd_large_data = Places365(
    root="data"
)

# Equivalent call with the defaults written out.
trainstd_large_data = Places365(
    root="data",
    split="train-standard",
    small=False,
    download=False,
    transform=None,
    target_transform=None,
    loader=default_loader
)
```
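Because `download=True` raises an error once the extracted image folder exists, one option is to derive the flag from the filesystem. This is a minimal sketch, assuming the extracted images live in a folder such as `data/data_large_standard/`. `needs_download` is a hypothetical helper, and it only checks the image folder, not the devkit files.

```python
from pathlib import Path


def needs_download(root: str, images_dir_name: str) -> bool:
    """Return True only when the extracted image folder is missing."""
    return not (Path(root) / images_dir_name).is_dir()


# Hypothetical usage with the constructor shown above:
# Places365(root="data", download=needs_download("data", "data_large_standard"))
print(needs_download("data", "data_large_standard"))
```

The folder name is passed in rather than hard-coded, since it differs per `split` and `small` combination.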

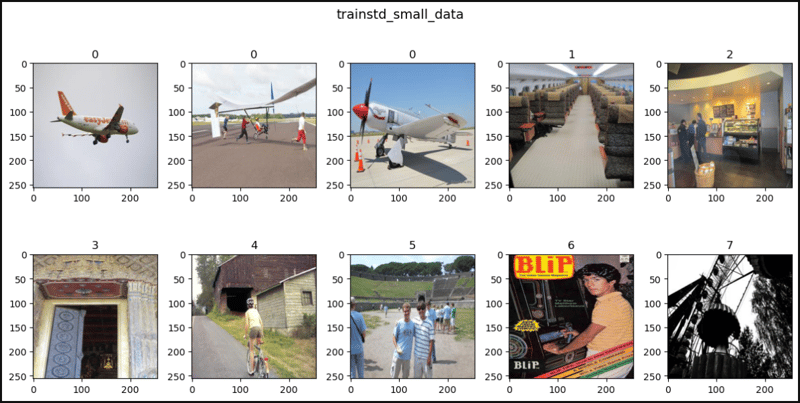

```python
trainstd_small_data = Places365(
    root="data",
    split="train-standard",
    small=True
)
```

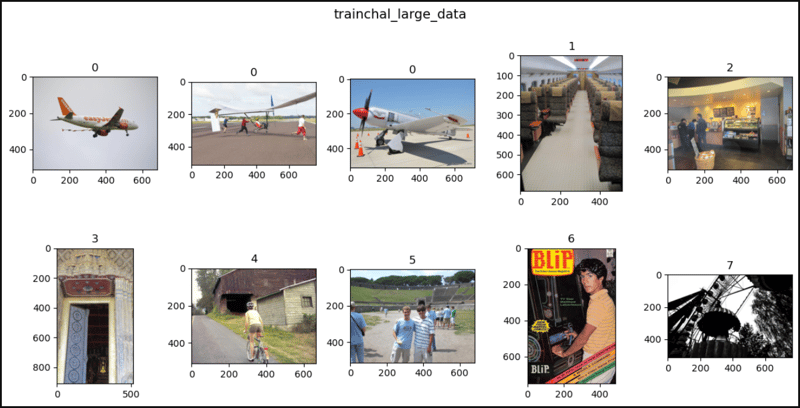

```python
trainchal_large_data = Places365(
    root="data",
    split="train-challenge",
    small=False
)
```

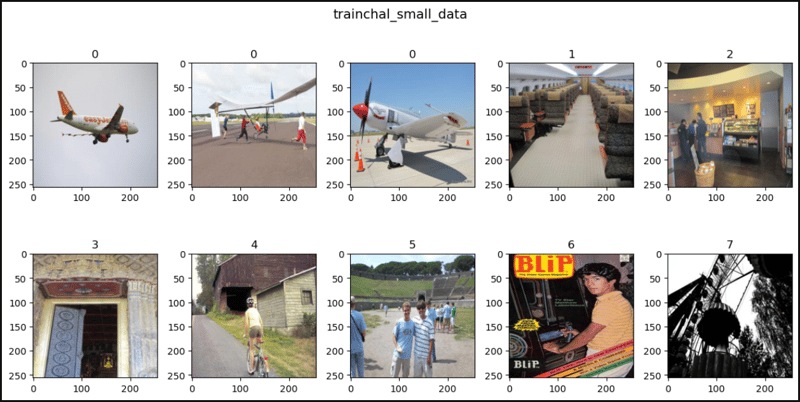

```python
trainchal_small_data = Places365(
    root="data",
    split="train-challenge",
    small=True
)
```

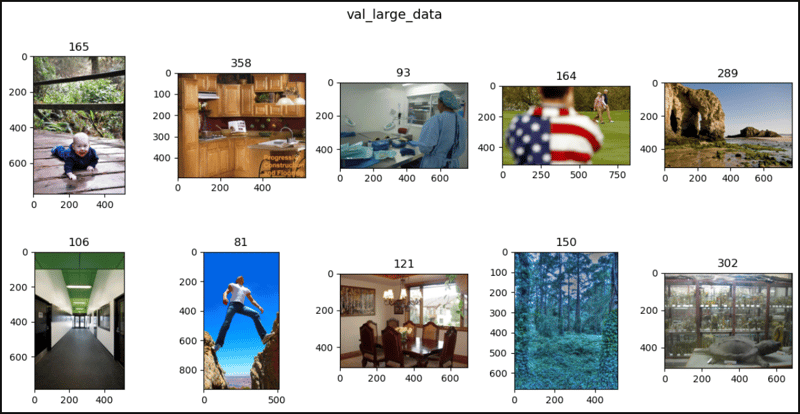

```python
val_large_data = Places365(
    root="data",
    split="val",
    small=False
)
```

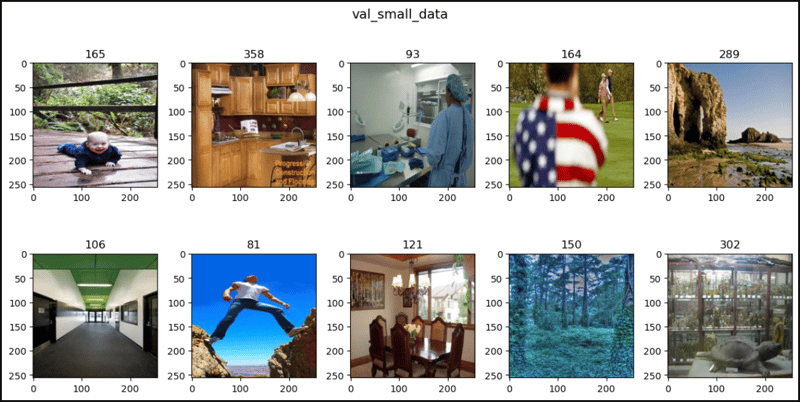

```python
val_small_data = Places365(
    root="data",
    split="val",
    small=True
)

# The large and small variants of a split contain the same number of images.
len(trainstd_large_data), len(trainstd_small_data)
# (1803460, 1803460)

len(trainchal_large_data), len(trainchal_small_data)
# (8026628, 8026628)

len(val_large_data), len(val_small_data)
# (36500, 36500)
```
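The six datasets above differ only in `split` and `small`, so the keyword arguments can be generated rather than typed out. This is a sketch using `itertools.product`. `places365_kwargs` is a hypothetical helper, and the commented line shows how each result might be passed to `Places365`.

```python
from itertools import product

SPLITS = ("train-standard", "train-challenge", "val")


def places365_kwargs(root: str = "data") -> list[dict]:
    """Build one keyword-argument dict per (split, small) combination."""
    return [
        {"root": root, "split": split, "small": small}
        for split, small in product(SPLITS, (False, True))
    ]


for kwargs in places365_kwargs():
    # dataset = Places365(**kwargs)  # requires the extracted data under root
    print(kwargs)
```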

```python
trainstd_large_data
# Dataset Places365
#     Number of datapoints: 1803460
#     Root location: data
#     Split: train-standard
#     Small: False

trainstd_large_data.root
# 'data'

trainstd_large_data.split
# 'train-standard'

trainstd_large_data.small
# False

trainstd_large_data.download_devkit
# <bound method Places365.download_devkit of Dataset Places365 ...>

trainstd_large_data.download_images
# <bound method Places365.download_images of Dataset Places365 ...>

print(trainstd_large_data.transform)
# None

print(trainstd_large_data.target_transform)
# None

trainstd_large_data.loader
# <function torchvision.datasets.folder.default_loader(...) -> Any>

len(trainstd_large_data.classes), trainstd_large_data.classes
# (365,
#  ['/a/airfield', '/a/airplane_cabin', '/a/airport_terminal',
#   '/a/alcove', '/a/alley', '/a/amphitheater', '/a/amusement_arcade',
#   '/a/amusement_park', '/a/apartment_building/outdoor',
#   '/a/aquarium', '/a/aqueduct', '/a/arcade', '/a/arch',
#   '/a/archaelogical_excavation', ..., '/y/youth_hostel', '/z/zen_garden'])
```
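The entries in `classes` carry a leading letter directory such as `/a/`. For plot titles or reports, a shorter name may be handier. This is a sketch in which `short_name` is a hypothetical helper and the sample strings are copied from the output above.

```python
def short_name(class_path: str) -> str:
    """Drop the leading '/<letter>/' prefix, e.g. '/a/airfield' -> 'airfield'."""
    # Split into ['', '<letter>', 'rest'] and keep the rest, sub-category included.
    return class_path.split("/", 2)[2]


sample = ["/a/airfield", "/a/apartment_building/outdoor", "/z/zen_garden"]
print([short_name(c) for c in sample])
# ['airfield', 'apartment_building/outdoor', 'zen_garden']
```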

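```python
# Each item is an (image, label) tuple. The train splits are grouped by class.
trainstd_large_data[0]
# (<PIL.Image.Image ...>, 0)

trainstd_large_data[1]
# (<PIL.Image.Image ...>, 0)

trainstd_large_data[2]
# (<PIL.Image.Image ...>, 0)

trainstd_large_data[5000]
# (<PIL.Image.Image ...>, 1)

trainstd_large_data[10000]
# (<PIL.Image.Image ...>, 2)

trainstd_small_data[0]
# (<PIL.Image.Image ...>, 0)

trainstd_small_data[1]
# (<PIL.Image.Image ...>, 0)

trainstd_small_data[2]
# (<PIL.Image.Image ...>, 0)

trainstd_small_data[5000]
# (<PIL.Image.Image ...>, 1)

trainstd_small_data[10000]
# (<PIL.Image.Image ...>, 2)

trainchal_large_data[0]
# (<PIL.Image.Image ...>, 0)

trainchal_large_data[1]
# (<PIL.Image.Image ...>, 0)

trainchal_large_data[2]
# (<PIL.Image.Image ...>, 0)

trainchal_large_data[38567]
# (<PIL.Image.Image ...>, 1)

trainchal_large_data[47891]
# (<PIL.Image.Image ...>, 2)

trainchal_small_data[0]
# (<PIL.Image.Image ...>, 0)

trainchal_small_data[1]
# (<PIL.Image.Image ...>, 0)

trainchal_small_data[2]
# (<PIL.Image.Image ...>, 0)

trainchal_small_data[38567]
# (<PIL.Image.Image ...>, 1)

trainchal_small_data[47891]
# (<PIL.Image.Image ...>, 2)

# The val split is not grouped by class.
val_large_data[0]
# (<PIL.Image.Image ...>, 165)

val_large_data[1]
# (<PIL.Image.Image ...>, 358)

val_large_data[2]
# (<PIL.Image.Image ...>, 93)

val_large_data[3]
# (<PIL.Image.Image ...>, 164)

val_large_data[4]
# (<PIL.Image.Image ...>, 289)

val_small_data[0]
# (<PIL.Image.Image ...>, 165)

val_small_data[1]
# (<PIL.Image.Image ...>, 358)

val_small_data[2]
# (<PIL.Image.Image ...>, 93)

val_small_data[3]
# (<PIL.Image.Image ...>, 164)

val_small_data[4]
# (<PIL.Image.Image ...>, 289)
```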
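The train examples above jump to indices such as 5000 and 38567 because the train splits are grouped by class, whereas the val labels come in no particular order. Given any sequence of labels, the first index of each class can be recovered as sketched below. `first_index_per_label` is a hypothetical helper. Feeding it a dataset's `targets` attribute is an assumption about torchvision's `Places365`, so the demo uses a small made-up list instead.

```python
def first_index_per_label(labels):
    """Map each label to the index where it first appears."""
    first = {}
    for index, label in enumerate(labels):
        # setdefault keeps the earliest index and ignores later repeats.
        first.setdefault(label, index)
    return first


# Made-up labels grouped by class, mimicking the layout of the train splits.
toy_labels = [0, 0, 0, 1, 1, 2]
print(first_index_per_label(toy_labels))
# {0: 0, 1: 3, 2: 5}
```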

```python
import matplotlib.pyplot as plt

def show_images(data, ims, main_title=None):
    # Draw up to ten samples in a 2x5 grid, titled with their labels.
    plt.figure(figsize=(12, 6))
    plt.suptitle(t=main_title, y=1.0, fontsize=14)
    for i, j in enumerate(ims, start=1):
        plt.subplot(2, 5, i)
        im, lab = data[j]
        plt.imshow(X=im)
        plt.title(label=lab)
    plt.tight_layout(h_pad=3.0)
    plt.show()

# The first three images of class 0, then the first image of classes 1 to 7.
trainstd_ims = (0, 1, 2, 5000, 10000, 15000, 20000, 25000, 30000, 35000)
trainchal_ims = (0, 1, 2, 38567, 47891, 74902, 98483, 137663, 150035, 161052)
val_ims = (0, 1, 2, 3, 4, 5, 6, 7, 8, 9)

show_images(data=trainstd_large_data, ims=trainstd_ims,
            main_title="trainstd_large_data")
show_images(data=trainstd_small_data, ims=trainstd_ims,
            main_title="trainstd_small_data")
show_images(data=trainchal_large_data, ims=trainchal_ims,
            main_title="trainchal_large_data")
show_images(data=trainchal_small_data, ims=trainchal_ims,
            main_title="trainchal_small_data")
show_images(data=val_large_data, ims=val_ims,
            main_title="val_large_data")
show_images(data=val_small_data, ims=val_ims,
            main_title="val_small_data")
```