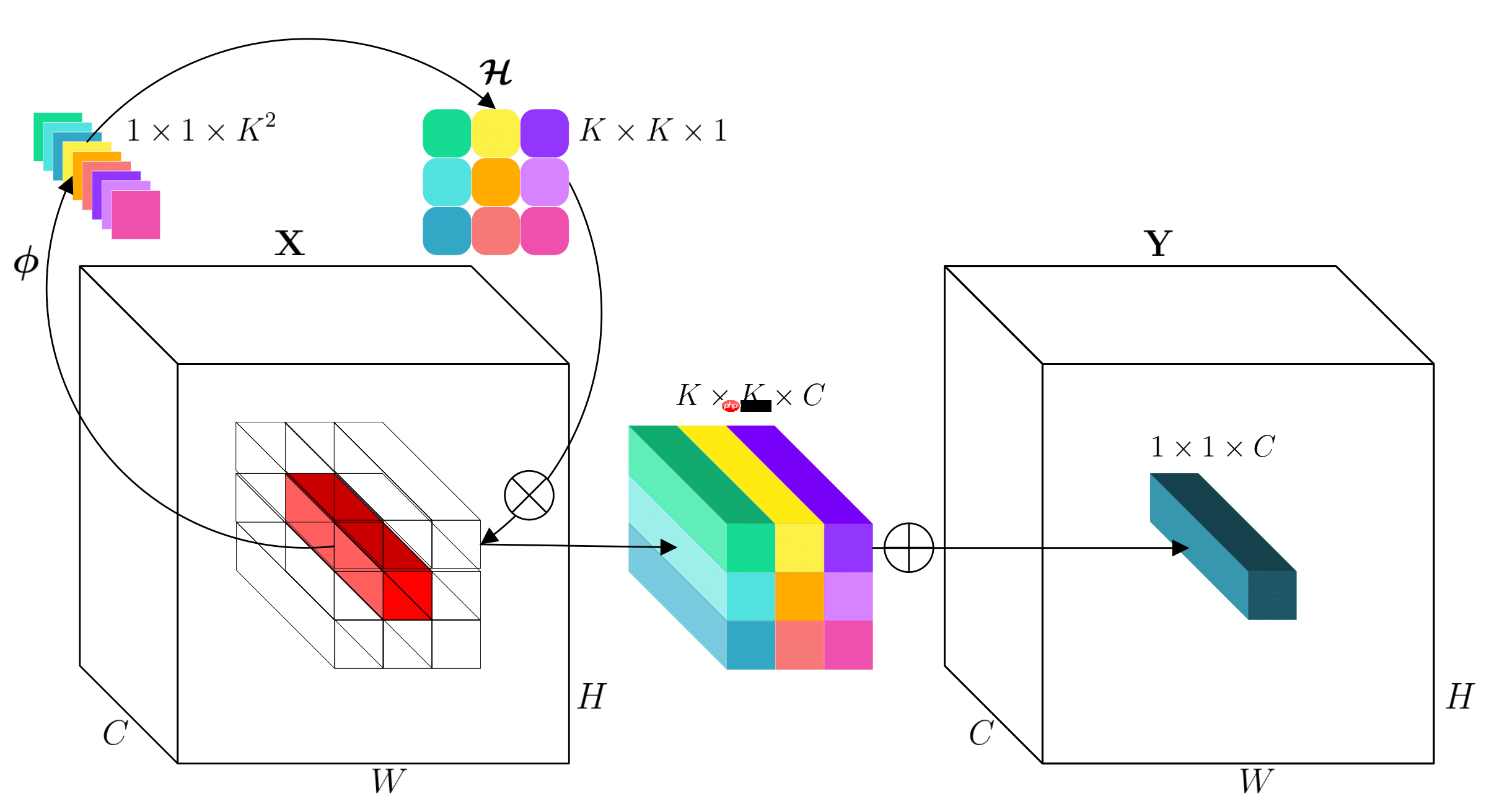

本文介绍用Layer方式搭建involution算子,以此魔改ResNet打造RedNet模型,已加入【Paddle-Image-Models】项目,含转换后的官方预训练参数,精度基本对齐。还展示了算子和模型的搭建代码、测试情况及精度验证结果,RedNet性能和效率优于ResNet等模型。

☞☞☞AI 智能聊天, 问答助手, AI 智能搜索, 免费无限量使用 DeepSeek R1 模型☜☜☜

论文:【Involution: Inverting the Inherence of Convolution for Visual Recognition】

代码:【d-li14/involution】

论文概要

import paddleimport paddle.nn as nnfrom paddle.vision.models import resnet

class involution(nn.Layer):

def __init__(self,

channels,

kernel_size,

stride):

super(involution, self).__init__()

self.kernel_size = kernel_size

self.stride = stride

self.channels = channels

reduction_ratio = 4

self.group_channels = 16

self.groups = self.channels // self.group_channels

self.conv1 = nn.Sequential(

('conv', nn.Conv2D(

in_channels=channels,

out_channels=channels // reduction_ratio,

kernel_size=1,

bias_attr=False

)),

('bn', nn.BatchNorm2D(channels // reduction_ratio)),

('activate', nn.ReLU())

)

self.conv2 = nn.Sequential(

('conv', nn.Conv2D(

in_channels=channels // reduction_ratio,

out_channels=kernel_size**2 * self.groups,

kernel_size=1,

stride=1))

) if stride > 1:

self.avgpool = nn.AvgPool2D(stride, stride) def forward(self, x):

weight = self.conv2(self.conv1(

x if self.stride == 1 else self.avgpool(x)))

b, c, h, w = weight.shape

weight = weight.reshape((

b, self.groups, self.kernel_size**2, h, w)).unsqueeze(2)

out = nn.functional.unfold(

x, self.kernel_size, strides=self.stride, paddings=(self.kernel_size-1)//2, dilations=1)

out = out.reshape(

(b, self.groups, self.group_channels, self.kernel_size**2, h, w))

out = (weight * out).sum(axis=3).reshape((b, self.channels, h, w)) return outinv = involution(128, 7, 1) paddle.summary(inv, (1, 128, 64, 64)) out = inv(paddle.randn((1, 128, 64, 64)))print(out.shape)

---------------------------------------------------------------------------

Layer (type) Input Shape Output Shape Param #

===========================================================================

Conv2D-1 [[1, 128, 64, 64]] [1, 32, 64, 64] 4,096

BatchNorm2D-1 [[1, 32, 64, 64]] [1, 32, 64, 64] 128

ReLU-1 [[1, 32, 64, 64]] [1, 32, 64, 64] 0

Conv2D-2 [[1, 32, 64, 64]] [1, 392, 64, 64] 12,936

===========================================================================

Total params: 17,160

Trainable params: 17,032

Non-trainable params: 128

---------------------------------------------------------------------------

Input size (MB): 2.00

Forward/backward pass size (MB): 15.25

Params size (MB): 0.07

Estimated Total Size (MB): 17.32

---------------------------------------------------------------------------

[1, 128, 64, 64]/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/norm.py:648: UserWarning: When training, we now always track global mean and variance. "When training, we now always track global mean and variance.")

| Model | Params(M) | FLOPs(G) | Top-1 (%) | Top-5 (%) |

|---|---|---|---|---|

| RedNet-26 | 9.23(32.8%↓) | 1.73(29.2%↓) | 75.96 | 93.19 |

| RedNet-38 | 12.39(36.7%↓) | 2.22(31.3%↓) | 77.48 | 93.57 |

| RedNet-50 | 15.54(39.5%↓) | 2.71(34.1%↓) | 78.35 | 94.13 |

| RedNet-101 | 25.65(42.6%↓) | 4.74(40.5%↓) | 78.92 | 94.35 |

| RedNet-152 | 33.99(43.5%↓) | 6.79(41.4%↓) | 79.12 | 94.38 |

class BottleneckBlock(resnet.BottleneckBlock):

def __init__(self,

inplanes,

planes,

stride=1,

downsample=None,

groups=1,

base_width=64,

dilation=1,

norm_layer=None):

super(BottleneckBlock, self).__init__(inplanes, planes, stride,

downsample, groups, base_width, dilation, norm_layer)

width = int(planes * (base_width / 64.)) * groups

self.conv2 = involution(width, 7, stride)

class RedNet(resnet.ResNet):

def __init__(self, block, depth, num_classes=1000, with_pool=True):

super(RedNet, self).__init__(block=block, depth=50,

num_classes=num_classes, with_pool=with_pool)

layer_cfg = { 26: [1, 2, 4, 1], 38: [2, 3, 5, 2], 50: [3, 4, 6, 3], 101: [3, 4, 23, 3], 152: [3, 8, 36, 3]

}

layers = layer_cfg[depth]

self.conv1 = None

self.bn1 = None

self.relu = None

self.inplanes = 64

self.stem = nn.Sequential(

nn.Sequential(

('conv', nn.Conv2D(

in_channels=3,

out_channels=self.inplanes // 2,

kernel_size=3,

stride=2,

padding=1,

bias_attr=False

)),

('bn', nn.BatchNorm2D(self.inplanes // 2)),

('activate', nn.ReLU())

),

involution(self.inplanes // 2, 3, 1),

nn.BatchNorm2D(self.inplanes // 2),

nn.ReLU(),

nn.Sequential(

('conv', nn.Conv2D(

in_channels=self.inplanes // 2,

out_channels=self.inplanes,

kernel_size=3,

stride=1,

padding=1,

bias_attr=False

)),

('bn', nn.BatchNorm2D(self.inplanes)),

('activate', nn.ReLU())

)

)

self.layer1 = self._make_layer(block, 64, layers[0])

self.layer2 = self._make_layer(block, 128, layers[1], stride=2)

self.layer3 = self._make_layer(block, 256, layers[2], stride=2)

self.layer4 = self._make_layer(block, 512, layers[3], stride=2) def forward(self, x):

x = self.stem(x)

x = self.maxpool(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x) if self.with_pool:

x = self.avgpool(x) if self.num_classes > 0:

x = paddle.flatten(x, 1)

x = self.fc(x) return xmodel = RedNet(BottleneckBlock, 26) paddle.summary(model, (1, 3, 224, 224)) out = model(paddle.randn((1, 3, 224, 224)))print(out.shape)

-------------------------------------------------------------------------------

Layer (type) Input Shape Output Shape Param #

===============================================================================

Conv2D-88 [[1, 3, 224, 224]] [1, 32, 112, 112] 864

BatchNorm2D-71 [[1, 32, 112, 112]] [1, 32, 112, 112] 128

ReLU-35 [[1, 32, 112, 112]] [1, 32, 112, 112] 0

Conv2D-89 [[1, 32, 112, 112]] [1, 8, 112, 112] 256

BatchNorm2D-72 [[1, 8, 112, 112]] [1, 8, 112, 112] 32

ReLU-36 [[1, 8, 112, 112]] [1, 8, 112, 112] 0

Conv2D-90 [[1, 8, 112, 112]] [1, 18, 112, 112] 162

involution-18 [[1, 32, 112, 112]] [1, 32, 112, 112] 0

BatchNorm2D-73 [[1, 32, 112, 112]] [1, 32, 112, 112] 128

ReLU-37 [[1, 32, 112, 112]] [1, 32, 112, 112] 0

Conv2D-91 [[1, 32, 112, 112]] [1, 64, 112, 112] 18,432

BatchNorm2D-74 [[1, 64, 112, 112]] [1, 64, 112, 112] 256

ReLU-38 [[1, 64, 112, 112]] [1, 64, 112, 112] 0

MaxPool2D-1 [[1, 64, 112, 112]] [1, 64, 56, 56] 0

Conv2D-93 [[1, 64, 56, 56]] [1, 64, 56, 56] 4,096

BatchNorm2D-76 [[1, 64, 56, 56]] [1, 64, 56, 56] 256

ReLU-39 [[1, 256, 56, 56]] [1, 256, 56, 56] 0

Conv2D-96 [[1, 64, 56, 56]] [1, 16, 56, 56] 1,024

BatchNorm2D-79 [[1, 16, 56, 56]] [1, 16, 56, 56] 64

ReLU-40 [[1, 16, 56, 56]] [1, 16, 56, 56] 0

Conv2D-97 [[1, 16, 56, 56]] [1, 196, 56, 56] 3,332

involution-19 [[1, 64, 56, 56]] [1, 64, 56, 56] 0

BatchNorm2D-77 [[1, 64, 56, 56]] [1, 64, 56, 56] 256

Conv2D-95 [[1, 64, 56, 56]] [1, 256, 56, 56] 16,384

BatchNorm2D-78 [[1, 256, 56, 56]] [1, 256, 56, 56] 1,024

Conv2D-92 [[1, 64, 56, 56]] [1, 256, 56, 56] 16,384

BatchNorm2D-75 [[1, 256, 56, 56]] [1, 256, 56, 56] 1,024

BottleneckBlock-17 [[1, 64, 56, 56]] [1, 256, 56, 56] 0

Conv2D-99 [[1, 256, 56, 56]] [1, 128, 56, 56] 32,768

BatchNorm2D-81 [[1, 128, 56, 56]] [1, 128, 56, 56] 512

ReLU-41 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

AvgPool2D-4 [[1, 128, 56, 56]] [1, 128, 28, 28] 0

Conv2D-102 [[1, 128, 28, 28]] [1, 32, 28, 28] 4,096

BatchNorm2D-84 [[1, 32, 28, 28]] [1, 32, 28, 28] 128

ReLU-42 [[1, 32, 28, 28]] [1, 32, 28, 28] 0

Conv2D-103 [[1, 32, 28, 28]] [1, 392, 28, 28] 12,936

involution-20 [[1, 128, 56, 56]] [1, 128, 28, 28] 0

BatchNorm2D-82 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

Conv2D-101 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536

BatchNorm2D-83 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

Conv2D-98 [[1, 256, 56, 56]] [1, 512, 28, 28] 131,072

BatchNorm2D-80 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

BottleneckBlock-18 [[1, 256, 56, 56]] [1, 512, 28, 28] 0

Conv2D-104 [[1, 512, 28, 28]] [1, 128, 28, 28] 65,536

BatchNorm2D-85 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

ReLU-43 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-107 [[1, 128, 28, 28]] [1, 32, 28, 28] 4,096

BatchNorm2D-88 [[1, 32, 28, 28]] [1, 32, 28, 28] 128

ReLU-44 [[1, 32, 28, 28]] [1, 32, 28, 28] 0

Conv2D-108 [[1, 32, 28, 28]] [1, 392, 28, 28] 12,936

involution-21 [[1, 128, 28, 28]] [1, 128, 28, 28] 0

BatchNorm2D-86 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

Conv2D-106 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536

BatchNorm2D-87 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

BottleneckBlock-19 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-110 [[1, 512, 28, 28]] [1, 256, 28, 28] 131,072

BatchNorm2D-90 [[1, 256, 28, 28]] [1, 256, 28, 28] 1,024

ReLU-45 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

AvgPool2D-5 [[1, 256, 28, 28]] [1, 256, 14, 14] 0

Conv2D-113 [[1, 256, 14, 14]] [1, 64, 14, 14] 16,384

BatchNorm2D-93 [[1, 64, 14, 14]] [1, 64, 14, 14] 256

ReLU-46 [[1, 64, 14, 14]] [1, 64, 14, 14] 0

Conv2D-114 [[1, 64, 14, 14]] [1, 784, 14, 14] 50,960

involution-22 [[1, 256, 28, 28]] [1, 256, 14, 14] 0

BatchNorm2D-91 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-112 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-92 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

Conv2D-109 [[1, 512, 28, 28]] [1, 1024, 14, 14] 524,288

BatchNorm2D-89 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-20 [[1, 512, 28, 28]] [1, 1024, 14, 14] 0

Conv2D-115 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-94 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-47 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-118 [[1, 256, 14, 14]] [1, 64, 14, 14] 16,384

BatchNorm2D-97 [[1, 64, 14, 14]] [1, 64, 14, 14] 256

ReLU-48 [[1, 64, 14, 14]] [1, 64, 14, 14] 0

Conv2D-119 [[1, 64, 14, 14]] [1, 784, 14, 14] 50,960

involution-23 [[1, 256, 14, 14]] [1, 256, 14, 14] 0

BatchNorm2D-95 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-117 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-96 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-21 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-120 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-98 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-49 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-123 [[1, 256, 14, 14]] [1, 64, 14, 14] 16,384

BatchNorm2D-101 [[1, 64, 14, 14]] [1, 64, 14, 14] 256

ReLU-50 [[1, 64, 14, 14]] [1, 64, 14, 14] 0

Conv2D-124 [[1, 64, 14, 14]] [1, 784, 14, 14] 50,960

involution-24 [[1, 256, 14, 14]] [1, 256, 14, 14] 0

BatchNorm2D-99 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-122 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-100 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-22 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-125 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-102 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-51 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-128 [[1, 256, 14, 14]] [1, 64, 14, 14] 16,384

BatchNorm2D-105 [[1, 64, 14, 14]] [1, 64, 14, 14] 256

ReLU-52 [[1, 64, 14, 14]] [1, 64, 14, 14] 0

Conv2D-129 [[1, 64, 14, 14]] [1, 784, 14, 14] 50,960

involution-25 [[1, 256, 14, 14]] [1, 256, 14, 14] 0

BatchNorm2D-103 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-127 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-104 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-23 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-131 [[1, 1024, 14, 14]] [1, 512, 14, 14] 524,288

BatchNorm2D-107 [[1, 512, 14, 14]] [1, 512, 14, 14] 2,048

ReLU-53 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 0

AvgPool2D-6 [[1, 512, 14, 14]] [1, 512, 7, 7] 0

Conv2D-134 [[1, 512, 7, 7]] [1, 128, 7, 7] 65,536

BatchNorm2D-110 [[1, 128, 7, 7]] [1, 128, 7, 7] 512

ReLU-54 [[1, 128, 7, 7]] [1, 128, 7, 7] 0

Conv2D-135 [[1, 128, 7, 7]] [1, 1568, 7, 7] 202,272

involution-26 [[1, 512, 14, 14]] [1, 512, 7, 7] 0

BatchNorm2D-108 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,048

Conv2D-133 [[1, 512, 7, 7]] [1, 2048, 7, 7] 1,048,576

BatchNorm2D-109 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 8,192

Conv2D-130 [[1, 1024, 14, 14]] [1, 2048, 7, 7] 2,097,152

BatchNorm2D-106 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 8,192

BottleneckBlock-24 [[1, 1024, 14, 14]] [1, 2048, 7, 7] 0

AdaptiveAvgPool2D-1 [[1, 2048, 7, 7]] [1, 2048, 1, 1] 0

Linear-1 [[1, 2048]] [1, 1000] 2,049,000

===============================================================================

Total params: 9,264,318

Trainable params: 9,202,014

Non-trainable params: 62,304

-------------------------------------------------------------------------------

Input size (MB): 0.57

Forward/backward pass size (MB): 188.62

Params size (MB): 35.34

Estimated Total Size (MB): 224.53

-------------------------------------------------------------------------------

[1, 1000]!pip install ppim==1.0.1 -i https://pypi.python.org/pypi

# 解压数据集!mkdir ~/data/ILSVRC2012 !tar -xf ~/data/data68594/ILSVRC2012_img_val.tar -C ~/data/ILSVRC2012

import osimport cv2import numpy as npimport paddleimport paddle.vision.transforms as Tfrom ppim import rednet26, rednet38, rednet50, rednet101, rednet152# 构建数据集# backend cv2class ILSVRC2012(paddle.io.Dataset):

def __init__(self, root, label_list, transform):

self.transform = transform

self.root = root

self.label_list = label_list

self.load_datas() def load_datas(self):

self.imgs = []

self.labels = [] with open(self.label_list, 'r') as f: for line in f:

img, label = line[:-1].split(' ')

self.imgs.append(os.path.join(self.root, img))

self.labels.append(int(label)) def __getitem__(self, idx):

label = self.labels[idx]

image = self.imgs[idx]

image = cv2.imread(image)

image = self.transform(image) return image.astype('float32'), np.array(label).astype('int64') def __len__(self):

return len(self.imgs)# 配置模型model, val_transforms = rednet26(pretrained=True)

model = paddle.Model(model)

model.prepare(metrics=paddle.metric.Accuracy(topk=(1, 5)))# 配置数据集val_dataset = ILSVRC2012('data/ILSVRC2012', transform=val_transforms, label_list='data/data68594/val_list.txt')# 模型验证model.evaluate(val_dataset, batch_size=16){'acc_top1': 0.75956, 'acc_top5': 0.9319}以上就是involution:大家一起内卷起来吧的详细内容,更多请关注php中文网其它相关文章!

每个人都需要一台速度更快、更稳定的 PC。随着时间的推移,垃圾文件、旧注册表数据和不必要的后台进程会占用资源并降低性能。幸运的是,许多工具可以让 Windows 保持平稳运行。

Copyright 2014-2025 https://www.php.cn/ All Rights Reserved | php.cn | 湘ICP备2023035733号