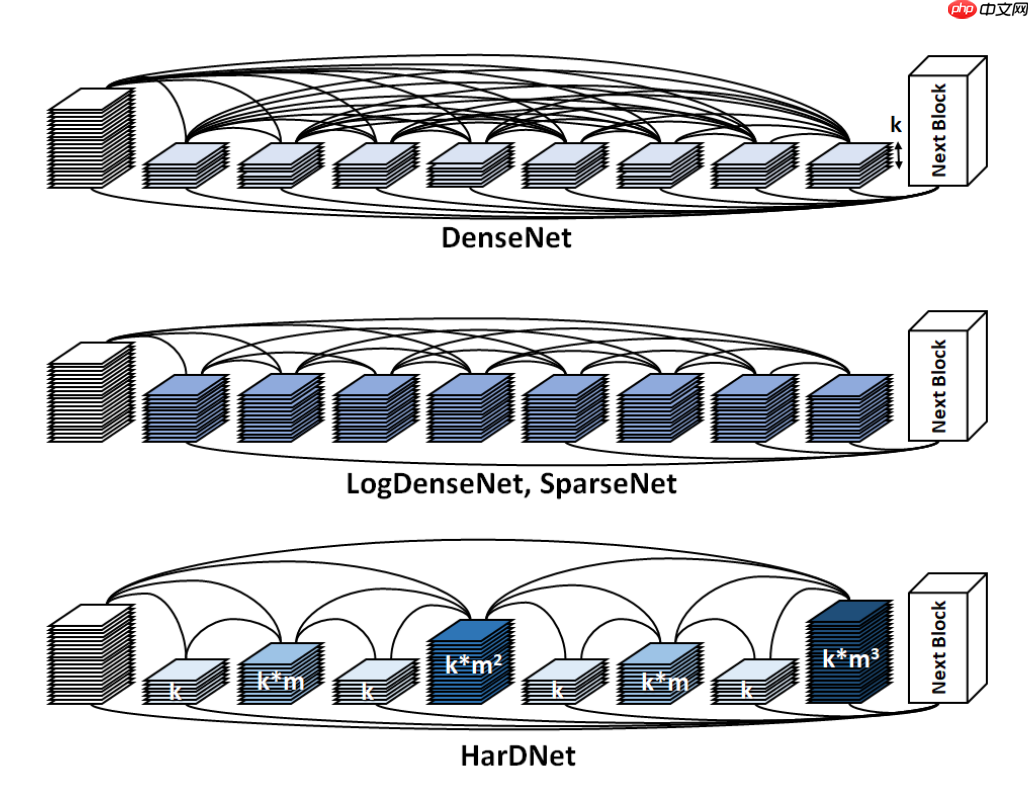

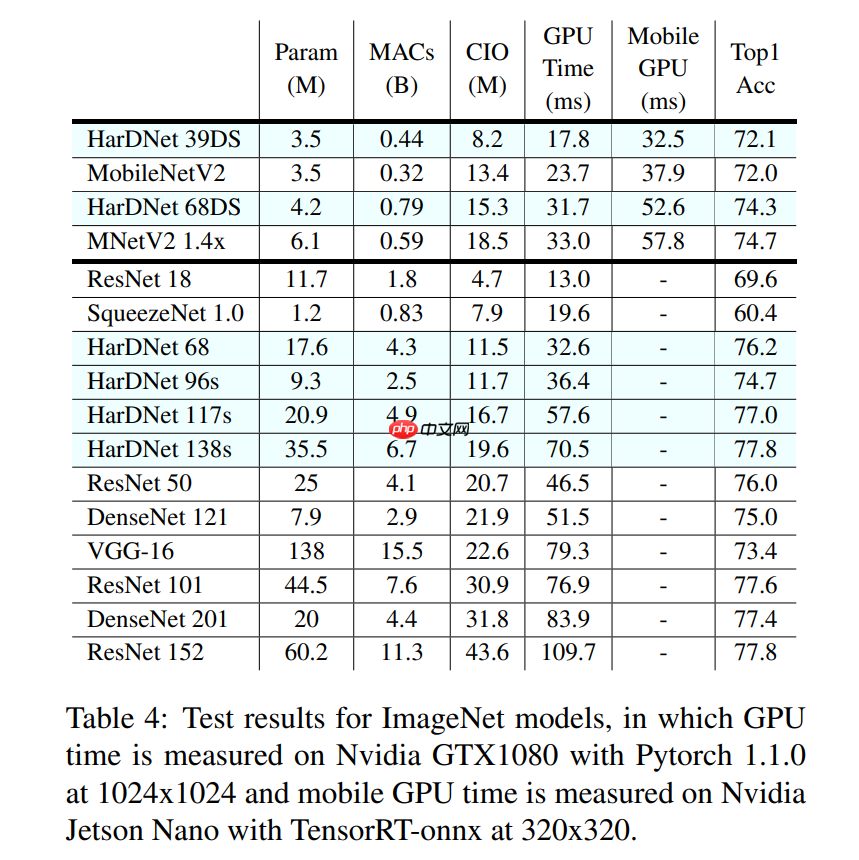

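HarDNet is a low-memory-traffic network built around Harmonic Dense Blocks; its inference time is 30%-45% lower than that of several mainstream models. This project ports the official code and pretrained models to Paddle 2.0, and the forward outputs and accuracy match the official implementation. Several variants are provided, with 3.5M-36.7M parameters and Top-1 accuracy of 72.08%-78.04%. The validation set uses a specific preprocessing pipeline, and training on Cifar100 is also demonstrated.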


Paper: HarDNet: A Low Memory Traffic Network

Official implementation: PingoLH/Pytorch-HarDNet

Validation set preprocessing:

    # backend: pil
    # input_size: 224x224
    transforms = T.Compose([
        T.Resize(256),
        T.CenterCrop(224),
        T.ToTensor(),
        T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
    ])

Model details:

| Model | Model Name | Params (M) | FLOPs (G) | Top-1 (%) | Top-5 (%) | Pretrained Model |
|---|---|---|---|---|---|---|
| HarDNet-68 | hardnet_68 | 17.6 | 4.3 | 76.48 | 93.01 | Download |
| HarDNet-85 | hardnet_85 | 36.7 | 9.1 | 78.04 | 93.89 | Download |
| HarDNet-39-ds | hardnet_39_ds | 3.5 | 0.4 | 72.08 | 90.43 | Download |
| HarDNet-68-ds | hardnet_68_ds | 4.2 | 0.8 | 74.29 | 91.87 | Download |
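The harmonic connection pattern that gives HarDNet its name can be read off the `get_link` method in the model code. As a minimal sketch, assuming the same rule as that method (layer k links back to layer k - 2^i whenever 2^i divides k, and the output width is multiplied by `grmul` for each link beyond the first), the standalone functions below reproduce the links and channel counts without needing Paddle. The example values (64 input channels, growth rate 14, multiplier 1.7, 8 layers) are taken from the first HarDNet-68 block in the configuration; `block_out_channels` is a helper name introduced here for illustration only.

```python
def get_link(layer, base_ch, growth_rate, grmul):
    """Return (out_channels, in_channels, links) for one layer of a HarD block."""
    if layer == 0:
        # Layer 0 is the block input itself.
        return base_ch, 0, []
    out_channels = growth_rate
    link = []
    for i in range(10):
        dv = 2 ** i
        if layer % dv == 0:
            link.append(layer - dv)
            if i > 0:
                # Each extra harmonic link widens the layer by grmul.
                out_channels *= grmul
    # Truncate to an even channel count, as in the model code.
    out_channels = int(int(out_channels + 1) / 2) * 2
    in_channels = sum(get_link(k, base_ch, growth_rate, grmul)[0] for k in link)
    return out_channels, in_channels, link


def block_out_channels(base_ch, growth_rate, grmul, n_layers):
    """Sum the widths of the layers whose outputs the block concatenates
    (every odd-numbered layer plus the last one, with keepBase=False)."""
    total = 0
    for i in range(n_layers):
        outch, _, _ = get_link(i + 1, base_ch, growth_rate, grmul)
        if i % 2 == 0 or i == n_layers - 1:
            total += outch
    return total


if __name__ == "__main__":
    for layer in range(1, 9):
        outch, inch, link = get_link(layer, 64, 14, 1.7)
        print(f"layer {layer}: links={link} in={inch} out={outch}")
    print("block output channels:", block_out_channels(64, 14, 1.7, 8))
```

Layers whose index is a power of two (here 2, 4 and 8) gather the most inputs and are the widest, while odd layers connect only to their immediate predecessor.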

    import paddle
    import paddle.nn as nn


    # ConvBN layer (convolution + batch norm + activation)
    def ConvLayer(in_channels, out_channels, kernel_size=3, stride=1, bias_attr=False):
        layer = nn.Sequential(
            ('conv', nn.Conv2D(in_channels, out_channels, kernel_size=kernel_size,
                               stride=stride, padding=kernel_size//2, groups=1, bias_attr=bias_attr)),
            ('norm', nn.BatchNorm2D(out_channels)),
            ('relu', nn.ReLU6())
        )
        return layer


    # dwConvBN layer (depthwise convolution + batch norm)
    def DWConvLayer(in_channels, out_channels, kernel_size=3, stride=1, bias_attr=False):
        layer = nn.Sequential(
            ('dwconv', nn.Conv2D(in_channels, out_channels, kernel_size=kernel_size,
                                 stride=stride, padding=1, groups=out_channels, bias_attr=bias_attr)),
            ('norm', nn.BatchNorm2D(out_channels))
        )
        return layer


    # Combined convolution layer (ConvBN + dwConvBN)
    def CombConvLayer(in_channels, out_channels, kernel_size=1, stride=1):
        layer = nn.Sequential(
            ('layer1', ConvLayer(in_channels, out_channels, kernel_size=kernel_size)),
            ('layer2', DWConvLayer(out_channels, out_channels, stride=stride))
        )
        return layer


    # HarD Block
    class HarDBlock(nn.Layer):
        def __init__(self, in_channels, growth_rate, grmul, n_layers, keepBase=False, residual_out=False, dwconv=False):
            super().__init__()
            self.keepBase = keepBase
            self.links = []
            layers_ = []
            self.out_channels = 0
            for i in range(n_layers):
                outch, inch, link = self.get_link(
                    i+1, in_channels, growth_rate, grmul)
                self.links.append(link)
                use_relu = residual_out
                if dwconv:
                    layers_.append(CombConvLayer(inch, outch))
                else:
                    layers_.append(ConvLayer(inch, outch))
                if (i % 2 == 0) or (i == n_layers - 1):
                    self.out_channels += outch
            self.layers = nn.LayerList(layers_)

        def get_link(self, layer, base_ch, growth_rate, grmul):
            if layer == 0:
                return base_ch, 0, []
            out_channels = growth_rate
            link = []
            for i in range(10):
                dv = 2 ** i
                if layer % dv == 0:
                    k = layer - dv
                    link.append(k)
                    if i > 0:
                        out_channels *= grmul
            out_channels = int(int(out_channels + 1) / 2) * 2
            in_channels = 0
            for i in link:
                ch, _, _ = self.get_link(i, base_ch, growth_rate, grmul)
                in_channels += ch
            return out_channels, in_channels, link

        def forward(self, x):
            layers_ = [x]
            for layer in range(len(self.layers)):
                link = self.links[layer]
                tin = []
                for i in link:
                    tin.append(layers_[i])
                if len(tin) > 1:
                    x = paddle.concat(tin, 1)
                else:
                    x = tin[0]
                out = self.layers[layer](x)
                layers_.append(out)
            t = len(layers_)
            out_ = []
            for i in range(t):
                if (i == 0 and self.keepBase) or \
                   (i == t-1) or (i % 2 == 1):
                    out_.append(layers_[i])
            out = paddle.concat(out_, 1)
            return out


    # HarDNet
    class HarDNet(nn.Layer):
        def __init__(self, depth_wise=False, arch=85, class_dim=1000, with_pool=True):
            super().__init__()
            first_ch = [32, 64]
            second_kernel = 3
            max_pool = True
            grmul = 1.7
            drop_rate = 0.1

            # HarDNet68
            ch_list = [128, 256, 320, 640, 1024]
            gr = [14, 16, 20, 40, 160]
            n_layers = [8, 16, 16, 16, 4]
            downSamp = [1, 0, 1, 1, 0]

            if arch == 85:
                # HarDNet85
                first_ch = [48, 96]
                ch_list = [192, 256, 320, 480, 720, 1280]
                gr = [24, 24, 28, 36, 48, 256]
                n_layers = [8, 16, 16, 16, 16, 4]
                downSamp = [1, 0, 1, 0, 1, 0]
                drop_rate = 0.2
            elif arch == 39:
                # HarDNet39
                first_ch = [24, 48]
                ch_list = [96, 320, 640, 1024]
                grmul = 1.6
                gr = [16, 20, 64, 160]
                n_layers = [4, 16, 8, 4]
                downSamp = [1, 1, 1, 0]

            if depth_wise:
                second_kernel = 1
                max_pool = False
                drop_rate = 0.05

            blks = len(n_layers)
            self.base = nn.LayerList([])

            # First Layer: Standard Conv3x3, Stride=2
            self.base.append(
                ConvLayer(in_channels=3, out_channels=first_ch[0], kernel_size=3,
                          stride=2, bias_attr=False))

            # Second Layer
            self.base.append(
                ConvLayer(first_ch[0], first_ch[1], kernel_size=second_kernel))

            # Maxpooling or DWConv3x3 downsampling
            if max_pool:
                self.base.append(nn.MaxPool2D(kernel_size=3, stride=2, padding=1))
            else:
                self.base.append(DWConvLayer(first_ch[1], first_ch[1], stride=2))

            # Build all HarDNet blocks
            ch = first_ch[1]
            for i in range(blks):
                blk = HarDBlock(ch, gr[i], grmul, n_layers[i], dwconv=depth_wise)
                ch = blk.out_channels
                self.base.append(blk)

                if i == blks-1 and arch == 85:
                    self.base.append(nn.Dropout(0.1))

                self.base.append(ConvLayer(ch, ch_list[i], kernel_size=1))
                ch = ch_list[i]
                if downSamp[i] == 1:
                    if max_pool:
                        self.base.append(nn.MaxPool2D(kernel_size=2, stride=2))
                    else:
                        self.base.append(DWConvLayer(ch, ch, stride=2))

            ch = ch_list[blks-1]

            layers = []
            if with_pool:
                layers.append(nn.AdaptiveAvgPool2D((1, 1)))
            if class_dim > 0:
                layers.append(nn.Flatten())
                layers.append(nn.Dropout(drop_rate))
                layers.append(nn.Linear(ch, class_dim))
            self.base.append(nn.Sequential(*layers))

        def forward(self, x):
            for layer in self.base:
                x = layer(x)
            return x


    def hardnet_39_ds(pretrained=False, **kwargs):
        model = HarDNet(arch=39, depth_wise=True, **kwargs)
        if pretrained:
            params = paddle.load('/home/aistudio/data/data78450/hardnet_39_ds.pdparams')
            model.set_dict(params)
        return model


    def hardnet_68(pretrained=False, **kwargs):
        model = HarDNet(arch=68, **kwargs)
        if pretrained:
            params = paddle.load('/home/aistudio/data/data78450/hardnet_68.pdparams')
            model.set_dict(params)
        return model


    def hardnet_68_ds(pretrained=False, **kwargs):
        model = HarDNet(arch=68, depth_wise=True, **kwargs)
        if pretrained:
            params = paddle.load('/home/aistudio/data/data78450/hardnet_68_ds.pdparams')
            model.set_dict(params)
        return model


    def hardnet_85(pretrained=False, **kwargs):
        model = HarDNet(arch=85, **kwargs)
        if pretrained:
            params = paddle.load('/home/aistudio/data/data78450/hardnet_85.pdparams')
            model.set_dict(params)
        return model

Extract the dataset:

    !mkdir ~/data/ILSVRC2012
    !tar -xf ~/data/data68594/ILSVRC2012_img_val.tar -C ~/data/ILSVRC2012
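To make the validation preprocessing more concrete, the arithmetic behind `Resize(256)`, `CenterCrop(224)` and `Normalize` can be sketched in plain Python. This is only an illustration under stated assumptions: it assumes an integer `Resize` scales the shorter side to 256 while keeping the aspect ratio, uses floor division for the crop offset (the library may round differently when the margin is odd), and assumes `ToTensor` maps 8-bit values to [0, 1]. The helper names are hypothetical and not part of Paddle.

```python
# ImageNet statistics used by the Normalize step in the article.
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def resize_shorter_side(width, height, size=256):
    """Scale so the shorter side equals `size`, keeping the aspect ratio."""
    if width <= height:
        return size, int(size * height / width)
    return int(size * width / height), size


def center_crop_box(width, height, size=224):
    """Return (left, top, right, bottom) of a centered size x size crop."""
    left = (width - size) // 2
    top = (height - size) // 2
    return left, top, left + size, top + size


def normalize_pixel(rgb):
    """Map 8-bit RGB to [0, 1], then standardize each channel."""
    return [(v / 255.0 - m) / s for v, m, s in zip(rgb, MEAN, STD)]


if __name__ == "__main__":
    w, h = resize_shorter_side(500, 375)
    print("resized:", (w, h))
    print("crop box:", center_crop_box(w, h))
    print("white pixel:", normalize_pixel([255, 255, 255]))
```

After these steps every validation image is a 224x224 crop whose channels are roughly zero-centered, which is the input the pretrained weights expect.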

Model validation:

    import os
    import cv2
    import numpy as np
    import paddle
    import paddle.vision.transforms as T
    from PIL import Image


    # Build the dataset
    class ILSVRC2012(paddle.io.Dataset):
        def __init__(self, root, label_list, transform, backend='pil'):
            self.transform = transform
            self.root = root
            self.label_list = label_list
            self.backend = backend
            self.load_datas()

        def load_datas(self):
            self.imgs = []
            self.labels = []
            with open(self.label_list, 'r') as f:
                for line in f:
                    # strip() also handles a final line without a trailing newline
                    img, label = line.strip().split(' ')
                    self.imgs.append(os.path.join(self.root, img))
                    self.labels.append(int(label))

        def __getitem__(self, idx):
            label = self.labels[idx]
            image = self.imgs[idx]
            if self.backend == 'cv2':
                image = cv2.imread(image)
            else:
                image = Image.open(image).convert('RGB')
            image = self.transform(image)
            return image.astype('float32'), np.array(label).astype('int64')

        def __len__(self):
            return len(self.imgs)


    # Configure the model
    val_transforms = T.Compose([
        T.Resize(256),
        T.CenterCrop(224),
        T.ToTensor(),
        T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
    ])

    model = hardnet_68(pretrained=True)
    model = paddle.Model(model)
    model.prepare(metrics=paddle.metric.Accuracy(topk=(1, 5)))

    # Configure the dataset
    val_dataset = ILSVRC2012('data/ILSVRC2012', transform=val_transforms, label_list='data/data68594/val_list.txt', backend='pil')

    # Validate the model
    acc = model.evaluate(val_dataset, batch_size=512, num_workers=0, verbose=1)
    print(acc)

Output:

    Eval begin...
    The loss value printed in the log is the current batch, and the metric is the average value of previous step.
    /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/tensor/creation.py:143: DeprecationWarning: `np.object` is a deprecated alias for the builtin `object`. To silence this warning, use `object` by itself. Doing this will not modify any behavior and is safe.
    Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations
      if data.dtype == np.object:
    /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dataloader/dataloader_iter.py:89: DeprecationWarning: `np.bool` is a deprecated alias for the builtin `bool`. To silence this warning, use `bool` by itself. Doing this will not modify any behavior and is safe. If you specifically wanted the numpy scalar type, use `np.bool_` here.
    Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations
      if isinstance(slot[0], (np.ndarray, np.bool, numbers.Number)):
    /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/layers/utils.py:77: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working
      return (isinstance(seq, collections.Sequence) and
    step 98/98 [==============================] - acc_top1: 0.7648 - acc_top5: 0.9301 - 6s/step
    Eval samples: 50000

    {'acc_top1': 0.76484, 'acc_top5': 0.9301}

Training on Cifar100:

    # Import Paddle
    import paddle
    import paddle.nn as nn
    import paddle.vision.transforms as T
    from paddle.vision import Cifar100

    # Load the model
    model = hardnet_68(pretrained=True, class_dim=100)

    # Wrap the model with the high-level API
    model = paddle.Model(model)

    # Configure the optimizer
    opt = paddle.optimizer.Adam(learning_rate=0.001, parameters=model.parameters())

    # Configure the loss function
    loss = nn.CrossEntropyLoss()

    # Configure the evaluation metric
    metric = paddle.metric.Accuracy(topk=(1, 5))

    # Prepare the model
    model.prepare(optimizer=opt, loss=loss, metrics=metric)

    # Configure data augmentation
    train_transforms = T.Compose([
        T.Resize(256),
        T.RandomCrop(224),
        T.ToTensor(),
        T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
    ])
    val_transforms = T.Compose([
        T.Resize(256),
        T.CenterCrop(224),
        T.ToTensor(),
        T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
    ])

    # Configure the datasets
    train_dataset = Cifar100(mode='train', transform=train_transforms, backend='pil')
    val_dataset = Cifar100(mode='test', transform=val_transforms, backend='pil')

    # Fine-tune the model
    model.fit(
        train_data=train_dataset,
        eval_data=val_dataset,
        batch_size=256,
        epochs=1,
        eval_freq=1,
        log_freq=1,
        save_dir='save_models',
        save_freq=1,
        verbose=1,
        drop_last=False,
        shuffle=True,
        num_workers=0)

Output:

    /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1303: UserWarning: Skip loading for base.16.3.weight. base.16.3.weight receives a shape [1024, 1000], but the expected shape is [1024, 100].
      warnings.warn(("Skip loading for {}. ".format(key) + str(err)))
    /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1303: UserWarning: Skip loading for base.16.3.bias. base.16.3.bias receives a shape [1000], but the expected shape is [100].
      warnings.warn(("Skip loading for {}. ".format(key) + str(err)))
    Cache file /home/aistudio/.cache/paddle/dataset/cifar/cifar-100-python.tar.gz not found, downloading https://dataset.bj.bcebos.com/cifar/cifar-100-python.tar.gz
    Begin to download
    Download finished
    The loss value printed in the log is the current step, and the metric is the average value of previous step.
    Epoch 1/1
    /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/norm.py:648: UserWarning: When training, we now always track global mean and variance.
      "When training, we now always track global mean and variance.")
    step 196/196 [==============================] - loss: 1.3977 - acc_top1: 0.5135 - acc_top5: 0.8123 - 2s/step
    save checkpoint at /home/aistudio/save_models/0
    Eval begin...
    The loss value printed in the log is the current batch, and the metric is the average value of previous step.
    step 40/40 [==============================] - loss: 1.1718 - acc_top1: 0.6054 - acc_top5: 0.8826 - 1s/step
    Eval samples: 10000
    save checkpoint at /home/aistudio/save_models/final
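The `acc_top1` and `acc_top5` figures and the step counts in the logs can be sanity-checked with a few lines of plain Python. The sketch below is not Paddle's implementation of `paddle.metric.Accuracy`; it only illustrates the usual definition of top-k accuracy (a sample counts as correct when its label is among the k highest-scoring classes) and the step count for a loader that keeps the last partial batch. The function names and the toy scores are made up for illustration.

```python
import math


def topk_accuracy(scores, labels, k):
    """Fraction of samples whose label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


def steps_per_epoch(num_samples, batch_size, drop_last=False):
    """Number of batches needed to cover num_samples."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


if __name__ == "__main__":
    # Toy scores for 3 samples over 3 classes (illustrative values only).
    scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
    labels = [1, 1, 0]
    print("top-1:", topk_accuracy(scores, labels, 1))
    print("top-2:", topk_accuracy(scores, labels, 2))
    # Sample counts and batch sizes taken from the runs in this article.
    print(steps_per_epoch(50000, 512))  # ImageNet validation
    print(steps_per_epoch(50000, 256))  # Cifar100 training
    print(steps_per_epoch(10000, 256))  # Cifar100 test
```

With the sample counts and batch sizes used above, the step counts agree with the `98/98`, `196/196` and `40/40` progress lines in the logs.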
