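DeepMind's MuZero algorithm rose to prominence after AlphaFold. It needs no human knowledge or game rules, and learns to play under unknown conditions by analyzing its environment. This minimal implementation consists of three models trained with reinforcement learning. Tested on CartPole-v0, the model scores 200 in all 100 test episodes after 2,000 training episodes, which suggests potential beyond its predecessors. The plan is to reproduce it in more environments.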


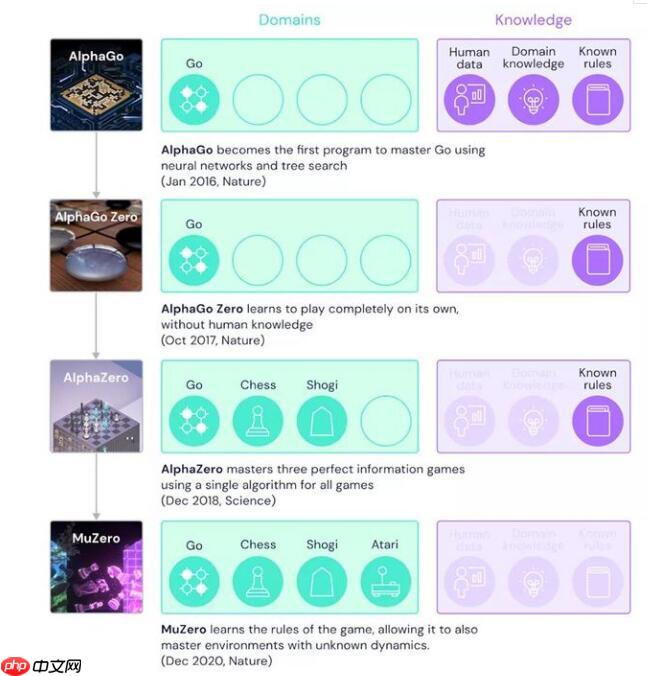

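Following the surge of interest in AlphaFold, another DeepMind algorithm has taken off. On December 23, DeepMind published the blog post "MuZero: Mastering Go, chess, shogi and Atari without rules" on its website, describing in detail the AI algorithm named MuZero.

This project is a minimal implementation of MuZero. It does not use MCTS, and the model is built from Representation_model, Dynamics_Model, and Prediction_Model: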
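Read against the agent's forward pass in the listing, the three models compose in a single step as sketched here. The symbols $h$, $g$, $f$, $o$ and $a$ are notation assumed for illustration (following common MuZero naming), not identifiers from the code: $h$ stands for Representation_model, $g$ for Dynamics_Model, $f$ for Prediction_Model, $o$ for the observation and $a$ for the one-hot action.

```latex
s_0 = h(o), \qquad (s_1, r_1) = g(s_0, a), \qquad (v, p) = f(s_1)
```

Here $r_1$ is the predicted reward, $v$ the predicted value and $p$ the predicted policy. Because there is no MCTS, the dynamics model is only unrolled for this one step.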

    import gym
    import numpy as np
    import paddle
    import paddle.nn as nn
    import paddle.optimizer as optim
    import paddle.nn.functional as F
    import copy
    import random
    from tqdm import tqdm
    from collections import deque

    env = gym.make('CartPole-v0')

    hidden_dims = 128
    o_dim = env.observation_space.shape[0]
    act_dim = env.action_space.n

    # Representation model: observation -> hidden state
    Representation_model = paddle.nn.Sequential(
        paddle.nn.Linear(o_dim, 128),
        paddle.nn.ELU(),
        paddle.nn.Linear(128, hidden_dims),
    )
    # paddle.summary(Representation_model, (50, 4))


    class Dynamics_Model(paddle.nn.Layer):
        # action encoding - one hot
        def __init__(self, num_hidden, num_actions):
            super().__init__()
            self.num_hidden = num_hidden
            self.num_actions = num_actions
            network = [
                nn.Linear(self.num_hidden + self.num_actions, self.num_hidden),
                nn.ELU(),
                nn.Linear(self.num_hidden, 128),
            ]
            self.network = nn.Sequential(*network)
            self.hs = nn.Linear(128, self.num_hidden)  # next hidden state head
            self.r = nn.Linear(128, 1)                 # reward head

        def forward(self, hs, a):
            # Concatenate the hidden state with the one-hot action
            out = paddle.concat(x=[hs, a], axis=-1)
            out = self.network(out)
            hidden = self.hs(out)
            reward = self.r(out)
            return hidden, reward

    # D = Dynamics_Model(hidden_dims, act_dim)
    # paddle.summary(D, [(2, hidden_dims), (2, 2)])


    class Prediction_Model(paddle.nn.Layer):
        def __init__(self, num_hidden, num_actions):
            super().__init__()
            self.num_actions = num_actions
            self.num_hidden = num_hidden
            network = [
                nn.Linear(num_hidden, 128),
                nn.ELU(),
                nn.Linear(128, 128),
                nn.ELU(),
            ]
            self.network = nn.Sequential(*network)
            self.pi = nn.Linear(128, self.num_actions)  # policy head
            self.soft = nn.Softmax()
            self.v = nn.Linear(128, 1)                  # value head

        def forward(self, x):
            out = self.network(x)
            p = self.pi(out)
            p = self.soft(p)
            v = self.v(out)
            return v, p
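The first layer of Dynamics_Model takes `num_hidden + num_actions` inputs because the hidden state is concatenated with a one-hot action. The following is a minimal pure-Python sketch of that encoding, with plain lists standing in for tensors; `one_hot` and `dynamics_input` are hypothetical helper names used only for illustration and are not part of the project.

```python
def one_hot(action, num_actions):
    """Return a one-hot list encoding of an integer action."""
    vec = [0.0] * num_actions
    vec[action] = 1.0
    return vec


def dynamics_input(hidden_state, action, num_actions):
    """Append the one-hot action to the hidden state (concatenation on the last axis)."""
    return list(hidden_state) + one_hot(action, num_actions)


hidden_state = [0.0] * 128  # stand-in for a 128-dimensional hidden state
x = dynamics_input(hidden_state, action=1, num_actions=2)
print(len(x))   # 130 = 128 hidden units + 2 actions
print(x[-2:])   # [0.0, 1.0]
```

With `hidden_dims = 128` and the two CartPole actions, the dynamics network therefore sees 130 input features.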

    # P = Prediction_Model(hidden_dims, act_dim)
    # paddle.summary(P, [(32, hidden_dims)])


    class MuZero_Agent(paddle.nn.Layer):
        def __init__(self, num_hidden, num_actions):
            super().__init__()
            self.num_actions = num_actions
            self.num_hidden = num_hidden
            self.representation_model = Representation_model
            self.dynamics_model = Dynamics_Model(self.num_hidden, self.num_actions)
            self.prediction_model = Prediction_Model(self.num_hidden, self.num_actions)

        def forward(self, s, a):
            s_0 = self.representation_model(s)
            s_1, r_1 = self.dynamics_model(s_0, a)
            value, p = self.prediction_model(s_1)
            return r_1, value, p


    mu = MuZero_Agent(128, 2)
    mu.train()

    buffer = deque(maxlen=500)


    def choose_action(env, evaluate=False):
        values = []
        # mu.eval()
        for a in range(env.action_space.n):
            # Try each action on a copy of the environment and score the result
            e = copy.deepcopy(env)
            o, r, d, _ = e.step(a)
            act = np.zeros(env.action_space.n)
            act[a] = 1
            state = paddle.to_tensor(list(e.state), dtype='float32')
            action = paddle.to_tensor(act, dtype='float32')
            # print(state, action)
            rew, v, pi = mu(state, action)
            v = v.numpy()[0]
            values.append(v)
        # mu.train()

        if evaluate:
            # Greedy: pick the action with the highest predicted value
            return np.argmax(values)

        # Exploration: clip negative values to zero, then sample in proportion
        values = np.clip(np.asarray(values, dtype='float64'), 0, None)
        s = values.sum()
        if s == 0:
            # All values are non-positive: pick a uniformly random action
            return np.random.choice(env.action_space.n)
        return np.random.choice(env.action_space.n, p=values / s)
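The exploration rule in `choose_action` clips negative value estimates to zero and samples an action in proportion to what remains. The same idea can be sketched with only the standard library, as below. `sample_action` and `greedy_action` are hypothetical names for illustration, and `random.choices` stands in for `np.random.choice`.

```python
import random


def sample_action(values, rng=random):
    """Sample an action index with probability proportional to its clipped value."""
    clipped = [max(0.0, float(v)) for v in values]
    total = sum(clipped)
    if total == 0:
        # No action has a positive value: choose uniformly at random
        return rng.randrange(len(values))
    return rng.choices(range(len(values)), weights=clipped, k=1)[0]


def greedy_action(values):
    """Return the index of the largest value (the evaluate=True branch)."""
    return max(range(len(values)), key=lambda i: values[i])


print(sample_action([0.2, -0.1]))  # 0, because action 1 is clipped to weight 0
print(greedy_action([0.1, 0.7]))   # 1
```

Sampling in proportion to value keeps some exploration during training, while the greedy branch is what the test loop uses.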

    gamma = 0.997
    batch_size = 64
    evaluate = False
    scores = []
    avg_scores = []
    epochs = 2_000
    optimizer = paddle.optimizer.Adam(learning_rate=1e-3, parameters=mu.parameters())
    mse_loss = nn.MSELoss()

    for episode in tqdm(range(epochs)):
        obs = env.reset()
        done = False
        score = 0
        while not done:
            a = choose_action(env, evaluate=evaluate)
            a_pi = np.zeros((env.action_space.n))
            a_pi[a] = 1
            obs_, r, done, _ = env.step(a)
            score += r
            # Transition: [observation, value target (filled in later), one-hot action, scaled reward]
            buffer.append([obs, None, a_pi, r / 200])
            obs = obs_
        # print(f'score: {score}')
        scores.append(score)
        if len(scores) >= 100:
            avg_scores.append(np.mean(scores[-100:]))
        else:
            avg_scores.append(np.mean(scores))

        # Fill in value targets for this episode: remaining steps, scaled by 200
        cnt = score
        for i in range(len(buffer)):
            if buffer[i][1] is None:
                buffer[i][1] = cnt / 200
                cnt -= 1
        assert cnt == 0

        if len(buffer) >= batch_size:
            batch = []
            indexes = np.random.choice(len(buffer), batch_size, replace=False)
            for i in range(batch_size):
                batch.append(buffer[indexes[i]])
            states = paddle.to_tensor([transition[0] for transition in batch], dtype='float32')
            values = paddle.to_tensor([transition[1] for transition in batch], dtype='float32')
            values = paddle.reshape(values, [batch_size, -1])
            policies = paddle.to_tensor([transition[2] for transition in batch], dtype='float32')
            rewards = paddle.to_tensor([transition[3] for transition in batch], dtype='float32')
            rewards = paddle.reshape(rewards, [batch_size, -1])
            for _ in range(2):
                # mu.train_on_batch([states, policies], [rewards, values, policies])
                rew, v, pi = mu(states, policies)
                # print("----rew---{}----v---{}----------pi---{}".format(rew, v, pi))
                # print("----rewards---{}----values---{}----------policies---{}".format(rewards, values, policies))
                # Cross-entropy between the taken action and the predicted policy
                policy_loss = -paddle.mean(paddle.sum(policies * paddle.log(pi), axis=1))
                mse1 = mse_loss(rew, rewards)
                mse2 = mse_loss(v, values)
                # print(mse1, mse2, policy_loss)
                loss = paddle.add_n([policy_loss, mse1, mse2])
                # print(loss)
                loss.backward()
                optimizer.step()
                optimizer.clear_grad()

    # Output: 100%|██████████| 2000/2000 [07:18<00:00, 4.56it/s]
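Two details of the training loop are easy to miss. First, CartPole gives a reward of 1 per step, so the value target written back into the buffer is the number of remaining steps divided by 200; `gamma` is defined in the listing but the targets are not discounted. Second, the loss is the sum of a policy cross-entropy and two mean-squared errors. The following pure-Python sketch illustrates both under those assumptions; `value_targets` and `combined_loss` are hypothetical names, and plain lists stand in for tensors.

```python
import math


def value_targets(episode_len, max_steps=200):
    """Scaled remaining-step counts: (T - t) / max_steps for t = 0..T-1."""
    return [(episode_len - t) / max_steps for t in range(episode_len)]


def mse(pred, target):
    """Mean squared error over two equal-length lists of floats."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def combined_loss(pred_reward, pred_value, pred_policy, reward, value, policy):
    """Policy cross-entropy + reward MSE + value MSE, averaged over the batch."""
    cross_entropy = -sum(
        sum(t * math.log(p) for t, p in zip(target_row, pred_row))
        for target_row, pred_row in zip(policy, pred_policy)
    ) / len(policy)
    return cross_entropy + mse(pred_reward, reward) + mse(pred_value, value)


print(value_targets(3))  # [0.015, 0.01, 0.005]

# One sample with perfect reward and value predictions and a uniform policy:
loss = combined_loss([0.005], [0.015], [[0.5, 0.5]], [0.005], [0.015], [[1.0, 0.0]])
print(loss)  # about 0.693, i.e. log(2) from the policy term alone
```

Because the policy target is the one-hot action that was actually taken, the cross-entropy term pushes the predicted policy toward the agent's own sampled behavior.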

    # Save the model
    model_state_dict = mu.state_dict()
    paddle.save(model_state_dict, "mu.pdparams")

    import matplotlib.pyplot as plt

    plt.plot(scores)
    plt.plot(avg_scores)
    plt.xlabel('episode')
    plt.legend(['scores', 'avg scores'])
    plt.title('scores')
    plt.ylim(0, 200)
    plt.show()

[Figure: "scores", showing per-episode scores and avg scores against episode]
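The `avg scores` curve is the mean of the most recent 100 episode scores, or of all scores so far while fewer than 100 episodes have been played. A minimal sketch of that computation on its own, with `running_average` as a hypothetical helper name:

```python
def running_average(scores, window=100):
    """Mean of the last `window` scores at each step (all scores while fewer exist)."""
    averages = []
    for i in range(len(scores)):
        start = max(0, i + 1 - window)
        recent = scores[start:i + 1]
        averages.append(sum(recent) / len(recent))
    return averages


print(running_average([10, 20, 30, 40], window=2))  # [10.0, 15.0, 25.0, 35.0]
```

The smoothed curve makes the learning trend easier to read than the raw per-episode scores, which vary a lot because actions are sampled during training.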

    # Model test: the testing scores are 200 in all 100 test episodes,
    # which shows the model has fully mastered this simple game.

    # Load the model
    # model_state_dict = paddle.load("mu.pdparams")
    # mu.set_state_dict(model_state_dict)
    import matplotlib.pyplot as plt

    tests = 100
    scores = []
    mu.eval()
    for episode in range(tests):
        obs = env.reset()
        done = False
        score = 0
        while not done:
            a = choose_action(env, evaluate=True)
            obs_, r, done, _ = env.step(a)
            score += r
            obs = obs_
        scores.append(score)

    plt.plot(scores)
    plt.title('testing scores')
    plt.show()

[Figure: "testing scores", showing the score of each of the 100 test episodes]

