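This article walks through an implementation of the Actor-Critic method in the CartPole-v0 environment. The method uses two networks, an actor and a critic: the actor outputs action probabilities and the critic estimates the future return. During training, the agent collects data by interacting with the environment, computes returns and advantages, and updates the two networks separately. In the experiment, the trained agent keeps the pole balanced for much longer, which illustrates the strengths of the approach: it combines policy and value-function approximation, supports single-step updates (this script itself updates once per episode), and uses data efficiently.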


This script shows one implementation of the Actor-Critic method in the CartPole-v0 environment.

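As the agent takes actions and moves through the environment, it learns to map the observed state of the environment to two outputs: the probability of each available action, produced by the actor, and an estimate of the future return, produced by the critic.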
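To make the two outputs concrete, here is a minimal sketch in plain Python. It assumes made-up numbers for the actor's raw scores and the critic's estimate, and `softmax` and `sample_action` are illustrative helpers, not part of the script (which relies on Paddle's `Categorical` distribution instead).

```python
import math
import random


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_action(probs, rng):
    """Draw an action index according to the given probabilities."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# Hypothetical actor scores for the two CartPole actions
# (0 = push left, 1 = push right).
logits = [0.2, 1.0]
probs = softmax(logits)

rng = random.Random(0)
action = sample_action(probs, rng)
log_prob = math.log(probs[action])  # used later to weight the policy update

# Hypothetical critic output: one scalar estimate of the future return.
value_estimate = 12.5

print("action probabilities:", probs)
print("sampled action:", action, "log-probability:", log_prob)
```

The actor's output is a distribution to sample from, while the critic's output is a single number used as a baseline during the update.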

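A pole is attached to a cart that moves along a frictionless track. The agent must apply force to move the cart. A reward is given for every step in which the pole stays upright, so the agent must learn to keep the pole from falling over.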
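The reward structure described above can be illustrated with a small stand-in simulator. This is a sketch only: the constants and update equations are assumptions modeled on the classic cart-pole formulation, not code taken from Gym, and `ToyCartPole`, `run_episode`, and both policies are hypothetical names introduced for this example.

```python
import math
import random


class ToyCartPole:
    """Simplified cart-pole simulator (illustrative stand-in, not Gym's code)."""

    GRAVITY = 9.8
    CART_MASS = 1.0
    POLE_MASS = 0.1
    POLE_HALF_LENGTH = 0.5
    FORCE = 10.0
    DT = 0.02
    MAX_ANGLE = 12 * math.pi / 180  # episode ends if the pole tilts past this
    MAX_POSITION = 2.4              # or if the cart leaves the track

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.state = None

    def reset(self):
        # Start near the upright position with small random perturbations.
        self.state = [self.rng.uniform(-0.05, 0.05) for _ in range(4)]
        return list(self.state)

    def step(self, action):
        x, x_dot, theta, theta_dot = self.state
        force = self.FORCE if action == 1 else -self.FORCE
        total_mass = self.CART_MASS + self.POLE_MASS
        pole_ml = self.POLE_MASS * self.POLE_HALF_LENGTH
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        temp = (force + pole_ml * theta_dot ** 2 * sin_t) / total_mass
        theta_acc = (self.GRAVITY * sin_t - cos_t * temp) / (
            self.POLE_HALF_LENGTH
            * (4.0 / 3.0 - self.POLE_MASS * cos_t ** 2 / total_mass)
        )
        x_acc = temp - pole_ml * theta_acc * cos_t / total_mass
        # Explicit Euler integration over one time step.
        x += self.DT * x_dot
        x_dot += self.DT * x_acc
        theta += self.DT * theta_dot
        theta_dot += self.DT * theta_acc
        self.state = [x, x_dot, theta, theta_dot]
        done = abs(x) > self.MAX_POSITION or abs(theta) > self.MAX_ANGLE
        reward = 1.0  # one point for every step taken
        return list(self.state), reward, done


def run_episode(env, policy, max_steps=200):
    """Run one episode and return the total reward collected."""
    state = env.reset()
    total = 0.0
    for _ in range(max_steps):
        state, reward, done = env.step(policy(state))
        total += reward
        if done:
            break
    return total


def always_right(state):
    """Ignore the state and always push the cart to the right."""
    return 1


def lean_following(state):
    """Hand-written heuristic: push toward the side the pole is falling to."""
    return 1 if state[2] + 0.5 * state[3] > 0 else 0


env = ToyCartPole(seed=0)
score_right = run_episode(env, always_right)
score_lean = run_episode(env, lean_following)
print("always push right:", score_right)
print("follow the lean:  ", score_lean)
```

Because each step is worth one point, the total reward equals the number of steps survived, so a policy that reacts to the pole's lean collects more reward than one that ignores the state. The actor-critic agent has to learn such a reaction from the reward signal alone.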

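This tutorial is written for Paddle 2.0. If your environment uses a different version, please install Paddle 2.0 first by following the official website.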

    import os
    from itertools import count

    import gym
    import paddle
    import paddle.nn as nn
    import paddle.nn.functional as F
    import paddle.optimizer as optim
    from paddle.distribution import Categorical

    device = paddle.get_device()

    # Alternatively: env = gym.make("CartPole-v0").unwrapped
    # to train on the unwrapped environment (no episode step limit).
    env = gym.make("CartPole-v0")
    state_size = env.observation_space.shape[0]
    action_size = env.action_space.n
    lr = 0.001


    # Define the "actor" network: maps a state to a distribution over actions.
    class Actor(nn.Layer):
        def __init__(self, state_size, action_size):
            super(Actor, self).__init__()
            self.state_size = state_size
            self.action_size = action_size
            self.linear1 = nn.Linear(self.state_size, 128)
            self.linear2 = nn.Linear(128, 256)
            self.linear3 = nn.Linear(256, self.action_size)

        def forward(self, state):
            output = F.relu(self.linear1(state))
            output = F.relu(self.linear2(output))
            output = self.linear3(output)
            distribution = Categorical(F.softmax(output, axis=-1))
            return distribution


    # Define the "critic" network: maps a state to a scalar value estimate.
    class Critic(nn.Layer):
        def __init__(self, state_size, action_size):
            super(Critic, self).__init__()
            self.state_size = state_size
            self.action_size = action_size
            self.linear1 = nn.Linear(self.state_size, 128)
            self.linear2 = nn.Linear(128, 256)
            self.linear3 = nn.Linear(256, 1)

        def forward(self, state):
            output = F.relu(self.linear1(state))
            output = F.relu(self.linear2(output))
            value = self.linear3(output)
            return value


    def compute_returns(next_value, rewards, masks, gamma=0.99):
        # Walk the episode backwards; a mask of 0 stops bootstrapping at a terminal step.
        R = next_value
        returns = []
        for step in reversed(range(len(rewards))):
            R = rewards[step] + gamma * R * masks[step]
            returns.insert(0, R)
        return returns


    # Define the training procedure
    def trainIters(actor, critic, n_iters):
        optimizerA = optim.Adam(learning_rate=lr, parameters=actor.parameters())
        optimizerC = optim.Adam(learning_rate=lr, parameters=critic.parameters())
        for iteration in range(n_iters):
            # Classic Gym API: reset() returns the observation and step() returns 4 values.
            # The original script called env.reset() a second time here, which left the
            # stored state out of sync with the environment; a single reset is enough.
            state = env.reset()
            log_probs = []
            values = []
            rewards = []
            masks = []
            entropy = 0

            for i in count():
                # env.render()
                state = paddle.to_tensor(state, dtype="float32", place=device)
                dist, value = actor(state), critic(state)

                action = dist.sample([1])
                next_state, reward, done, _ = env.step(action.cpu().squeeze(0).numpy())

                log_prob = dist.log_prob(action)
                entropy += dist.entropy().mean()  # accumulated but not used in the losses

                log_probs.append(log_prob)
                values.append(value)
                rewards.append(paddle.to_tensor([reward], dtype="float32", place=device))
                masks.append(paddle.to_tensor([1 - done], dtype="float32", place=device))

                state = next_state

                if done:
                    if iteration % 10 == 0:
                        print('Iteration: {}, Score: {}'.format(iteration, i))
                    break

            next_state = paddle.to_tensor(next_state, dtype="float32", place=device)
            next_value = critic(next_state)
            returns = compute_returns(next_value, rewards, masks)

            log_probs = paddle.concat(log_probs)
            returns = paddle.concat(returns).detach()
            values = paddle.concat(values)

            advantage = returns - values

            # The actor treats the advantage as a constant; the critic minimizes its square.
            actor_loss = -(log_probs * advantage.detach()).mean()
            critic_loss = advantage.pow(2).mean()

            optimizerA.clear_grad()
            optimizerC.clear_grad()
            actor_loss.backward()
            critic_loss.backward()
            optimizerA.step()
            optimizerC.step()

        paddle.save(actor.state_dict(), 'model/actor.pdparams')
        paddle.save(critic.state_dict(), 'model/critic.pdparams')
        env.close()


    if __name__ == '__main__':
        # Resume from saved parameters when they exist; otherwise start from scratch.
        if os.path.exists('model/actor.pdparams'):
            actor = Actor(state_size, action_size)
            model_state_dict = paddle.load('model/actor.pdparams')
            actor.set_state_dict(model_state_dict)
            print('Actor Model loaded')
        else:
            actor = Actor(state_size, action_size)

        if os.path.exists('model/critic.pdparams'):
            critic = Critic(state_size, action_size)
            model_state_dict = paddle.load('model/critic.pdparams')
            critic.set_state_dict(model_state_dict)
            print('Critic Model loaded')
        else:
            critic = Critic(state_size, action_size)
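The arithmetic of the update step in `trainIters` can be traced by hand on a tiny episode. The sketch below reuses the logic of `compute_returns` with plain Python floats instead of Paddle tensors; the rewards, critic values, and log-probabilities are made-up numbers chosen only for illustration.

```python
def compute_returns(next_value, rewards, masks, gamma=0.99):
    """Discounted returns, computed backwards through the episode."""
    R = next_value
    returns = []
    for step in reversed(range(len(rewards))):
        # A mask of 0 (terminal step) drops the bootstrapped value.
        R = rewards[step] + gamma * R * masks[step]
        returns.insert(0, R)
    return returns


# Hypothetical 3-step episode that terminates on the last step.
rewards = [1.0, 1.0, 1.0]
masks = [1.0, 1.0, 0.0]         # 1 - done for each step
values = [2.5, 2.0, 1.5]        # made-up critic estimates V(s_t)
log_probs = [-0.7, -0.6, -0.8]  # made-up log pi(a_t | s_t)
next_value = 5.0                # ignored here because the final mask is 0

returns = compute_returns(next_value, rewards, masks)
advantages = [r - v for r, v in zip(returns, values)]

# Actor loss: advantages act as fixed weights (the script detaches them).
actor_loss = -sum(lp * a for lp, a in zip(log_probs, advantages)) / len(advantages)
# Critic loss: mean squared difference between return and value estimate.
critic_loss = sum(a * a for a in advantages) / len(advantages)

# For comparison: a non-terminal final step keeps the bootstrapped value.
bootstrapped = compute_returns(10.0, [1.0, 1.0], [1.0, 1.0])

print("returns:", returns)
print("advantages:", advantages)
print("actor loss:", actor_loss, "critic loss:", critic_loss)
print("bootstrapped returns:", bootstrapped)
```

With these numbers the returns come out as approximately 2.9701, 1.99, and 1.0. A positive advantage means the action turned out better than the critic expected, so minimizing the actor loss raises that action's log-probability.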

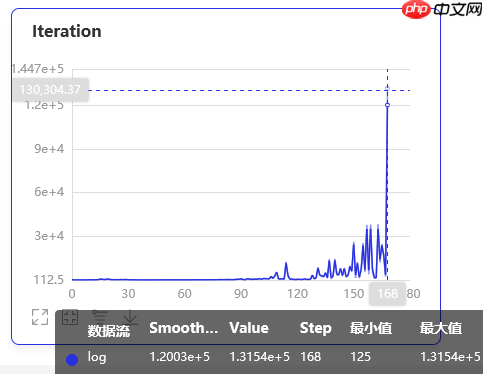

    trainIters(actor, critic, n_iters=201)

    Iteration: 0, Score: 19
    Iteration: 10, Score: 59
    Iteration: 20, Score: 24
    Iteration: 30, Score: 33
    Iteration: 40, Score: 39
    Iteration: 50, Score: 62
    Iteration: 60, Score: 44
    Iteration: 70, Score: 59
    Iteration: 80, Score: 21
    Iteration: 90, Score: 85
    Iteration: 100, Score: 152
    Iteration: 110, Score: 103
    Iteration: 120, Score: 69
    Iteration: 130, Score: 170
    Iteration: 140, Score: 199
    Iteration: 150, Score: 197
    Iteration: 160, Score: 199
    Iteration: 170, Score: 199
    Iteration: 180, Score: 163
    Iteration: 190, Score: 199
    Iteration: 200, Score: 199
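A quick way to read the trend in the log above is to compare the first and last few logged scores. The snippet below is a small sketch that copies the scores from that log by hand; `mean` is a helper defined here for the example.

```python
# Scores copied from the training log above (every 10th iteration, 0 to 200).
scores = [19, 59, 24, 33, 39, 62, 44, 59, 21, 85, 152,
          103, 69, 170, 199, 197, 199, 199, 163, 199, 199]


def mean(xs):
    """Arithmetic mean of a non-empty list."""
    return sum(xs) / len(xs)


early = mean(scores[:5])   # iterations 0 to 40
late = mean(scores[-5:])   # iterations 160 to 200
print(f"mean of first 5 logged scores: {early:.1f}")
print(f"mean of last 5 logged scores:  {late:.1f}")
```

The logged score is the zero-based index of the final step, so 199 corresponds to an episode that ran for 200 steps, which is the step limit of CartPole-v0.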

    !python train.py

    W0303 17:18:01.972481 3940 device_context.cc:362] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1
    W0303 17:18:01.977756 3940 device_context.cc:372] device: 0, cuDNN Version: 7.6.
    Actor Model loaded
    Critic Model loaded
    Iteration: 0, Score: 325

Early in training

Late in training
