该方案针对中文新闻标题14分类任务,基于PaddleNLP,采用RoBERTa等预训练模型微调。分析THUCNews数据集,用AEDA、EDA做数据增强,处理数据后构建模型,以动态学习率和AdamW优化,经训练评估,最终对测试集预测生成结果。

☞☞☞AI 智能聊天, 问答助手, AI 智能搜索, 免费无限量使用 DeepSeek R1 模型☜☜☜

常规赛:中文新闻文本标题分类Baseline(PaddleNLP)

- 因为模型大小超过1G,没法作为公开项目保存,所以没有保存checkpoint

- 主要参考baseline:** https://aistudio.baidu.com/aistudio/projectdetail/2345384

一.方案介绍

1.1 赛题简介:

文本分类是借助计算机对文本集(或其他实体或物件)按照一定的分类体系或标准进行自动分类标记。本次比赛为新闻标题文本分类 ,选手需要根据提供的新闻标题文本和类别标签训练一个新闻分类模型,然后对测试集的新闻标题文本进行分类,评价指标上使用Accuracy = 分类正确数量 / 需要分类总数量。同时本次参赛选手需使用飞桨框架和飞桨文本领域核心开发库PaddleNLP,PaddleNLP具备简洁易用的文本领域全流程API、多场景的应用示例、非常丰富的预训练模型,深度适配飞桨框架2.x版本。

比赛传送门:常规赛:中文新闻文本标题分类

1.2 数据介绍:

THUCNews是根据新浪新闻RSS订阅频道2005~2011年间的历史数据筛选过滤生成,包含74万篇新闻文档(2.19 GB),均为UTF-8纯文本格式。本次比赛数据集在原始新浪新闻分类体系的基础上,重新整合划分出14个候选分类类别:财经、cai票、房产、股票、家居、教育、科技、社会、时尚、时政、体育、星座、游戏、娱乐。提供训练数据共832471条。

比赛提供数据集的格式:训练集和验证集格式:原文标题+\t+标签,测试集格式:原文标题。

1.3 Baseline思路:

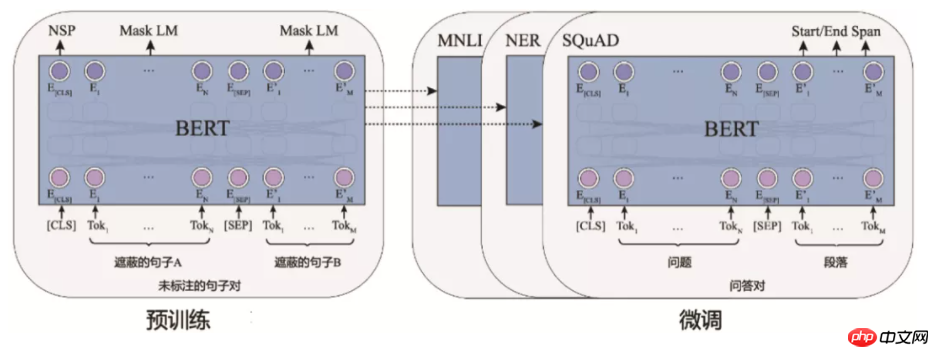

赛题为一道较常规的短文本多分类任务,本项目主要基于PaddleNLP通过预训练模型Robert在提供的训练数据上进行微调完成新闻14分类模型的训练与优化,最后利用训练好的模型对测试数据进行预测并生成提交结果文件。

注意本项目运行需要选择至尊版的GPU环境!若显存不足注意适当改小下batchsize!

BERT前置知识补充:【原理】经典的预训练模型-BERT

二.数据读取与分析

# 进入比赛数据集存放目录%cd /home/aistudio/data/data103654/

/home/aistudio/data/data103654

# 使用pandas读取数据集import pandas as pd

train = pd.read_table('train.txt', sep='\t',header=None) # 训练集dev = pd.read_table('dev.txt', sep='\t',header=None) # 验证集test = pd.read_table('test.txt', sep='\t',header=None) # 测试集train.columns = ["content",'label']

dev.columns = ["content",'label']

test.columns = ["content"]print(len(train))

752471

# 拼接训练和验证集,便于统计分析total = pd.concat([train,dev],axis=0)

# 总类别标签分布统计total['label'].value_counts()

科技 162245 股票 153949 体育 130982 娱乐 92228 时政 62867 社会 50541 教育 41680 财经 36963 家居 32363 游戏 24283 房产 19922 时尚 13335 cai票 7598 星座 3515 Name: label, dtype: int64

# 文本长度统计分析,通过分析可以看出文本较短,最长为48# total['text_a'].map(len).describe()total['content'].map(lambda x : len(x)).describe()# 这样得到最长为13

count 832471.000000 mean 19.388112 std 4.097139 min 2.000000 25% 17.000000 50% 20.000000 75% 23.000000 max 48.000000 Name: content, dtype: float64

# 对测试集的长度统计分析,可以看出在长度上分布与训练数据相近test['content'].map(len).describe()

count 83599.000000 mean 19.815022 std 3.883845 min 3.000000 25% 17.000000 50% 20.000000 75% 23.000000 max 84.000000 Name: content, dtype: float64

# import random# random.seed(0)# PUNCTUATIONS = ['.', ',', '!', '?', ';', ':']# NUM_AUGS = [1]# PUNC_RATIO = 0.3# # 由于数据集存放时无列名,因此手动添加列名便于对数据进行更好处理# train.columns = ["content",'label']# dev.columns = ["content",'label']# dev.to_csv('./dev.csv', sep='\t', index=False) # 保存验证集,格式为text_a,label,以\t分隔开# # 先暂存一个csv文件# train.to_csv("./edaData.csv", sep='\t', index=False)# # Insert punction words into a given sentence with the given ratio "punc_ratio"# def insert_punctuation_marks(sentence, punc_ratio=PUNC_RATIO):

# words = sentence.split(' ')# new_line = []# q = random.randint(1, int(punc_ratio * len(words) + 1))# qs = random.sample(range(0, len(words)), q)# for j, word in enumerate(words):# if j in qs:

# new_line.append(PUNCTUATIONS[random.randint(0, len(PUNCTUATIONS)-1)])# new_line.append(word)# else:# new_line.append(word)

# new_line = ' '.join(new_line)# return new_line# for aug in NUM_AUGS:# exContent = []# exLabel = []# train_df = pd.read_csv('./edaData.csv',sep='\t')

# for index,row in train_df.iterrows():# sentence_aug = insert_punctuation_marks(row['content'])# exContent.append(sentence_aug)# exLabel.append(row['label'])

# tmpDf = pd.DataFrame({# 'content': exContent,# 'label': exLabel# })# train_df = train_df.append(tmpDf)# print(len(train_df))# train_df.to_csv("./edaData.csv", sep='\t',index=False)

EDA

主要使用nlpcda包,做同义词替换和邻近词交换

!pip install nlpcda

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple Requirement already satisfied: nlpcda in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (2.5.8) Requirement already satisfied: requests in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from nlpcda) (2.24.0) Requirement already satisfied: jieba in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from nlpcda) (0.42.1) Requirement already satisfied: urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->nlpcda) (1.25.6) Requirement already satisfied: chardet<4,>=3.0.2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->nlpcda) (3.0.4) Requirement already satisfied: certifi>=2017.4.17 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->nlpcda) (2019.9.11) Requirement already satisfied: idna<3,>=2.5 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->nlpcda) (2.8)

# 添加列名便于对数据进行更好处理# dev.to_csv('./dev.csv', sep='\t', index=False) # 保存验证集,格式为text_a,label,以\t分隔开# # 先暂存一个csv文件# train.to_csv("./edaData.csv", sep='\t', index=False)# from nlpcda import Similarword# import random# import time# ######################################################################### # 同义词替换# ######################################################################### def synonym_replacement(sentences):# smw = Similarword(create_num=3,change_rate=0.3)# random.seed(int(round(time.time()*1000)))# rs = smw.replace(sentences)# pos = random.randint(0, len(rs)-1)# return rs[pos]# from nlpcda import CharPositionExchange# ######################################################################### # 随机交换# # 随机交换几次# ######################################################################### def random_swap(sentences):# smw = CharPositionExchange(create_num=3,change_rate=0.3,char_gram=3)# random.seed(int(round(time.time() * 1000)))# rs = smw.replace(sentences)# pos = random.randint(0,len(rs)-1)# return rs[pos]# def getEda(df, func):# exContent = []# exLabel = []# for index,row in df.iterrows():# content = func(row['content']) # 这里可以换随机插入,同义词替换,随机删除,随机交换的函数# exContent.append(content)# exLabel.append(row['label'])# return exContent, exLabel# train = pd.read_csv('./edaData.csv',sep='\t')# edaData = train# # # 划分数据# eda1 = edaData[0:int(len(train)/2)]# eda2 = edaData[int(len(train)/2):len(train)]# # 同义词替换# exContent, exLabel = getEda(eda1, synonym_replacement)# tmpDf = pd.DataFrame({# 'content': exContent,# 'label': exLabel# })# edaData = edaData.append(tmpDf)# # 随机插入同义词# exContent, exLabel = getEda(eda2, random_swap)# tmpDf = pd.DataFrame({# 'content': exContent,# 'label': exLabel# })# edaData = edaData.append(tmpDf)# print(len(edaData))# edaData.to_csv("./edaData.csv", sep='\t', index=False)

三.基于PaddleNLP构建基线模型

3.1 前置环境准备

# 导入所需的第三方库import mathimport numpy as npimport osimport collectionsfrom functools import partialimport randomimport timeimport inspectimport importlibfrom tqdm import tqdmimport paddleimport paddle.nn as nnimport paddle.nn.functional as Ffrom paddle.io import IterableDatasetfrom paddle.utils.download import get_path_from_url

# 下载最新版本的paddlenlp!pip install --upgrade paddlenlp

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple Requirement already up-to-date: paddlenlp in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (2.1.1) Requirement already satisfied, skipping upgrade: seqeval in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (1.2.2) Requirement already satisfied, skipping upgrade: h5py in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (2.9.0) Requirement already satisfied, skipping upgrade: multiprocess in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (0.70.11.1) Requirement already satisfied, skipping upgrade: jieba in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (0.42.1) Requirement already satisfied, skipping upgrade: paddlefsl==1.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (1.0.0) Requirement already satisfied, skipping upgrade: colorlog in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (4.1.0) Requirement already satisfied, skipping upgrade: colorama in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (0.4.4) Requirement already satisfied, skipping upgrade: scikit-learn>=0.21.3 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from seqeval->paddlenlp) (0.24.2) Requirement already satisfied, skipping upgrade: numpy>=1.14.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from seqeval->paddlenlp) (1.19.5) Requirement already satisfied, skipping upgrade: six in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from h5py->paddlenlp) (1.15.0) Requirement already satisfied, skipping upgrade: dill>=0.3.3 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from multiprocess->paddlenlp) (0.3.3) Requirement already satisfied, skipping upgrade: tqdm~=4.27.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlefsl==1.0.0->paddlenlp) (4.27.0) Requirement already satisfied, skipping upgrade: requests~=2.24.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlefsl==1.0.0->paddlenlp) (2.24.0) Requirement already satisfied, skipping upgrade: pillow==8.2.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlefsl==1.0.0->paddlenlp) (8.2.0) Requirement already satisfied, skipping upgrade: threadpoolctl>=2.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from scikit-learn>=0.21.3->seqeval->paddlenlp) (2.1.0) Requirement already satisfied, skipping upgrade: joblib>=0.11 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from scikit-learn>=0.21.3->seqeval->paddlenlp) (0.14.1) Requirement already satisfied, skipping upgrade: scipy>=0.19.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from scikit-learn>=0.21.3->seqeval->paddlenlp) (1.6.3) Requirement already satisfied, skipping upgrade: certifi>=2017.4.17 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests~=2.24.0->paddlefsl==1.0.0->paddlenlp) (2019.9.11) Requirement already satisfied, skipping upgrade: idna<3,>=2.5 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests~=2.24.0->paddlefsl==1.0.0->paddlenlp) (2.8) Requirement already satisfied, skipping upgrade: chardet<4,>=3.0.2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests~=2.24.0->paddlefsl==1.0.0->paddlenlp) (3.0.4) Requirement already satisfied, skipping upgrade: urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests~=2.24.0->paddlefsl==1.0.0->paddlenlp) (1.25.6)

# 导入paddlenlp所需的相关包import paddlenlp as ppnlpfrom paddlenlp.data import JiebaTokenizer, Pad, Stack, Tuple, Vocabfrom paddlenlp.datasets import MapDatasetfrom paddle.dataset.common import md5filefrom paddlenlp.datasets import DatasetBuilder

3.2 定义要进行微调的预训练模型

# 此次使用在中文领域效果较优的roberta-wwm-ext-large模型MODEL_NAME = "roberta-wwm-ext-large"# # 只需指定想要使用的模型名称和文本分类的类别数即可完成Fine-tune网络定义,通过在预训练模型后拼接上一个全连接网络(Full Connected)进行分类model = ppnlp.transformers.RobertaForSequenceClassification.from_pretrained(MODEL_NAME, num_classes=14) # 此次分类任务为14分类任务,故num_classes设置为14# # 定义模型对应的tokenizer,tokenizer可以把原始输入文本转化成模型model可接受的输入数据格式。需注意tokenizer类要与选择的模型相对应,具体可以查看PaddleNLP相关文档tokenizer = ppnlp.transformers.RobertaTokenizer.from_pretrained(MODEL_NAME)

[2021-11-03 17:12:12,401] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/roberta-wwm-ext-large/roberta_chn_large.pdparams W1103 17:12:12.404340 377 device_context.cc:404] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1 W1103 17:12:12.409199 377 device_context.cc:422] device: 0, cuDNN Version: 7.6. [2021-11-03 17:12:22,233] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/roberta-wwm-ext-large/vocab.txt

# nezha-large-wwm-chinese模型# 指定模型名称,一键加载模型# model = ppnlp.transformers.NeZhaForSequenceClassification.from_pretrained('nezha-large-wwm-chinese', num_classes=14)# # 同样地,通过指定模型名称一键加载对应的Tokenizer,用于处理文本数据,如切分token,转token_id等# tokenizer = ppnlp.transformers.NeZhaTokenizer.from_pretrained('nezha-large-wwm-chinese')

# skep_ernie_1.0_large_ch模型# 指定模型名称,一键加载模型# model = ppnlp.transformers.SkepForSequenceClassification.from_pretrained(pretrained_model_name_or_path="skep_ernie_1.0_large_ch", num_classes=14)# # 同样地,通过指定模型名称一键加载对应的Tokenizer,用于处理文本数据,如切分token,转token_id等# tokenizer = ppnlp.transformers.SkepTokenizer.from_pretrained(pretrained_model_name_or_path="skep_ernie_1.0_large_ch")

PaddleNLP不仅支持RoBERTa预训练模型,还支持ERNIE、BERT、Electra等预训练模型。具体可以查看:PaddleNLP模型

下表汇总了目前PaddleNLP支持的各类预训练模型。用户可以使用PaddleNLP提供的模型,完成问答、序列分类、token分类等任务。同时还提供了22种预训练的参数权重供用户使用,其中包含了11种中文语言模型的预训练权重。

| Model | Tokenizer | Supported Task | Model Name |

|---|---|---|---|

| BERT | BertTokenizer | BertModel BertForQuestionAnswering BertForSequenceClassification BertForTokenClassification |

bert-base-uncased bert-large-uncased bert-base-multilingual-uncased bert-base-cased bert-base-chinese bert-base-multilingual-cased bert-large-cased bert-wwm-chinese bert-wwm-ext-chinese |

| ERNIE | ErnieTokenizer ErnieTinyTokenizer |

ErnieModel ErnieForQuestionAnswering ErnieForSequenceClassification ErnieForTokenClassification |

ernie-1.0 ernie-tiny ernie-2.0-en ernie-2.0-large-en |

| RoBERTa | RobertaTokenizer | RobertaModel RobertaForQuestionAnswering RobertaForSequenceClassification RobertaForTokenClassification |

roberta-wwm-ext roberta-wwm-ext-large rbt3 rbtl3 |

| ELECTRA | ElectraTokenizer | ElectraModel ElectraForSequenceClassification ElectraForTokenClassification |

electra-small electra-base electra-large chinese-electra-small chinese-electra-base |

注:其中中文的预训练模型有 bert-base-chinese, bert-wwm-chinese, bert-wwm-ext-chinese, ernie-1.0, ernie-tiny, roberta-wwm-ext, roberta-wwm-ext-large, rbt3, rbtl3, chinese-electra-base, chinese-electra-small 等。

label_list = list(train.label.unique())print(label_list)

['科技', '体育', '时政', '股票', '娱乐', '教育', '家居', '财经', '房产', '社会', '游戏', 'cai票', '星座', '时尚', nan]

3.3 数据读取和处理

# 定义数据集对应文件及其文件存储格式class MyDataset(DatasetBuilder):

SPLITS = { 'train': 'train.txt', # 训练集

'dev': 'dev.txt', # 验证集

}

def _get_data(self, mode, **kwargs):

filename = self.SPLITS[mode] return filename def _read(self, filename):

"""读取数据"""

with open(filename, 'r', encoding='utf-8') as f:

head = None

for line in f:

data = line.strip().split("\t") # 以'\t'分隔各列

if not head:

head = data else:

text_a, label = data yield {"content": text_a, "label": label} # 此次设置数据的格式为:content,label,可以根据具体情况进行修改

# 这个会自动转换成数字索引

def get_labels(self):

return label_list # 类别标签

# 定义数据集加载函数def load_dataset(name=None,

data_files=None,

splits=None,

lazy=None,

**kwargs):

reader_cls = MyDataset # 加载定义的数据集格式

if not name:

reader_instance = reader_cls(lazy=lazy, **kwargs) else:

reader_instance = reader_cls(lazy=lazy, name=name, **kwargs)

datasets = reader_instance.read_datasets(data_files=data_files, splits=splits) print(datasets) return datasets

# 加载训练和验证集train_ds, dev_ds = load_dataset(splits=["train", "dev"])

[, ]

# 定义数据加载和处理函数def convert_example(example, tokenizer, max_seq_length=128, is_test=False):

qtconcat = example["content"]

encoded_inputs = tokenizer(text=qtconcat, max_seq_len=max_seq_length) # tokenizer处理为模型可接受的格式

input_ids = encoded_inputs["input_ids"]

token_type_ids = encoded_inputs["token_type_ids"] if not is_test:

label = np.array([example["label"]], dtype="int64") return input_ids, token_type_ids, label else: return input_ids, token_type_ids# 定义数据加载函数dataloaderdef create_dataloader(dataset,

mode='train',

batch_size=1,

batchify_fn=None,

trans_fn=None):

if trans_fn:

dataset = dataset.map(trans_fn)

shuffle = True if mode == 'train' else False

# 训练数据集随机打乱,测试数据集不打乱

if mode == 'train':

batch_sampler = paddle.io.DistributedBatchSampler(

dataset, batch_size=batch_size, shuffle=shuffle) else:

batch_sampler = paddle.io.BatchSampler(

dataset, batch_size=batch_size, shuffle=shuffle) return paddle.io.DataLoader(

dataset=dataset,

batch_sampler=batch_sampler,

collate_fn=batchify_fn,

return_list=True)

# 参数设置:# 批处理大小,显存如若不足的话可以适当改小该值 batch_size = 256# 文本序列最大截断长度,需要根据文本具体长度进行确定,最长不超过512。 通过文本长度分析可以看出文本长度最大为48,故此处设置为48max_seq_length = 48

# 将数据处理成模型可读入的数据格式trans_func = partial(

convert_example,

tokenizer=tokenizer,

max_seq_length=max_seq_length)

batchify_fn = lambda samples, fn=Tuple(

Pad(axis=0, pad_val=tokenizer.pad_token_id), # input_ids

Pad(axis=0, pad_val=tokenizer.pad_token_type_id), # token_type_ids

Stack() # labels): [data for data in fn(samples)]# 训练集迭代器train_data_loader = create_dataloader(

train_ds,

mode='train',

batch_size=batch_size,

batchify_fn=batchify_fn,

trans_fn=trans_func)# 验证集迭代器dev_data_loader = create_dataloader(

dev_ds,

mode='dev',

batch_size=batch_size,

batchify_fn=batchify_fn,

trans_fn=trans_func)

3.4 设置Fine-Tune优化策略,接入评价指标

适用于BERT这类Transformer模型的学习率为warmup的动态学习率。

# 定义超参,loss,优化器等from paddlenlp.transformers import LinearDecayWithWarmup# 定义训练配置参数:# 定义训练过程中的最大学习率learning_rate = 4e-5# 训练轮次epochs = 2# 学习率预热比例warmup_proportion = 0.1# 权重衰减系数,类似模型正则项策略,避免模型过拟合weight_decay = 0.01num_training_steps = len(train_data_loader) * epochs

lr_scheduler = LinearDecayWithWarmup(learning_rate, num_training_steps, warmup_proportion)#想复现下pytorch里面常用到的差分学习率,貌似移植到paddle就有问题def get_parameters(model, model_init_lr, multiplier, classifier_lr):

parameters = []

lr = model_init_lr for layer in range(12, -1, -1): # 遍历模型的每一层

layer_params = { 'params': [p for n, p in model.named_parameters() if f'encoder.layer.{layer}.' in n], 'lr': lr

}

parameters.append(layer_params)

lr *= multiplier # 每一层的学习率*0.95的衰减因子

classifier_params = { 'params': [p for n, p in model.named_parameters() if 'layer_norm' in n or 'linear' in n or 'pooling' in n], 'lr': classifier_lr # 单独针对全连接层

}

parameters.append(classifier_params) return parameters

parameters = get_parameters(model, 2e-5, 0.95, 1e-4)# print(parameters)# AdamW优化器# optimizer = paddle.optimizer.AdamW(# learning_rate=lr_scheduler,# parameters=parameters,# weight_decay=weight_decay,# apply_decay_param_fun=lambda x: x in [# p.name for n, p in model.named_parameters()# if not any(nd in n for nd in ["bias", "norm"])# ])optimizer = paddle.optimizer.AdamW(

learning_rate=lr_scheduler,

parameters=model.parameters(),

weight_decay=weight_decay,

apply_decay_param_fun=lambda x: x in [

p.name for n, p in model.named_parameters() if not any(nd in n for nd in ["bias", "norm"])

])

criterion = paddle.nn.loss.CrossEntropyLoss() # 交叉熵损失函数metric = paddle.metric.Accuracy() # accuracy评价指标

3.5 模型训练与评估

ps:模型训练时,可以通过在终端输入nvidia-smi命令或者通过点击底部‘性能监控’选项查看显存的占用情况,适当调整好batchsize,防止出现显存不足意外暂停的情况。

# 定义模型训练验证评估函数@paddle.no_grad()def evaluate(model, criterion, metric, data_loader):

model.eval()

metric.reset()

losses = [] for batch in data_loader:

input_ids, token_type_ids, labels = batch

logits = model(input_ids, token_type_ids)

loss = criterion(logits, labels)

losses.append(loss.numpy())

correct = metric.compute(logits, labels)

metric.update(correct)

accu = metric.accumulate() print("eval loss: %.5f, accu: %.5f" % (np.mean(losses), accu)) # 输出验证集上评估效果

model.train()

metric.reset() return accu # 返回准确率

# 固定随机种子便于结果的复现seed = 1024random.seed(seed) np.random.seed(seed) paddle.seed(seed)

ps:模型训练时可以通过在终端输入nvidia-smi命令或通过底部右下的性能监控选项查看显存占用情况,显存不足的话要适当调整好batchsize的值。

# 模型训练:import paddle.nn.functional as F

pre_accu=0accu=0global_step = 0for epoch in range(1, epochs + 1): for step, batch in enumerate(train_data_loader, start=1):

input_ids, segment_ids, labels = batch

logits = model(input_ids, segment_ids)

loss = criterion(logits, labels)

probs = F.softmax(logits, axis=1)

correct = metric.compute(probs, labels)

metric.update(correct)

acc = metric.accumulate()

global_step += 1

if global_step % 10 == 0 : print("global step %d, epoch: %d, batch: %d, loss: %.5f, acc: %.5f" % (global_step, epoch, step, loss, acc))

loss.backward()

optimizer.step()

lr_scheduler.step()

optimizer.clear_grad() # 每轮结束对验证集进行评估

accu = evaluate(model, criterion, metric, dev_data_loader) print(accu) if accu > pre_accu: # 保存较上一轮效果更优的模型参数

save_param_path = os.path.join('work', 'nezha-large-wwm-chinese.pdparams') # 保存模型参数

paddle.save(model.state_dict(), save_param_path)

pre_accu=accu

global step 10, epoch: 1, batch: 10, loss: 2.71046, acc: 0.03945 global step 20, epoch: 1, batch: 20, loss: 2.62639, acc: 0.03906 global step 30, epoch: 1, batch: 30, loss: 2.50319, acc: 0.05104 global step 40, epoch: 1, batch: 40, loss: 2.30763, acc: 0.11348 global step 50, epoch: 1, batch: 50, loss: 2.08418, acc: 0.18438 global step 60, epoch: 1, batch: 60, loss: 1.75228, acc: 0.24238 global step 70, epoch: 1, batch: 70, loss: 1.36488, acc: 0.29375 global step 80, epoch: 1, batch: 80, loss: 1.05070, acc: 0.34575 global step 90, epoch: 1, batch: 90, loss: 0.78185, acc: 0.39266 global step 100, epoch: 1, batch: 100, loss: 0.58556, acc: 0.43926 global step 110, epoch: 1, batch: 110, loss: 0.57243, acc: 0.47905 global step 120, epoch: 1, batch: 120, loss: 0.49400, acc: 0.51403 global step 130, epoch: 1, batch: 130, loss: 0.40811, acc: 0.54318 global step 140, epoch: 1, batch: 140, loss: 0.37874, acc: 0.56892 global step 150, epoch: 1, batch: 150, loss: 0.34734, acc: 0.59135 global step 160, epoch: 1, batch: 160, loss: 0.36795, acc: 0.61145 global step 170, epoch: 1, batch: 170, loss: 0.36890, acc: 0.62925 global step 180, epoch: 1, batch: 180, loss: 0.21993, acc: 0.64553 global step 190, epoch: 1, batch: 190, loss: 0.35158, acc: 0.65989 global step 200, epoch: 1, batch: 200, loss: 0.21618, acc: 0.67318 global step 210, epoch: 1, batch: 210, loss: 0.37297, acc: 0.68508 global step 220, epoch: 1, batch: 220, loss: 0.28194, acc: 0.69625 global step 230, epoch: 1, batch: 230, loss: 0.26229, acc: 0.70608

# 加载在验证集上效果最优的一轮的模型参数import osimport paddle

params_path = 'work/nezha-large-wwm-chinese.pdparams'if params_path and os.path.isfile(params_path): # 加载模型参数

state_dict = paddle.load(params_path)

model.set_dict(state_dict) print("Loaded parameters from %s" % params_path)

3.6 模型预测

# 定义模型预测函数def predict(model, data, tokenizer, label_map, batch_size=1):

examples = [] # 将输入数据(list格式)处理为模型可接受的格式

for text in data:

input_ids, segment_ids = convert_example(

text,

tokenizer,

max_seq_length=128,

is_test=True)

examples.append((input_ids, segment_ids))

batchify_fn = lambda samples, fn=Tuple(

Pad(axis=0, pad_val=tokenizer.pad_token_id), # input id

Pad(axis=0, pad_val=tokenizer.pad_token_id), # segment id

): fn(samples) # Seperates data into some batches.

batches = []

one_batch = [] for example in examples:

one_batch.append(example) if len(one_batch) == batch_size:

batches.append(one_batch)

one_batch = [] if one_batch: # The last batch whose size is less than the config batch_size setting.

batches.append(one_batch)

results = []

model.eval() for batch in batches:

input_ids, segment_ids = batchify_fn(batch)

input_ids = paddle.to_tensor(input_ids)

segment_ids = paddle.to_tensor(segment_ids)

logits = model(input_ids, segment_ids)

probs = F.softmax(logits, axis=1)

idx = paddle.argmax(probs, axis=1).numpy()

idx = idx.tolist()

labels = [label_map[i] for i in idx]

results.extend(labels) return results # 返回预测结果

# 定义要进行分类的类别label_list=list(train.label.unique())

label_map = {

idx: label_text for idx, label_text in enumerate(label_list)

}print(label_map)

# 定义对数据的预处理函数,处理为模型输入指定list格式def preprocess_prediction_data(data):

examples = [] for text_a in data:

examples.append({"content": text_a}) return examples

test_data = []with open('./test.txt',encoding='utf-8') as f: for line in f:

test_data.append(line.strip())# 对测试集数据进行格式处理examples = preprocess_prediction_data(test_data)print(len(examples))

!pwd

# 对测试集进行预测results = predict(model, examples, tokenizer, label_map, batch_size=16)

!cd /home/aistudio

# 将list格式的预测结果存储为txt文件,提交格式要求:每行一个类别def write_results(labels, file_path):

with open(file_path, "w", encoding="utf8") as f:

f.writelines("\n".join(labels))

write_results(results, "work/nezha-large-wwm-chinese.txt")

# 移动data目录下提交结果文件至主目录下,便于结果文件的保存# !cp -r /home/aistudio/data/data103654/work/roberta-wwm-ext-large-result.txt /home/aistudio/work/# !cp -r /home/aistudio/data/data103654/work/roberta-wwm-ext-large.pdparams /home/aistudio/work/