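In this article, Lu Ping walks through building a gated recurrent unit (GRU) model with PaddlePaddle 2.0. A GRU uses a reset gate and an update gate to selectively retain information across time steps, and the relevant formulas are given. Using the IMDB movie-review dataset as an example, the article builds a model that predicts the sentiment of a review; after 10 epochs of training, test-set accuracy reaches about 84% to 85%.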


Author: Lu Ping

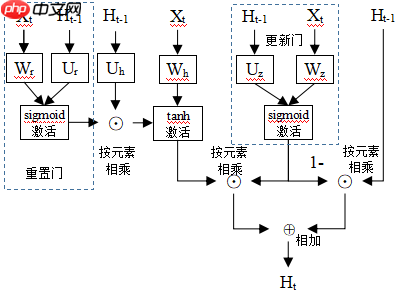

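Compared with the long short-term memory (LSTM) model, the gated recurrent unit (GRU) has a simpler gating mechanism: it uses a reset gate and an update gate to selectively retain information from the sequence.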
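One concrete way to see the "simpler" claim is to count weights. In the bias-free formulas used in this article, a GRU layer has three pairs of input and recurrent weight matrices (reset gate, update gate, new memory), while a standard LSTM layer has four (input, forget and output gates plus the candidate cell). The sketch below counts weights for one layer with `d` inputs and `q` hidden units; the function names are hypothetical, biases are ignored, and real framework layers such as `paddle.nn.GRU` also carry bias vectors, so their totals are somewhat larger.

```python
def gru_weight_count(d: int, q: int) -> int:
    """Weights in one GRU layer, biases ignored: three (d x q) + (q x q) pairs."""
    return 3 * (d * q + q * q)


def lstm_weight_count(d: int, q: int) -> int:
    """Weights in one LSTM layer, biases ignored: four (d x q) + (q x q) pairs."""
    return 4 * (d * q + q * q)


# d = q = 256 mirrors embedding_size and hidden_size used later in the article
d, q = 256, 256
print("GRU :", gru_weight_count(d, q))   # 393216
print("LSTM:", lstm_weight_count(d, q))  # 524288
```

For any layer size the GRU needs exactly three quarters of the LSTM's weights under this counting.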

The overall structure of the GRU model is as follows:

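The reset gate controls how much of the previous time step's output $H_{t-1}$ is carried into the new memory. Consider a minibatch of $n$ samples with $d$ input features and $q$ output features. The input at time step $t$ is $X_t \in \mathbb{R}^{n\times d}$. The batched input is multiplied by the weight $W_r \in \mathbb{R}^{d\times q}$, the product of the previous output $H_{t-1} \in \mathbb{R}^{n\times q}$ and the weight $U_r \in \mathbb{R}^{q\times q}$ is added, and the sum is passed through the sigmoid function, giving $r_t \in \mathbb{R}^{n\times q}$: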

$$r_t = \sigma(X_t W_r + H_{t-1} U_r)$$
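The reset-gate formula can be checked directly in NumPy. This is a minimal sketch with random toy weights (the sizes `n=4`, `d=3`, `q=5` and the helper names are hypothetical); like the formula, it omits bias terms. It confirms that $r_t$ has shape $n\times q$ and that every entry lies strictly between 0 and 1, which is what lets it act as a per-element "how much to keep" factor.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def reset_gate(X_t, H_prev, W_r, U_r):
    """r_t = sigmoid(X_t W_r + H_{t-1} U_r); biases omitted as in the formula."""
    return sigmoid(X_t @ W_r + H_prev @ U_r)


rng = np.random.default_rng(0)
n, d, q = 4, 3, 5  # batch size, input features, output features (toy values)
X_t = rng.standard_normal((n, d))
H_prev = rng.standard_normal((n, q))
W_r = rng.standard_normal((d, q))
U_r = rng.standard_normal((q, q))

r_t = reset_gate(X_t, H_prev, W_r, U_r)
print(r_t.shape)  # (4, 5)
```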

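Multiplying $r_t$ element-wise with $H_{t-1} U_h$ (where $U_h \in \mathbb{R}^{q\times q}$) gives the portion of the previous output that is retained. Multiplying the input $X_t$ by the weight $W_h \in \mathbb{R}^{d\times q}$ gives a linear transformation of the current input. The two are added and passed through tanh, giving $\tilde{H}_t \in \mathbb{R}^{n\times q}$, which represents the new memory: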

$$\tilde{H}_t = \tanh\big(r_t \odot (H_{t-1} U_h) + X_t W_h\big)$$
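To see what the reset gate does to the new memory, the sketch below evaluates the formula with the gate fully closed ($r_t = 0$) and fully open ($r_t = 1$). The random weights and toy sizes are hypothetical, and biases are omitted as in the formula. With $r_t = 0$ the previous output drops out and $\tilde{H}_t$ depends on $X_t$ alone.

```python
import numpy as np


def candidate_memory(X_t, H_prev, r_t, W_h, U_h):
    """H~_t = tanh(r_t * (H_{t-1} U_h) + X_t W_h), with * the element-wise product."""
    return np.tanh(r_t * (H_prev @ U_h) + X_t @ W_h)


rng = np.random.default_rng(1)
n, d, q = 4, 3, 5  # toy sizes
X_t = rng.standard_normal((n, d))
H_prev = rng.standard_normal((n, q))
W_h = rng.standard_normal((d, q))
U_h = rng.standard_normal((q, q))

# Reset gate fully closed: the previous output is ignored
closed = candidate_memory(X_t, H_prev, np.zeros((n, q)), W_h, U_h)
print(np.allclose(closed, np.tanh(X_t @ W_h)))  # True
```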

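The update gate controls how much of the previous output $H_{t-1}$ appears in the GRU's output. The input $X_t \in \mathbb{R}^{n\times d}$ is multiplied by the weight $W_z \in \mathbb{R}^{d\times q}$, the product of $H_{t-1}$ and the weight $U_z \in \mathbb{R}^{q\times q}$ is added, and the sum is passed through the sigmoid function, giving $z_t \in \mathbb{R}^{n\times q}$: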

$$z_t = \sigma(X_t W_z + H_{t-1} U_z)$$

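The unit's output at time step $t$, $H_t \in \mathbb{R}^{n\times q}$, is an element-wise weighted sum of the new memory $\tilde{H}_t$ and the previous output $H_{t-1}$: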

$$H_t = (1 - z_t) \odot H_{t-1} + z_t \odot \tilde{H}_t$$
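Putting the four formulas together, here is a minimal NumPy sketch of one GRU time step and a loop over a sequence. It is an illustration under stated assumptions, not PaddlePaddle's implementation: biases are omitted, weights are random, the initial state $H_0$ is zero, and the names `gru_step` and `gru_forward` are hypothetical. It follows this article's convention where $z_t$ weights the new memory; some frameworks swap the roles of $z_t$ and $1 - z_t$, which is equivalent up to relabeling the gate.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gru_step(X_t, H_prev, p):
    """One GRU time step following the four formulas (no biases)."""
    r_t = sigmoid(X_t @ p["W_r"] + H_prev @ p["U_r"])              # reset gate
    z_t = sigmoid(X_t @ p["W_z"] + H_prev @ p["U_z"])              # update gate
    H_tilde = np.tanh(r_t * (H_prev @ p["U_h"]) + X_t @ p["W_h"])  # new memory
    return (1.0 - z_t) * H_prev + z_t * H_tilde                    # output H_t


def gru_forward(X, p, q):
    """Apply gru_step over X of shape [T, n, d]; return outputs of shape [T, n, q]."""
    T, n, _ = X.shape
    H = np.zeros((n, q))  # H_0 = 0
    outputs = []
    for t in range(T):
        H = gru_step(X[t], H, p)
        outputs.append(H)
    return np.stack(outputs)


rng = np.random.default_rng(42)
T, n, d, q = 6, 2, 3, 4  # toy sizes
params = {name: rng.standard_normal((d, q)) for name in ("W_r", "W_z", "W_h")}
params.update({name: rng.standard_normal((q, q)) for name in ("U_r", "U_z", "U_h")})

X = rng.standard_normal((T, n, d))
H_all = gru_forward(X, params, q)
print(H_all.shape)  # (6, 2, 4)
```

Because each $H_t$ is a convex combination of $H_{t-1}$ and a tanh output, the states stay within $[-1, 1]$ when $H_0 = 0$.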

Next, we use PaddlePaddle 2.0's basic API to build a GRU network and use the Internet Movie Database (IMDB) dataset to predict the sentiment of movie reviews.

```python
import numpy as np
import paddle

# Prepare the data
# Load the IMDB dataset
imdb_train = paddle.text.datasets.Imdb(mode='train')  # training set
imdb_test = paddle.text.datasets.Imdb(mode='test')    # test set

# Get the vocabulary (word -> id)
word_dict = imdb_train.word_idx
# Add a '<pad>' token to the vocabulary
word_dict['<pad>'] = len(word_dict)

vocab_size = len(word_dict)

# Hyperparameters
embedding_size = 256
hidden_size = 256
n_layers = 2
dropout = 0.5
seq_len = 200
batch_size = 64
epochs = 10
pad_id = word_dict['<pad>']


def padding(dataset):
    """Truncate or pad every review to exactly seq_len token ids."""
    padded_sents = []
    labels = []
    for batch_id, data in enumerate(dataset):
        sent, label = data[0].astype('int64'), data[1].astype('int64')
        # Keep the first seq_len ids; right-pad shorter reviews with pad_id
        padded_sent = np.concatenate([sent[:seq_len], [pad_id] * (seq_len - len(sent))]).astype('int64')
        padded_sents.append(padded_sent)
        labels.append(label)
    return np.array(padded_sents), np.array(labels)


train_x, train_y = padding(imdb_train)
test_x, test_y = padding(imdb_test)
```
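To see what `padding()` does without downloading IMDB, the same truncate-or-pad expression can be applied to toy sequences. The helper `pad_or_truncate`, the toy ids, `seq_len=5` and `pad_id=99` are all hypothetical stand-ins for the article's `seq_len=200` and `word_dict['<pad>']`. A short review is right-padded; a long one keeps only its first `seq_len` ids, because `[pad_id] * negative` is an empty list.

```python
import numpy as np


def pad_or_truncate(sent, seq_len, pad_id):
    """Same expression as in padding(): keep the first seq_len ids, right-pad the rest."""
    sent = np.asarray(sent, dtype='int64')
    return np.concatenate([sent[:seq_len], [pad_id] * (seq_len - len(sent))]).astype('int64')


seq_len, pad_id = 5, 99  # toy values
short_review = [3, 7, 1]
long_review = [4, 8, 15, 16, 23, 42, 5]

print(pad_or_truncate(short_review, seq_len, pad_id).tolist())  # [3, 7, 1, 99, 99]
print(pad_or_truncate(long_review, seq_len, pad_id).tolist())   # [4, 8, 15, 16, 23]
```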

```python
class IMDBDataset(paddle.io.Dataset):
    """Wraps the padded id arrays so that DataLoader can batch them."""

    def __init__(self, sents, labels):
        self.sents = sents
        self.labels = labels

    def __getitem__(self, index):
        data = self.sents[index]
        label = self.labels[index]
        return data, label

    def __len__(self):
        return len(self.sents)


train_dataset = IMDBDataset(train_x, train_y)
test_dataset = IMDBDataset(test_x, test_y)

# drop_last=True discards the final partial batch
train_loader = paddle.io.DataLoader(train_dataset, return_list=True, shuffle=True, batch_size=batch_size, drop_last=True)
test_loader = paddle.io.DataLoader(test_dataset, return_list=True, shuffle=True, batch_size=batch_size, drop_last=True)


# Build the model
class GRUModel(paddle.nn.Layer):
    def __init__(self):
        super(GRUModel, self).__init__()
        self.embedding = paddle.nn.Embedding(vocab_size, embedding_size)
        self.gru_layer = paddle.nn.GRU(embedding_size,
                                       hidden_size,
                                       num_layers=n_layers,
                                       direction='bidirectional',
                                       dropout=dropout)
        self.linear = paddle.nn.Linear(in_features=hidden_size * 2, out_features=2)
        self.dropout = paddle.nn.Dropout(dropout)

    def forward(self, text):
        # text: [batch_size, seq_len]
        embedded = self.dropout(self.embedding(text))
        # embedded: [batch_size, seq_len, embedding_size]
        output, hidden = self.gru_layer(embedded)
        # output: [batch_size, seq_len, num_directions * hidden_size]
        # hidden: [num_layers * num_directions, batch_size, hidden_size]
        # Concatenate the last layer's forward and backward final states
        hidden = paddle.concat((hidden[-2, :, :], hidden[-1, :, :]), axis=1)
        hidden = self.dropout(hidden)
        # hidden: [batch_size, hidden_size * num_directions]
        return self.linear(hidden)
```
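The indexing `hidden[-2]` / `hidden[-1]` in `GRUModel.forward` relies on the final-state layout `[num_layers * num_directions, batch_size, hidden_size]`, with the last layer's forward state second to last and its backward state last. The NumPy sketch below fills a dummy array with placeholder values (not real GRU states) under that assumed layer-major, direction-minor ordering, which is worth confirming against the `paddle.nn.GRU` documentation for your Paddle version. It shows that the concatenation yields `[batch_size, 2 * hidden_size]`, matching `in_features=hidden_size * 2` of the linear layer.

```python
import numpy as np

num_layers, num_directions = 2, 2  # n_layers=2, direction='bidirectional'
batch_size, hidden_size = 64, 256

# Placeholder final states; index k = layer * num_directions + direction (assumed ordering)
hidden = np.zeros((num_layers * num_directions, batch_size, hidden_size))
for layer in range(num_layers):
    for direction in range(num_directions):
        hidden[layer * num_directions + direction] = 10 * layer + direction

# Same indexing as GRUModel.forward: last layer, forward (-2) and backward (-1)
merged = np.concatenate((hidden[-2, :, :], hidden[-1, :, :]), axis=1)
print(merged.shape)                 # (64, 512)
print(merged[0, 0], merged[0, -1])  # 10.0 11.0 (layer 1 forward, layer 1 backward)
```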

```python
# PaddlePaddle 2.0 high-level API: the network must be wrapped in paddle.Model
model = paddle.Model(GRUModel())

# Configure the model: optimizer, loss function and metric
model.prepare(paddle.optimizer.Adam(learning_rate=0.001, parameters=model.parameters()),
              paddle.nn.CrossEntropyLoss(),
              paddle.metric.Accuracy())

# Train the model, evaluating on test_loader after every epoch
model.fit(train_loader,
          test_loader,
          epochs=epochs,
          batch_size=batch_size,
          verbose=1)
```

Training output:

```text
The loss value printed in the log is the current step, and the metric is the average value of previous step.
Epoch 1/10
step 390/390 [==============================] - loss: 0.4013 - acc: 0.7027 - 46ms/step
Eval begin...
The loss value printed in the log is the current batch, and the metric is the average value of previous step.
step 390/390 [==============================] - loss: 0.3364 - acc: 0.8394 - 18ms/step
Eval samples: 24960
Epoch 2/10
step 390/390 [==============================] - loss: 0.2342 - acc: 0.8760 - 45ms/step
Eval begin...
step 390/390 [==============================] - loss: 0.3898 - acc: 0.8710 - 18ms/step
Eval samples: 24960
Epoch 3/10
step 390/390 [==============================] - loss: 0.3563 - acc: 0.9151 - 45ms/step
Eval begin...
step 390/390 [==============================] - loss: 0.3252 - acc: 0.8697 - 18ms/step
Eval samples: 24960
Epoch 4/10
step 390/390 [==============================] - loss: 0.2071 - acc: 0.9355 - 45ms/step
Eval begin...
step 390/390 [==============================] - loss: 0.5057 - acc: 0.8571 - 19ms/step
Eval samples: 24960
Epoch 5/10
step 390/390 [==============================] - loss: 0.1606 - acc: 0.9505 - 45ms/step
Eval begin...
step 390/390 [==============================] - loss: 0.4060 - acc: 0.8417 - 19ms/step
Eval samples: 24960
Epoch 6/10
step 390/390 [==============================] - loss: 0.2904 - acc: 0.9646 - 45ms/step
Eval begin...
step 390/390 [==============================] - loss: 0.4060 - acc: 0.8482 - 18ms/step
Eval samples: 24960
Epoch 7/10
step 390/390 [==============================] - loss: 0.1081 - acc: 0.9702 - 45ms/step
Eval begin...
step 390/390 [==============================] - loss: 0.5072 - acc: 0.8516 - 18ms/step
Eval samples: 24960
Epoch 8/10
step 390/390 [==============================] - loss: 0.0677 - acc: 0.9764 - 45ms/step
Eval begin...
step 390/390 [==============================] - loss: 0.3075 - acc: 0.8509 - 18ms/step
Eval samples: 24960
Epoch 9/10
step 390/390 [==============================] - loss: 0.1687 - acc: 0.9797 - 44ms/step
Eval begin...
step 390/390 [==============================] - loss: 0.6582 - acc: 0.8468 - 19ms/step
Eval samples: 24960
Epoch 10/10
step 390/390 [==============================] - loss: 0.0149 - acc: 0.9835 - 45ms/step
Eval begin...
step 390/390 [==============================] - loss: 0.5828 - acc: 0.8450 - 18ms/step
Eval samples: 24960
```

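After 10 epochs of training, the model's accuracy on the test set is roughly 84% to 85% (84.50% after the final epoch). Test accuracy peaks at 87.10% after epoch 2, while training accuracy keeps rising to 98.35%, which indicates that the model starts to overfit after the first few epochs.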
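The per-epoch accuracies in the training log can be summarized with a few lines of Python. The numbers below are copied from the log; the variable names are illustrative. The sketch also accounts for the `Eval samples: 24960` lines: with `drop_last=True` and a batch size of 64, only the 390 full batches of the 25,000 IMDB test reviews are evaluated, so the last 40 reviews are skipped.

```python
# Accuracies copied from the training log (epochs 1-10)
train_acc = [0.7027, 0.8760, 0.9151, 0.9355, 0.9505, 0.9646, 0.9702, 0.9764, 0.9797, 0.9835]
eval_acc = [0.8394, 0.8710, 0.8697, 0.8571, 0.8417, 0.8482, 0.8516, 0.8509, 0.8468, 0.8450]

best_epoch = max(range(len(eval_acc)), key=lambda i: eval_acc[i]) + 1
print("best eval epoch:", best_epoch, "acc:", eval_acc[best_epoch - 1])  # 2, 0.871
print("final train/eval gap: %.4f" % (train_acc[-1] - eval_acc[-1]))     # 0.1385

# drop_last=True keeps only full batches: 25000 // 64 = 390 batches
test_reviews, batch_size = 25000, 64
evaluated = (test_reviews // batch_size) * batch_size
print("evaluated samples:", evaluated)  # 24960
```

Keeping the checkpoint from the best evaluation epoch, or stopping early, would be a natural response to the widening gap; the article itself trains for the full 10 epochs.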
