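This article walks through reproducing DDRNet23-Slim with PaddlePaddle. The network is an efficient real-time semantic segmentation model for road scenes; it uses a dual-resolution architecture and a multi-scale module to fuse high-level and low-level semantic information. The article presents the key structural designs and the development steps based on PaddleSeg; the model performs well.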


Reproducing DDRNet23-Slim with PaddlePaddle

Introduction

Paper link: https://arxiv.org/pdf/2101.06085.pdf

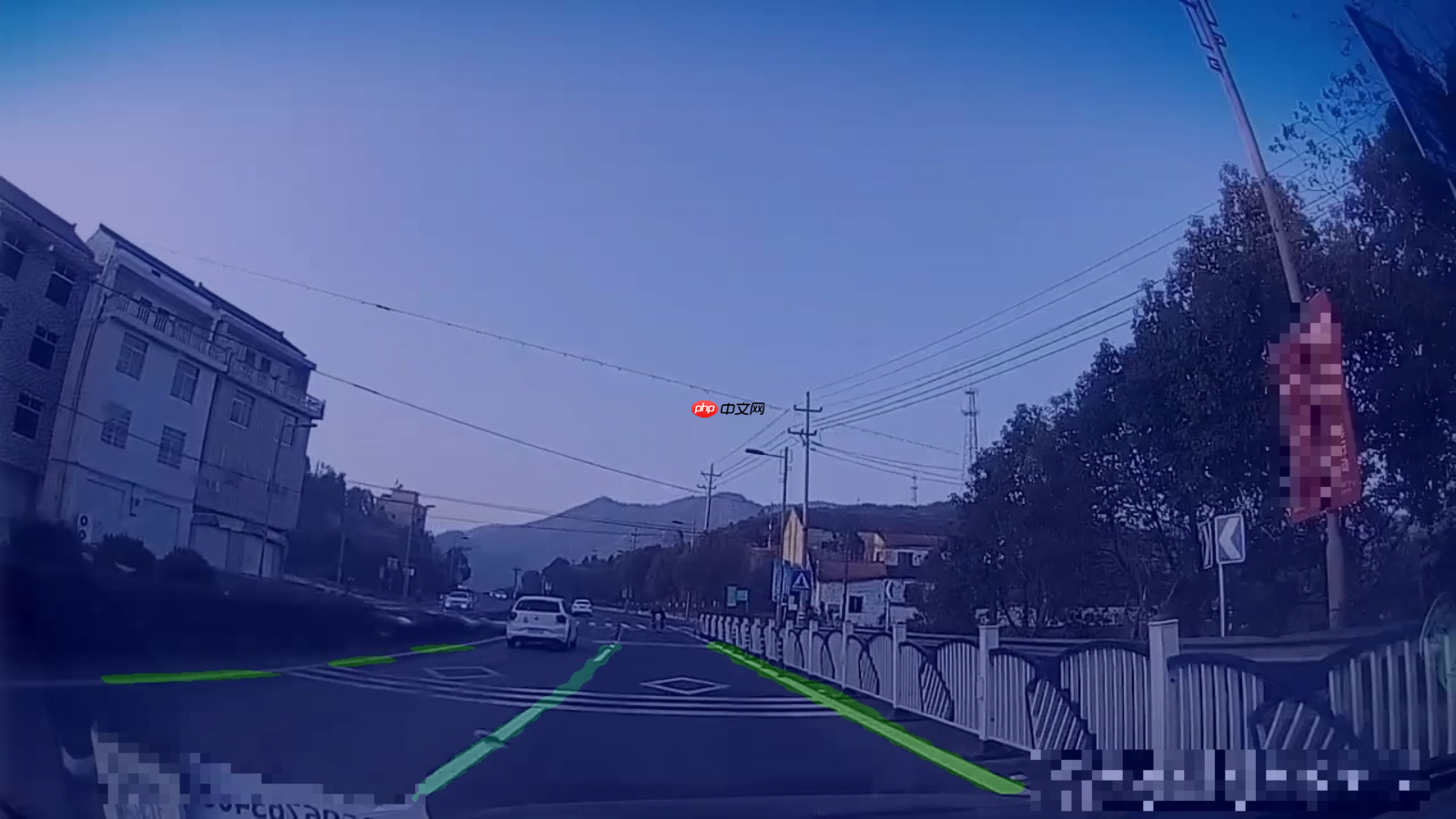

Before we start, here is a figure of the authors' experimental results to set the tone:

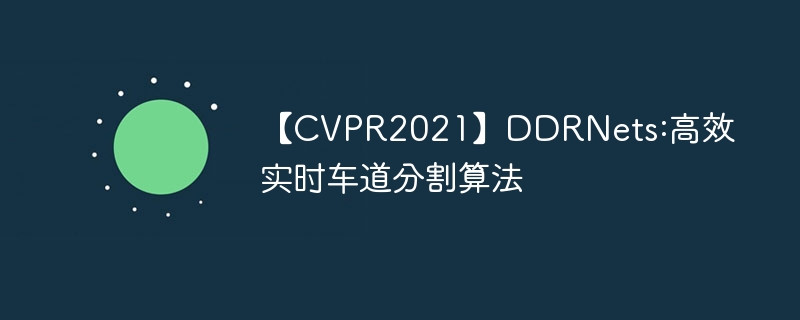

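At the time of writing, this architecture is among the best-performing segmentation models on the Cityscapes dataset for real-time use. Its DDRNet23-Slim variant runs about as fast as BiSeNetV2 yet has a much higher accuracy ceiling. Personally I think this network is basically the imitator overtaking the original (just kidding): its idea is very close to BiSeNetV2, but on top of that similarity it adds genuinely original insights, which is very nice. Let's look at the model structure: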

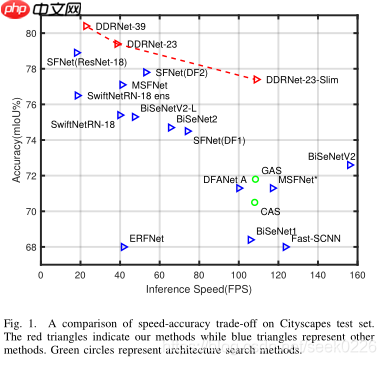

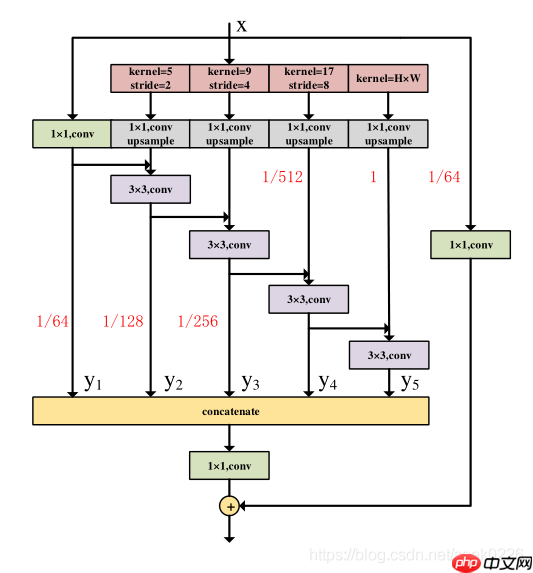

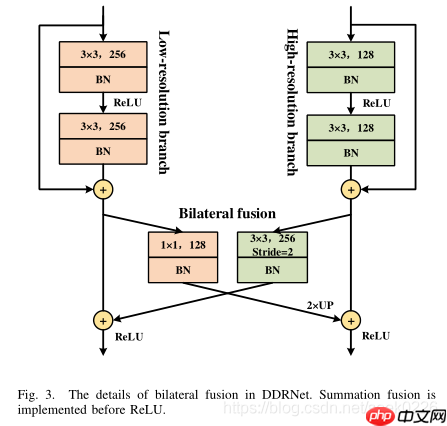

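Compared with BiSeNetV2, isn't it strikingly similar? Both shrink the feature map quickly, and both run a detail branch and a coarse semantic branch in parallel. BiSeNetV2 fuses the two branches only at the end, whereas DDRNet takes the idea further: it fuses at every stage, so the high-level semantic features always retain low-level information (edges, shapes and other shallow cues). Low-level information is gradually lost as the layers deepen, and this repeated fusion keeps the two kinds of information coexisting at all times. BiSeNetV2's booster is carried over as well: the intermediate seghead is enabled during training and dropped at inference (keeping it is fine too; the extra computation is negligible). The pleasant surprise is the DAPPM module. It looks as brute-force and compute-heavy as ASPP, but because the input feature map is already very small by that point, its share of the total computation is almost negligible. I do think the final sum goes against a lightweight-design principle (one of the four guidelines from ShuffleNetV2) by using an element-wise operation, but that does not take away from the model's strength. (Personally I think a concat followed by a conv to extract information would work better here.)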
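To put the "almost negligible" claim into rough numbers, the sketch below counts spatial positions at the two resolutions involved. The 1024x2048 input size is an assumption (a typical full-resolution Cityscapes frame), and `positions` is a hypothetical helper written for this illustration, not part of the model.

```python
def positions(height, width, stride):
    """Number of spatial positions in a feature map downsampled by `stride`."""
    return (height // stride) * (width // stride)

# Assumed input size: a full-resolution Cityscapes frame.
H, W = 1024, 2048

high_res = positions(H, W, 8)    # the high-resolution branch runs at 1/8
dappm_in = positions(H, W, 64)   # DAPPM receives the 1/64 map
ratio = high_res // dappm_in

print(high_res, dappm_in, ratio)
```

Every layer inside DAPPM therefore touches 64 times fewer positions than a layer in the 1/8 branch, which is why stacking several pooling branches there costs so little.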

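The structure of the DAPPM module is given later.

Summary: the paper proposes a new deep dual-resolution architecture for real-time semantic segmentation of road scenes, along with a new module for extracting multi-scale contextual information. To the authors' knowledge, they are the first to introduce deep high-resolution representation into real-time semantic segmentation, and their simple strategy outperforms all previous models on two popular benchmarks without any extra bells and whistles. Most existing real-time networks are either elaborately hand-designed or built on advanced backbones devised specifically for ImageNet, which differ considerably from the dilated backbones widely used in high-accuracy methods. In contrast, DDRNet uses only basic residual blocks and bottleneck blocks, and by scaling the model's width and depth it offers a wider range of speed/accuracy trade-offs. Because the method is simple and efficient, it can be seen as a strong baseline for unifying real-time and high-accuracy semantic segmentation.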

Unzip the dataset & prepare the environment

!unzip data/data125507/car_data_2022.zip

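!!Note!!

For convenience I have bundled a PaddleSeg package with DDRNet built in. One catch: my earlier DDRNet implementation raised errors when exporting a static graph because it conflicted with the relevant Paddle APIs, so I had to hard-code the input size in the network file and work out the size of every block. If you want to use the version that can be exported to a static graph, adjust these sizes yourself: run val.py and change the corresponding size values according to the shapes reported in the error.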
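If you do need hard-coded sizes, the spatial size after each stage can be worked out by hand from the standard convolution output formula. The sketch below assumes the layer layout used in this article's network code (3x3 convolutions with padding 1, and stride 2 at every downsampling step); `conv_out` and `stage_sizes` are hypothetical helpers, not PaddleSeg functions.

```python
def conv_out(size, kernel=3, stride=2, padding=1):
    """Output length of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1

def stage_sizes(size):
    """Spatial size after the stem (two stride-2 convs) and layer2..layer5."""
    sizes = {}
    size = conv_out(conv_out(size))   # stem: two stride-2 convs, 1/4 resolution
    sizes["stem"] = size
    for name in ("layer2", "layer3", "layer4", "layer5"):
        size = conv_out(size)         # each of these stages halves the resolution
        sizes[name] = size
    return sizes

print(stage_sizes(480))
```

For a 480x480 input this gives 120, 60, 30, 15 and 8. Note that the last value is not exactly 480/64, which is the kind of mismatch that surfaces as a shape error when sizes are fixed by hand.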

#%cd work/PaddleSeg_withDDRnets/
#!unzip /home/aistudio/data/data134261/PaddleSeg_with_DDRNets.zip

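Below is what you need to do to add DDRNet yourself: pull a stock PaddleSeg environment and add the model manually (this is for those who do not need static-graph export, since the code can then obtain shapes automatically).

# First download PaddleSeg from Gitee
# This step has already been done in this baseline environment and need not be repeated
# PaddleSeg 2.1 is provided as the baseline; you can also choose PaddleSeg 2.2, 2.3 or the develop branch.
%cd work/
!git clone https://gitee.com/paddlepaddle/PaddleSeg.git
# Check that the download succeeded
!ls /home/aistudio/work
# Install the dependencies in the AI Studio environment
!pip install paddleseg -i https://mirror.baidu.com/pypi/simple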

Building the model

Import the required libraries. When adding the model to PaddleSeg, two extra imports are needed:
from paddleseg.cvlibs import manager
from paddleseg.utils import utils

import paddle
import paddle.nn as nn
import paddle.nn.functional as F

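Here pooling kernels of different sizes are used to enlarge the receptive field. Dilated convolution is probably avoided because the dilation rate is hard to tune (the water is too deep to handle). For contrast, see my earlier RegSeg reproduction; RegSeg instead favors large dilation rates. Walkthrough video: (https://www.bilibili.com/video/BV1La41127Hc?spm_id_from=333.999.0.0). Still, enlarging the receptive field with this kind of cascaded pipeline is perfectly reasonable. All the key parts are present. There is a shortcut, which mitigates vanishing and exploding gradients while preserving the semantic information from before the module. There are kernels of 1, 5, 9 and 17 up to global pooling, which keep enlarging the receptive field without dilated convolution, a simple and effective way to get the job done. Finally everything is concatenated and passed through a pointwise convolution to mix information across channels. (My feeling is that combining global average pooling with the earlier receptive fields goes some way toward solving the long-range dependency problem... probably. PS: just my opinion.)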
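As a quick check on how aggressively the pooling branches shrink the map, the sketch below applies the usual pooling output-size formula (floor mode) to the three kernel/stride pairs, with padding equal to the stride as in the DAPPM code. The input size of 8 is an assumption: it is the 1/64 map that a 480x480 image produces in this network. `pool_out` is a hypothetical helper.

```python
def pool_out(size, kernel, stride, padding):
    """Output length of average pooling along one axis (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1

feature = 8  # assumed: side length of the DAPPM input for a 480x480 image
branch_sizes = [
    pool_out(feature, kernel, stride, padding=stride)
    for kernel, stride in [(5, 2), (9, 4), (17, 8)]
]
print(branch_sizes)
```

The three branches come out at 4, 2 and 1 pixels per side, and the global-pooling branch is 1x1 as well, so each branch is upsampled back to the input size before it is fused with the previous one.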

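class DAPPM(nn.Layer):
    def __init__(self, in_dim, middle_dim, out_dim):
        super(DAPPM, self).__init__()
        # Takes the 1/64-resolution feature map as input and uses large pooling kernels
        # with exponentially growing strides to produce feature maps at 1/128, 1/256
        # and 1/512 of the image resolution.
        kernel_pool = [5, 9, 17]
        stride_pool = [2, 4, 8]
        # Note: Paddle updates running stats as running * momentum + batch * (1 - momentum),
        # so 0.1 here does not mean the same thing as momentum=0.1 in PyTorch.
        bn_mom = 0.1
        self.scale_1 = nn.Sequential(
            nn.AvgPool2D(kernel_size=kernel_pool[0], stride=stride_pool[0], padding=stride_pool[0]),
            nn.BatchNorm2D(in_dim, momentum=bn_mom),
            nn.ReLU(),
            nn.Conv2D(in_dim, middle_dim, kernel_size=1, stride=1, padding=0, bias_attr=False)
        )
        self.scale_2 = nn.Sequential(
            nn.AvgPool2D(kernel_size=kernel_pool[1], stride=stride_pool[1], padding=stride_pool[1]),
            nn.BatchNorm2D(in_dim, momentum=bn_mom),
            nn.ReLU(),
            nn.Conv2D(in_dim, middle_dim, kernel_size=1, stride=1, padding=0, bias_attr=False)
        )
        self.scale_3 = nn.Sequential(
            nn.AvgPool2D(kernel_size=kernel_pool[2], stride=stride_pool[2], padding=stride_pool[2]),
            nn.BatchNorm2D(in_dim, momentum=bn_mom),
            nn.ReLU(),
            nn.Conv2D(in_dim, middle_dim, kernel_size=1, stride=1, padding=0, bias_attr=False)
        )
        # Global average pooling branch
        self.scale_4 = nn.Sequential(
            nn.AdaptiveAvgPool2D(output_size=(1, 1)),
            nn.BatchNorm2D(in_dim, momentum=bn_mom),
            nn.ReLU(),
            nn.Conv2D(in_dim, middle_dim, kernel_size=1, stride=1, padding=0, bias_attr=False)
        )
        # Branch with the smallest receptive field (no pooling)
        self.scale_0 = nn.Sequential(
            nn.BatchNorm2D(in_dim, momentum=bn_mom),
            nn.ReLU(),
            nn.Conv2D(in_dim, middle_dim, kernel_size=1, stride=1, padding=0, bias_attr=False)
        )
        # Residual (shortcut) connection
        self.shortcut = nn.Sequential(
            nn.BatchNorm2D(in_dim, momentum=bn_mom),
            nn.ReLU(),
            nn.Conv2D(in_dim, out_dim, kernel_size=1, stride=1, padding=0, bias_attr=False)
        )
        # The 3x3 convolutions applied after each fusion step
        self.process1 = nn.Sequential(
            nn.BatchNorm2D(middle_dim, momentum=bn_mom),
            nn.ReLU(),
            nn.Conv2D(middle_dim, middle_dim, kernel_size=3, padding=1, bias_attr=False),
        )
        self.process2 = nn.Sequential(
            nn.BatchNorm2D(middle_dim, momentum=bn_mom),
            nn.ReLU(),
            nn.Conv2D(middle_dim, middle_dim, kernel_size=3, padding=1, bias_attr=False),
        )
        self.process3 = nn.Sequential(
            nn.BatchNorm2D(middle_dim, momentum=bn_mom),
            nn.ReLU(),
            nn.Conv2D(middle_dim, middle_dim, kernel_size=3, padding=1, bias_attr=False),
        )
        self.process4 = nn.Sequential(
            nn.BatchNorm2D(middle_dim, momentum=bn_mom),
            nn.ReLU(),
            nn.Conv2D(middle_dim, middle_dim, kernel_size=3, padding=1, bias_attr=False),
        )
        self.concat_conv1x1 = nn.Sequential(
            nn.BatchNorm2D(middle_dim * 5, momentum=bn_mom),
            nn.ReLU(),
            nn.Conv2D(middle_dim * 5, out_dim, kernel_size=1, bias_attr=False),
        )

    def forward(self, inps):
        layer_list = []
        H = inps.shape[2]
        W = inps.shape[3]
        # y_1 = C1x1(x)                                      i = 1
        # y_i = C3x3(U(C1x1(AvgPool_i(x))) + y_{i-1})        1 < i < n
        # y_n = C3x3(U(C1x1(GlobalAvgPool(x))) + y_{n-1})    i = n
        # (U = bilinear upsampling back to H x W)
        #
        # NOTE: the rest of this method was cut off in the source text. The lines below
        # are a reconstruction from the formula and the layers defined in __init__.
        layer_list.append(self.scale_0(inps))
        layer_list.append(self.process1(
            F.interpolate(self.scale_1(inps), size=[H, W], mode='bilinear') + layer_list[0]))
        layer_list.append(self.process2(
            F.interpolate(self.scale_2(inps), size=[H, W], mode='bilinear') + layer_list[1]))
        layer_list.append(self.process3(
            F.interpolate(self.scale_3(inps), size=[H, W], mode='bilinear') + layer_list[2]))
        layer_list.append(self.process4(
            F.interpolate(self.scale_4(inps), size=[H, W], mode='bilinear') + layer_list[3]))
        # Concatenate the five branches, mix channels with a 1x1 conv, add the shortcut
        oups = self.concat_conv1x1(paddle.concat(layer_list, axis=1)) + self.shortcut(inps)
        return oups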

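The second subtlety of this architecture is the design of the residual blocks. It does not insist on an activation after every step in each block. It defines a plain residual block and a bottleneck residual block, which handle the fusion of high-level and low-level semantic information respectively. I find the placement of the activations and the overall structure quite deliberate. The key piece, of course, is still the fusion module, which keeps low-level and high-level semantic information coexisting throughout.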

class BasicBlock(nn.Layer):
    def __init__(self, in_dim, out_dim, stride=1, downsample=None, no_relu=False):
        super(BasicBlock, self).__init__()
        # Convolution block used to build the low-resolution and high-resolution branches.
        # The original comment describes two 3x3 convolutions; as written, the block
        # stacks three (two in layer1, one in layer2).
        bn_mom = 0.1
        self.expansion = 1.0
        self.layer1 = nn.Sequential(
            nn.Conv2D(in_dim, out_dim, kernel_size=3, stride=stride, padding=1, bias_attr=False),
            nn.BatchNorm2D(out_dim, momentum=bn_mom),
            nn.ReLU(),
            nn.Conv2D(out_dim, out_dim, kernel_size=3, stride=1, padding=1, bias_attr=False),
            nn.BatchNorm2D(out_dim, momentum=bn_mom)
        )
        self.layer2 = nn.Sequential(
            nn.Conv2D(out_dim, out_dim, kernel_size=3, stride=1, padding=1, bias_attr=False),
            nn.BatchNorm2D(out_dim, momentum=bn_mom)
        )
        self.no_relu = no_relu
        self.relu = nn.ReLU()
        self.downsample = downsample

    def forward(self, inps):
        residual = inps
        oups = self.layer1(inps)
        oups = self.layer2(oups)
        if self.downsample is not None:
            residual = self.downsample(inps)
        oups = residual + oups
        # The final activation is skipped when the caller applies ReLU itself
        if not self.no_relu:
            oups = self.relu(oups)
        return oups


class BottleNeckBlock(nn.Layer):
    def __init__(self, in_dim, mid_dim, stride=1, downsample=None, no_relu=True):
        super(BottleNeckBlock, self).__init__()
        self.expansion = 2
        self.layer1 = nn.Sequential(
            nn.Conv2D(in_dim, mid_dim, kernel_size=1, bias_attr=False),
            nn.BatchNorm2D(mid_dim, momentum=0.1),
            nn.ReLU()
        )
        self.layer2 = nn.Sequential(
            nn.Conv2D(mid_dim, mid_dim, kernel_size=3, stride=stride, padding=1, bias_attr=False),
            nn.BatchNorm2D(mid_dim, momentum=0.1),
            nn.ReLU()
        )
        self.layer3 = nn.Sequential(
            nn.Conv2D(mid_dim, mid_dim * self.expansion, kernel_size=1, bias_attr=False),
            nn.BatchNorm2D(mid_dim * self.expansion, momentum=0.1),
            nn.ReLU(),
        )
        # Fix: forward() uses self.relu when no_relu is False, but it was never defined
        self.relu = nn.ReLU()
        self.downsample = downsample
        self.stride = stride
        self.no_relu = no_relu

    def forward(self, x):
        residual = x
        out = self.layer1(x)
        out = self.layer2(out)
        out = self.layer3(out)
        if self.downsample is not None:
            residual = self.downsample(x)
        out += residual
        if self.no_relu:
            return out
        else:
            return self.relu(out)

Stem design

class Stem(nn.Layer):
    def __init__(self, in_dim, out_dim):
        super(Stem, self).__init__()
        # Downsamples twice (two stride-2 convolutions, 1/4 resolution)
        bn_mom = 0.1
        self.layer = nn.Sequential(
            nn.Conv2D(in_dim, out_dim, kernel_size=3, stride=2, padding=1, bias_attr=False),
            nn.BatchNorm2D(out_dim, momentum=bn_mom),
            nn.ReLU(),
            nn.Conv2D(out_dim, out_dim, kernel_size=3, stride=2, padding=1, bias_attr=False),
            nn.BatchNorm2D(out_dim, momentum=bn_mom)
        )

    def forward(self, inps):
        oups = self.layer(inps)
        return oups


class SegHead(nn.Layer):
    def __init__(self, inplanes, interplanes, outplanes, scale_factor=None):
        super(SegHead, self).__init__()
        self.layer = nn.Sequential(
            nn.BatchNorm2D(inplanes, momentum=0.1),
            nn.Conv2D(inplanes, interplanes, kernel_size=3, padding=1, bias_attr=False),
            nn.ReLU(),
            nn.BatchNorm2D(interplanes, momentum=0.1),
            nn.ReLU(),
            nn.Conv2D(interplanes, outplanes, kernel_size=1, padding=0, bias_attr=True)
        )
        self.scale_factor = scale_factor

    def forward(self, inps):
        oups = self.layer(inps)
        # Optionally upsample the logits by an integer factor
        if self.scale_factor is not None:
            height = inps.shape[-2] * self.scale_factor
            width = inps.shape[-1] * self.scale_factor
            oups = F.interpolate(oups,
                                 size=[height, width],
                                 mode='bilinear')
        return oups


def _make_layer(block, in_dim, out_dim, block_num, stride=1, expansion=1):
    layer = []
    downsample = None
    # A 1x1 projection is needed on the shortcut when the shape changes
    if stride != 1 or in_dim != out_dim * expansion:
        downsample = nn.Sequential(
            nn.Conv2D(in_dim, out_dim * expansion, kernel_size=1, stride=stride, bias_attr=False),
            nn.BatchNorm2D(out_dim * expansion, momentum=0.1),
        )
    layer.append(block(in_dim, out_dim, stride, downsample))
    inplanes = out_dim * expansion
    for i in range(1, block_num):
        # The last block of a stage omits its final ReLU; the caller applies it
        if i == (block_num - 1):
            layer.append(block(inplanes, out_dim, stride=1, no_relu=True))
        else:
            layer.append(block(inplanes, out_dim, stride=1, no_relu=False))
    return nn.Sequential(*layer)


# When doing secondary development inside PaddleSeg, uncomment the decorator below
# @manager.MODELS.add_component
class DDRNet_23_slim(nn.Layer):

    def __init__(self, num_classes=4):
        super(DDRNet_23_slim, self).__init__()
        spp_plane = 128
        head_plane = 64
        planes = 32
        highres_planes = planes * 2
        basicBlock_expansion = 1
        bottleNeck_expansion = 2
        self.relu = nn.ReLU()
        self.stem = Stem(3, 32)
        self.layer1 = _make_layer(BasicBlock, planes, planes, block_num=2, stride=1, expansion=basicBlock_expansion)
        # Downsample to 1/8
        self.layer2 = _make_layer(BasicBlock, planes, planes * 2, block_num=2, stride=2, expansion=basicBlock_expansion)
        # Low-resolution branch: downsample to 1/16
        self.layer3 = _make_layer(BasicBlock, planes * 2, planes * 4, block_num=2, stride=2, expansion=basicBlock_expansion)
        # High-resolution branch: stays at 1/8
        self.layer3_ = _make_layer(BasicBlock, planes * 2, highres_planes, 2, stride=1, expansion=basicBlock_expansion)
        # Low-resolution branch: downsample to 1/32
        self.layer4 = _make_layer(BasicBlock, planes * 4, planes * 8, block_num=2, stride=2, expansion=basicBlock_expansion)
        # High-resolution branch layer attached to layer3_
        self.layer4_ = _make_layer(BasicBlock, highres_planes, highres_planes, 2, stride=1, expansion=basicBlock_expansion)
        # Low-to-high fusion: 1x1 compression applied before upsampling
        self.compression3 = nn.Sequential(
            nn.Conv2D(planes * 4, highres_planes, kernel_size=1, bias_attr=False),
            nn.BatchNorm2D(highres_planes, momentum=0.1),
        )
        self.compression4 = nn.Sequential(
            nn.Conv2D(planes * 8, highres_planes, kernel_size=1, bias_attr=False),
            nn.BatchNorm2D(highres_planes, momentum=0.1),
        )
        # High-to-low fusion: downsample the high-resolution branch with stride-2 convs
        self.down3 = nn.Sequential(
            nn.Conv2D(highres_planes, planes * 4, kernel_size=3, stride=2, padding=1, bias_attr=False),
            nn.BatchNorm2D(planes * 4, momentum=0.1),
        )
        self.down4 = nn.Sequential(
            nn.Conv2D(highres_planes, planes * 4, kernel_size=3, stride=2, padding=1, bias_attr=False),
            nn.BatchNorm2D(planes * 4, momentum=0.1),
            nn.ReLU(),
            nn.Conv2D(planes * 4, planes * 8, kernel_size=3, stride=2, padding=1, bias_attr=False),
            nn.BatchNorm2D(planes * 8, momentum=0.1),
        )
        self.layer5_ = _make_layer(BottleNeckBlock, highres_planes, highres_planes, 1, stride=1, expansion=bottleNeck_expansion)
        self.layer5 = _make_layer(BottleNeckBlock, planes * 8, planes * 8, 1, stride=2, expansion=bottleNeck_expansion)
        self.spp = DAPPM(planes * 16, spp_plane, planes * 4)
        self.final_layer = SegHead(planes * 4, head_plane, num_classes)

    def forward(self, inps):
        layers = [0, 0, 0, 0]
        # Target size of the high-resolution branch (1/8 of the input)
        width_output = inps.shape[-1] // 8
        height_output = inps.shape[-2] // 8
        x = self.stem(inps)
        x = self.layer1(x)
        layers[0] = x  # 1/4
        x = self.layer2(self.relu(x))
        layers[1] = x  # 1/8

        # Bilateral fusion, first round
        x = self.layer3(self.relu(x))  # low-resolution branch, 1/16
        layers[2] = x
        # Despite its name, x_low_resolution_branch holds the high-resolution (1/8) branch
        x_low_resolution_branch = self.layer3_(self.relu(layers[1]))  # 1/8
        # High-to-low: downsample the 1/8 branch to 1/16 and add it to the 1/16 branch
        x = x + self.down3(self.relu(x_low_resolution_branch))
        # Low-to-high: compress the 1/16 features, upsample to 1/8 and add
        x_low_resolution_branch = x_low_resolution_branch + F.interpolate(
            self.compression3(self.relu(layers[2])),
            size=[height_output, width_output],
            mode='bilinear')

        # Bilateral fusion, second round
        x = self.layer4(self.relu(x))  # 1/32
        layers[3] = x
        x_ = self.layer4_(self.relu(x_low_resolution_branch))  # 1/8
        x = x + self.down4(self.relu(x_))  # 1/32
        x_ = x_ + F.interpolate(
            self.compression4(self.relu(layers[3])),  # from 1/32 to 1/8
            size=[height_output, width_output],
            mode='bilinear')  # 1/8

        x_ = self.layer5_(self.relu(x_))  # 1/8
        x = F.interpolate(
            self.spp(self.layer5(self.relu(x))),  # from 1/64 to 1/8
            size=[height_output, width_output],
            mode='bilinear')
        x_ = self.final_layer(x + x_)  # 1/8
        # Upsample the logits back to the input resolution
        oups = F.interpolate(x_,
                             size=[inps.shape[-2], inps.shape[-1]],
                             mode='bilinear')
        return [oups]

Test the network structure

data = paddle.randn([1, 3, 480, 480])
model = DDRNet_23_slim(4)
print(model(data)[0].shape)

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/norm.py:653: UserWarning: When training, we now always track global mean and variance. "When training, we now always track global mean and variance.")

[1, 4, 480, 480]

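OK, no problems. This article also ships the pdparams weights of the version that cannot be exported to a static graph (trained on the dataset of the 17th Intelligent Car Competition, Complete Model group). I spent two months of the holiday on it, only to find it could not be exported (probably some conflict in how I wrote it, which is a bit mysterious). It reached an mIoU of 0.712, and 0.66 on the test data under the same search space. A pity that it cannot be exported, so it cannot be submitted! I later wrote a version that does export, but it never felt right, and I could not reproduce gains as smooth as this version's. I had to switch to a different model and approach for the competition, so I am open-sourcing this one (so annoyed! two or three months for nothing). As I recall, under the same training setup it beat STDCSeg by nearly 10 mIoU points while training for 10k fewer iterations. Model training really is alchemy (shrug).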

Model prediction

%cd work/PaddleSeg_withDDRnets/

/home/aistudio/work/PaddleSeg_withDDRnets

!python predict.py --config configs/ddrnet/train.yml --model_path /home/aistudio/model.pdparams --image_path /home/aistudio/car_data_2022/JPEGImages --save_dir output/result

If you trained the model yourself, change model_path to the path of your own model.pdparams.

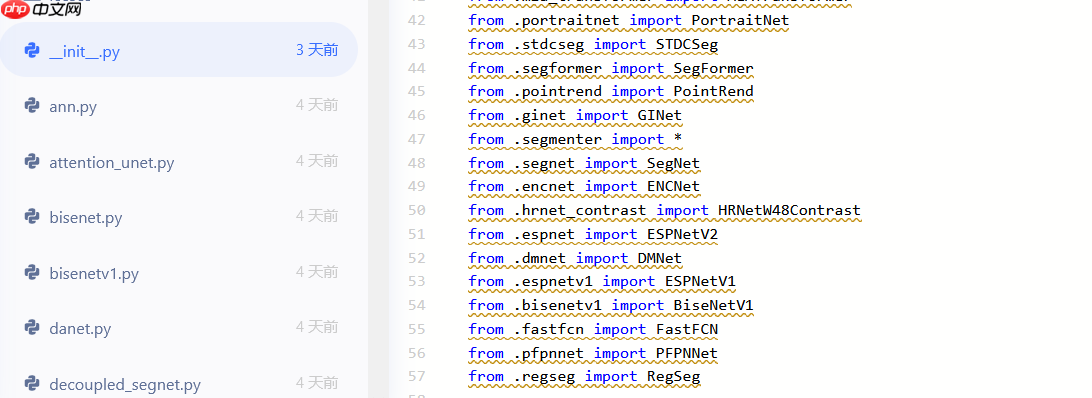

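Secondary development based on PaddleSeg

First create a .py file under this path and put the code above into it. Also remember to add the class defined in that file to the __init__ file; for this article that is: from .xxx (the name of the file you created) import DDRNet_23_slim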

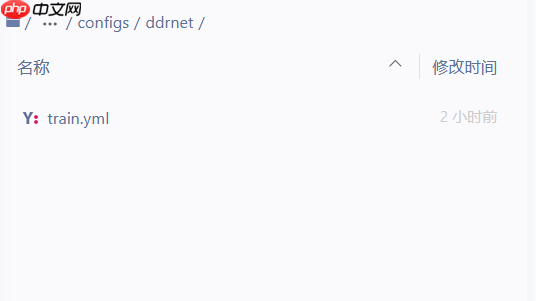
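The reason the import and the `@manager.MODELS.add_component` decorator matter is that the model is later looked up by the `type` string in the config file. The snippet below is a toy, pure-Python stand-in for that registry pattern, written only to illustrate the idea; `ToyRegistry` and the stub class body are made up for this example and are not PaddleSeg's actual implementation.

```python
class ToyRegistry:
    """Toy registry: maps a class name to the class itself."""

    def __init__(self):
        self._components = {}

    def add_component(self, cls):
        self._components[cls.__name__] = cls
        return cls  # returning cls lets this method be used as a decorator

    def __getitem__(self, name):
        return self._components[name]


MODELS = ToyRegistry()


@MODELS.add_component
class DDRNet_23_slim:  # stub standing in for the real network class
    def __init__(self, num_classes=4):
        self.num_classes = num_classes


# A config entry like "type: DDRNet_23_slim, num_classes: 4" is resolved by name:
cfg = {"type": "DDRNet_23_slim", "num_classes": 4}
kwargs = {key: value for key, value in cfg.items() if key != "type"}
model = MODELS[cfg["type"]](**kwargs)
print(type(model).__name__, model.num_classes)
```

If the class is never imported, the decorator never runs and the name is simply missing from the registry, which is why the `__init__` import is easy to forget and necessary.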

Then create a file under the configs directory.

Then add your configuration to that file, for example:

_base_: '../base/car2022.yml'

model:
  type: DDRNet_23_slim
  num_classes: 4

optimizer:
  type: sgd
  weight_decay: 0.0001

loss:
  types:
    - type: CrossEntropyLoss
  coef: [1]

batch_size: 2
iters: 160000

lr_scheduler:
  type: PolynomialDecay
  learning_rate: 0.01
  end_lr: 0.0
  power: 0.9
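To get a feel for what the learning-rate settings above do over 160000 iterations, here is a small sketch of polynomial decay. It assumes the decay runs over the full `iters` value (that is, `decay_steps` equals 160000), which is an assumption about how the config is interpreted; `poly_lr` is a hypothetical helper, not a Paddle function.

```python
def poly_lr(step, base_lr=0.01, end_lr=0.0, power=0.9, decay_steps=160000):
    """Polynomial decay: (base_lr - end_lr) * (1 - step / decay_steps) ** power + end_lr."""
    step = min(step, decay_steps)  # hold the final value once decay is finished
    return (base_lr - end_lr) * (1 - step / decay_steps) ** power + end_lr

for step in (0, 40000, 80000, 120000, 160000):
    print(step, poly_lr(step))
```

With power 0.9 the curve is close to linear, just slightly bowed, so the learning rate is a little above half of its initial value at the midpoint and reaches `end_lr` at the last iteration.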

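!!!Note!!!

The steps below use the bundled fixed-size version. To use the dynamic-size version shown above, pull a stock PaddleSeg and add the model yourself, following the secondary-development steps described above.

Model training

The path after --config must be your own!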

%cd work/PaddleSeg_withDDRnets/

!python train.py \
       --config configs/ddrnet/train.yml \
       --do_eval \
       --use_vdl \
       --save_interval 500 \
       --save_dir output

Model evaluation

!python val.py --config configs/ddrnet/train.yml --model_path output/best_model/model.pdparams
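The headline number from evaluation is mIoU, the metric behind the 0.712 figure quoted earlier. As a reminder of what it measures, here is a minimal pure-Python sketch that computes it from a confusion matrix. The 2x2 matrix is made-up toy data, `mean_iou` is a hypothetical helper, and PaddleSeg's own implementation may treat edge cases such as absent classes differently.

```python
def mean_iou(confusion):
    """confusion[i][j] = number of pixels with true class i predicted as class j."""
    n = len(confusion)
    ious = []
    for c in range(n):
        tp = confusion[c][c]
        fp = sum(confusion[r][c] for r in range(n)) - tp  # predicted c, actually another class
        fn = sum(confusion[c]) - tp                       # actually c, predicted another class
        denom = tp + fp + fn
        if denom > 0:  # skip classes absent from both labels and predictions
            ious.append(tp / denom)
    return sum(ious) / len(ious)

# Toy data: 2 classes, 20 pixels in total
toy = [[8, 2],
       [1, 9]]
print(mean_iou(toy))
```

Here class 0 has IoU 8/11 and class 1 has IoU 9/12, and mIoU is their plain average, so a class that covers only a few pixels counts as much as one that covers most of the image.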

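Since static-graph export does not work, I am not including the export script, but in dynamic-graph mode everything runs smoothly and the results are very nice!

Result images: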