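This article shows how to train an image-compression autoencoder with SSIM or MS-SSIM as the loss function. The autoencoder consists of an encoder built with GDN modules and a decoder built with IGDN modules. The 24 Kodak photos serve as training samples, and the loss is 1 minus the SSIM or MS-SSIM score. The model is trained with the parameters listed below, followed by a compression and decompression demo. Because the training set is so small, the reconstructed images lose considerable quality.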

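Introduction

An autoencoder (AutoEncoder) is a self-supervised algorithm: the input data also serves as the label. It is commonly used for dimensionality reduction, image compression, and similar tasks.

An autoencoder generally consists of an encoder that extracts features and a decoder that reconstructs the input from those features.

This article shows how to train an autoencoder for image compression using SSIM or MS-SSIM as the loss function.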

References

END-TO-END OPTIMIZED IMAGE COMPRESSION

Paddle MS-SSIM

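Autoencoder

A typical autoencoder has two main parts: an encoder, which compresses the features, and a decoder, which decompresses them. The rough architecture is shown in the figure below: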

Preparation

Install Paddle MS-SSIM

!pip install paddle_msssim

Import the required packages

    import os
    import sys
    import argparse
    import numpy as np
    from PIL import Image
    import paddle
    import paddle.nn as nn
    import paddle.nn.functional as F
    from paddle.optimizer import Adam
    from paddle.vision import transforms
    from paddle.io import DataLoader, Dataset
    from paddle_msssim import ssim, ms_ssim, SSIM, MS_SSIM

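Model implementation

GDN / IGDN modules

GDN (Generalized Divisive Normalization) / IGDN (Inverse Generalized Divisive Normalization)

GDN plays a role similar to Batch Normalization in ordinary convolutional neural networks (CNNs): it captures the statistics of images well and transforms them toward a Gaussian distribution.

In the encoder stage of the autoencoder, GDN modules take part in the network's learning.

Correspondingly, the decoder stage uses IGDN modules, the inverse operation of GDN.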

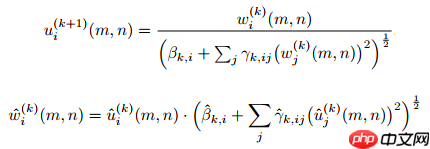

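The GDN / IGDN formulas are given below, written to match the code that follows. Here k is the stage index, i and j index channels (γ is a C × C matrix that mixes channels), (m, n) is the pixel position, x is the input of the normalization, and y is its output:

$$\text{GDN:}\quad y_i^{(k)}(m,n) = \frac{x_i^{(k)}(m,n)}{\sqrt{\beta_{k,i} + \sum_j \gamma_{k,ij}\,\bigl(x_j^{(k)}(m,n)\bigr)^2}}$$

$$\text{IGDN:}\quad y_i^{(k)}(m,n) = x_i^{(k)}(m,n)\,\sqrt{\beta_{k,i} + \sum_j \gamma_{k,ij}\,\bigl(x_j^{(k)}(m,n)\bigr)^2}$$

The implementation is as follows: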

    class GDN(nn.Layer):
        """Generalized Divisive Normalization; inverse=True gives IGDN."""

        def __init__(self,
                     num_features,
                     inverse=False,
                     gamma_init=.1,
                     beta_bound=1e-6,
                     gamma_bound=0.0,
                     reparam_offset=2**-18):
            super(GDN, self).__init__()
            self._inverse = inverse
            self.num_features = num_features
            self.reparam_offset = reparam_offset
            self.pedestal = self.reparam_offset**2

            # Parameters are stored as square roots and squared again in _reparam,
            # which keeps the effective beta and gamma non-negative.
            beta_init = paddle.sqrt(
                paddle.ones((num_features, ), dtype=paddle.float32) + self.pedestal)
            gamma_mat_init = paddle.sqrt(
                paddle.full((num_features, num_features), fill_value=gamma_init, dtype=paddle.float32)
                * paddle.eye(num_features, dtype=paddle.float32) + self.pedestal)

            self.beta = self.create_parameter(
                shape=beta_init.shape, default_initializer=nn.initializer.Assign(beta_init))
            self.gamma = self.create_parameter(
                shape=gamma_mat_init.shape, default_initializer=nn.initializer.Assign(gamma_mat_init))

            self.beta_bound = (beta_bound + self.pedestal) ** 0.5
            self.gamma_bound = (gamma_bound + self.pedestal) ** 0.5

        def _reparam(self, var, bound):
            # clip to the lower bound, then undo the square-root parameterization
            var = paddle.clip(var, min=bound)
            return (var**2) - self.pedestal

        def forward(self, x):
            # expand gamma to (C, C, 1, 1) so it acts as a 1x1 convolution kernel
            gamma = self._reparam(self.gamma, self.gamma_bound).reshape(
                (self.num_features, self.num_features, 1, 1))
            beta = self._reparam(self.beta, self.beta_bound)

            # norm_pool = sqrt(beta_i + sum_j gamma_ij * x_j^2)
            norm_pool = F.conv2d(x ** 2, gamma, bias=beta, stride=1, padding=0)
            norm_pool = paddle.sqrt(norm_pool)

            if self._inverse:
                norm_pool = x * norm_pool  # IGDN
            else:
                norm_pool = x / norm_pool  # GDN
            return norm_pool
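To make the normalization in `forward` easier to check by hand, here is a minimal NumPy sketch of the same computation. The helper name `gdn_reference` and the toy shapes are assumptions for illustration only; `beta` and `gamma` stand for the already reparameterized (non-negative) values, and the 1x1 convolution is written as an `einsum` over channels.

```python
import numpy as np

def gdn_reference(x, beta, gamma, inverse=False):
    """Reference (I)GDN for x of shape (N, C, H, W).

    beta has shape (C,), gamma has shape (C, C).
    """
    # norm[n, i, h, w] = sqrt(beta[i] + sum_j gamma[i, j] * x[n, j, h, w]**2)
    pooled = np.einsum("ij,njhw->nihw", gamma, x ** 2)
    norm = np.sqrt(pooled + beta[None, :, None, None])
    return x * norm if inverse else x / norm

rng = np.random.default_rng(0)
x = rng.standard_normal((1, 4, 8, 8)).astype(np.float32)
beta = np.ones(4, dtype=np.float32)
gamma = 0.1 * np.eye(4, dtype=np.float32)  # same diagonal init as the layer above

y = gdn_reference(x, beta, gamma)
print(y.shape)
```

With a diagonal `gamma`, each channel is simply divided by `sqrt(1 + 0.1 * x**2)`, so large activations are damped more strongly than small ones.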

Encoder

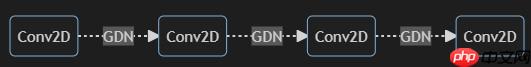

A simple encoder is built from several convolution layers and GDN modules, as shown in the figure:

    class Encoder(nn.Layer):
        def __init__(self, C=32, M=128, in_chan=3):
            super(Encoder, self).__init__()
            # four stride-2 convolutions, with GDN after the first three
            self.enc = nn.Sequential(
                nn.Conv2D(in_channels=in_chan, out_channels=M,
                          kernel_size=5, stride=2, padding=2, bias_attr=False),
                GDN(M),
                nn.Conv2D(in_channels=M, out_channels=M, kernel_size=5,
                          stride=2, padding=2, bias_attr=False),
                GDN(M),
                nn.Conv2D(in_channels=M, out_channels=M, kernel_size=5,
                          stride=2, padding=2, bias_attr=False),
                GDN(M),
                nn.Conv2D(in_channels=M, out_channels=C, kernel_size=5,
                          stride=2, padding=2, bias_attr=False)
            )

        def forward(self, x):
            return self.enc(x)
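The encoder halves the spatial resolution four times. The short sketch below works through that arithmetic with the standard convolution output-size formula, using the kernel size, stride, and padding from the code above; the helper name `conv_out_size` is an assumption for illustration, and dilation is assumed to be 1.

```python
def conv_out_size(size, kernel=5, stride=2, padding=2):
    """Output length of one convolution along a single spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1

size = 512
sizes = [size]
for _ in range(4):  # the encoder stacks four stride-2 convolutions
    size = conv_out_size(size)
    sizes.append(size)

print(sizes)  # [512, 256, 128, 64, 32]
```

A 512 x 512 input therefore ends up as a 32 x 32 feature map, which matches the hidden shape printed in the demo at the end of the article.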

Decoder

A simple decoder is built from several transposed-convolution layers and IGDN modules, as shown in the figure:

    class Decoder(nn.Layer):
        def __init__(self, C=32, M=128, out_chan=3):
            super(Decoder, self).__init__()
            # four stride-2 transposed convolutions, with IGDN after the first three
            self.dec = nn.Sequential(
                nn.Conv2DTranspose(in_channels=C, out_channels=M, kernel_size=5,
                                   stride=2, padding=2, output_padding=1, bias_attr=False),
                GDN(M, inverse=True),
                nn.Conv2DTranspose(in_channels=M, out_channels=M, kernel_size=5,
                                   stride=2, padding=2, output_padding=1, bias_attr=False),
                GDN(M, inverse=True),
                nn.Conv2DTranspose(in_channels=M, out_channels=M, kernel_size=5,
                                   stride=2, padding=2, output_padding=1, bias_attr=False),
                GDN(M, inverse=True),
                nn.Conv2DTranspose(in_channels=M, out_channels=out_chan, kernel_size=5,
                                   stride=2, padding=2, output_padding=1, bias_attr=False),
            )

        def forward(self, q):
            # sigmoid keeps the reconstructed pixels in [0, 1]
            return F.sigmoid(self.dec(q))
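The decoder mirrors the encoder, doubling the resolution at each layer. The sketch below applies the usual transposed-convolution size formula with the parameters from the code above; the helper name `conv_transpose_out_size` is an assumption for illustration, and dilation is assumed to be 1.

```python
def conv_transpose_out_size(size, kernel=5, stride=2, padding=2, output_padding=1):
    """Output length of one transposed convolution along a single spatial axis."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding

size = 32
sizes = [size]
for _ in range(4):  # the decoder stacks four stride-2 transposed convolutions
    size = conv_transpose_out_size(size)
    sizes.append(size)

print(sizes)  # [32, 64, 128, 256, 512]

# Without output_padding the first layer would yield 63 instead of 64.
print(conv_transpose_out_size(32, output_padding=0))  # 63
```

This is why `output_padding=1` appears in every layer: it restores the exact factor of two that the stride-2 convolutions in the encoder removed.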

Autoencoder

- Combining the encoder and decoder above gives a simple autoencoder

    class AutoEncoder(nn.Layer):
        def __init__(self, C=128, M=128, in_chan=3, out_chan=3):
            super(AutoEncoder, self).__init__()
            self.encoder = Encoder(C=C, M=M, in_chan=in_chan)
            self.decoder = Decoder(C=C, M=M, out_chan=out_chan)

        def forward(self, x, **kargs):
            code = self.encoder(x)
            out = self.decoder(code)
            return out

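Dataset

Introduction

For demonstration purposes, the 24 copyright-free test photos provided by Kodak are used as training samples, so the dataset is quite small.

Training on more images would of course give better results.

Sample images are shown below:

Dataset implementation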

    class ImageDataset(Dataset):
        def __init__(self, root, transform=None):
            self.root = root
            self.transform = transform
            self.images = list(os.listdir(root))
            self.images.sort()

        def __getitem__(self, idx):
            img = Image.open(os.path.join(self.root, self.images[idx]))
            if self.transform is not None:
                img = self.transform(img)
            # return a one-element tuple: there is no separate label
            return img,

        def __len__(self):
            return len(self.images)

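Model training

Loss function

- Higher SSIM and MS-SSIM scores are better, whereas network training normally minimizes its objective

- The loss is therefore defined as 1 minus the computed SSIM or MS-SSIM score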

    class MS_SSIM_Loss(MS_SSIM):
        def forward(self, img1, img2):
            return 100*(1 - super(MS_SSIM_Loss, self).forward(img1, img2))


    class SSIM_Loss(SSIM):
        def forward(self, img1, img2):
            return 100*(1 - super(SSIM_Loss, self).forward(img1, img2))
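To illustrate why "1 minus SSIM" behaves like a loss, here is a simplified NumPy sketch. It computes a single global SSIM over the whole image rather than the Gaussian-windowed (and multi-scale) version that `paddle_msssim` implements, so it is an assumption-laden toy and not the library's algorithm. The names `global_ssim` and `ssim_loss` are hypothetical; the constants 0.01 and 0.03 are the commonly used SSIM defaults.

```python
import numpy as np

def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """SSIM computed from global image statistics (one window = whole image)."""
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator

def ssim_loss(x, y):
    """Same shape as the losses above: 100 * (1 - similarity)."""
    return 100.0 * (1.0 - global_ssim(x, y))

rng = np.random.default_rng(0)
img = rng.random((64, 64))
noisy = np.clip(img + 0.1 * rng.standard_normal(img.shape), 0.0, 1.0)

loss_same = ssim_loss(img, img)     # identical images: similarity 1, loss 0
loss_noisy = ssim_loss(img, noisy)  # degraded image: similarity < 1, loss > 0
print(loss_same, loss_noisy)
```

The factor of 100 only rescales the loss; it does not change which reconstruction is considered best.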

Training parameters

    def get_argparser():
        parser = argparse.ArgumentParser()
        parser.add_argument("--ckpt", default=None, type=str,
                            help="path to trained model. Leave it None if you want to retrain your model")
        parser.add_argument("--loss_type", type=str,
                            default='ms_ssim', choices=['ssim', 'ms_ssim'])
        parser.add_argument("--batch_size", type=int, default=24)
        parser.add_argument("--log_interval", type=int, default=1)
        parser.add_argument("--total_epochs", type=int, default=200)
        return parser
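The training script below calls `parse_known_args()` rather than `parse_args()`. A likely reason is that the code runs in a notebook, where the process may receive extra command-line arguments that the parser does not define. The sketch below shows the behaviour with a cut-down parser; the `-f kernel.json` arguments are made up purely to stand in for such extras.

```python
import argparse

parser = argparse.ArgumentParser()
parser.add_argument("--loss_type", type=str,
                    default="ms_ssim", choices=["ssim", "ms_ssim"])
parser.add_argument("--total_epochs", type=int, default=200)

# Unknown arguments are returned separately instead of raising an error.
opts, unparsed = parser.parse_known_args(
    ["--loss_type", "ssim", "-f", "kernel.json"])

print(opts.loss_type, opts.total_epochs)  # ssim 200
print(unparsed)                           # ['-f', 'kernel.json']
```

With `parse_args()` the same input would abort with an "unrecognized arguments" error.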

Model training and evaluation

    def test(opts, model, val_loader, epoch):
        """Evaluate the model and save every reconstructed validation image."""
        save_dir = os.path.join('results', 'epoch_%d' % epoch)
        os.makedirs(save_dir, exist_ok=True)

        model.eval()
        cur_score = 0.0
        metric = ssim if opts.loss_type == 'ssim' else ms_ssim
        with paddle.no_grad():
            for i, (images, ) in enumerate(val_loader):
                outputs = model(images)
                cur_score += metric(outputs, images, data_range=1.0).item()
                # save the reconstructed image (validation batch size is 1)
                Image.fromarray(
                    (outputs*255).squeeze(0).detach().numpy().astype('uint8').transpose(1, 2, 0)
                ).save(os.path.join(save_dir, 'recons_%s_%d.png' % (opts.loss_type, i)))
        cur_score /= len(val_loader.dataset)
        return cur_score


    os.makedirs('results', exist_ok=True)

    opts, unparsed = get_argparser().parse_known_args()

    # dataset
    train_transform = transforms.Compose([
        transforms.RandomCrop(size=512, pad_if_needed=True),
        transforms.RandomHorizontalFlip(),
        transforms.RandomVerticalFlip(),
        transforms.ToTensor(),
    ])
    val_transform = transforms.Compose([
        transforms.CenterCrop(size=512),
        transforms.ToTensor()
    ])

    train_loader = DataLoader(
        ImageDataset(root='datasets/kodak', transform=train_transform),
        batch_size=opts.batch_size, shuffle=True, num_workers=0, drop_last=True)
    val_loader = DataLoader(
        ImageDataset(root='datasets/kodak', transform=val_transform),
        batch_size=1, shuffle=False, num_workers=0)
    print("Train set: %d, Val set: %d" %
          (len(train_loader.dataset), len(val_loader.dataset)))

    model = AutoEncoder(C=128, M=128, in_chan=3, out_chan=3)

    # optimizer
    optimizer = Adam(parameters=model.parameters(),
                     learning_rate=1e-4,
                     weight_decay=1e-5)

    # checkpoint
    best_score = 0.0  # tracked across all epochs
    cur_epoch = 0
    if opts.ckpt is not None and os.path.isfile(opts.ckpt):
        model.set_dict(paddle.load(opts.ckpt))
    else:
        print("[!] Retrain")

    if opts.loss_type == 'ssim':
        criterion = SSIM_Loss(data_range=1.0, size_average=True, channel=3)
    else:
        criterion = MS_SSIM_Loss(data_range=1.0, size_average=True, channel=3)

    #========== Train Loop ==========#
    for cur_epoch in range(opts.total_epochs):
        # ===== Train =====
        model.train()
        for cur_step, (images, ) in enumerate(train_loader):
            optimizer.clear_grad()
            outputs = model(images)
            loss = criterion(outputs, images)
            loss.backward()
            optimizer.step()

            if (cur_step) % opts.log_interval == 0:
                print("Epoch %d, Batch %d/%d, loss=%.6f" %
                      (cur_epoch, cur_step, len(train_loader), loss.item()))

        # ===== Save Latest Model =====
        paddle.save(model.state_dict(), 'latest_model.pdparams')

        # ===== Validation =====
        print("Val...")
        cur_score = test(opts, model, val_loader, cur_epoch)
        print("%s = %.6f" % (opts.loss_type, cur_score))

        # ===== Save Best Model =====
        if cur_score > best_score:  # save best model
            best_score = cur_score
            paddle.save(model.state_dict(), 'best_model.pdparams')
            print("Best model saved as best_model.pdparams")

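Image compression demo

- The following code compresses and decompresses an image

- Because only a few training images were used, the reconstructed image loses considerable quality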

    from IPython import display

    # load a test image
    dataset = ImageDataset(root='datasets/kodak', transform=val_transform)
    x = dataset[0][0][None, ...]
    print('input shape:', x.shape, 'size:', x.size)

    model.eval()
    with paddle.no_grad():
        # encode (compress) the image
        hidden = model.encoder(x)
        print('hidden shape:', hidden.shape, 'size:', hidden.size)

        # decode (decompress) the image
        y = model.decoder(hidden)
        print('output shape:', y.shape, 'size:', y.size)

    # convert an image tensor back to an HWC uint8 array
    def postprocess(tensor):
        tensor = (tensor*255).squeeze().cast(paddle.uint8).numpy()
        img = tensor.transpose(1, 2, 0)
        return img

    # restore both images and place them side by side for comparison
    img_y = postprocess(y)
    img_x = postprocess(x)
    img = np.concatenate([img_x, img_y], 1)
    img = Image.fromarray(img)
    img.save('test.jpg')

    display.Image('test.jpg')

    input shape: [1, 3, 512, 512] size: 786432
    hidden shape: [1, 128, 32, 32] size: 131072
    output shape: [1, 3, 512, 512] size: 786432
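The printed sizes can be turned into a rough reduction factor. The sketch below only counts tensor elements, using the shapes from the output above. It is not a bitrate: the hidden code is still a floating-point tensor, and this demo applies no quantization or entropy coding.

```python
from math import prod

input_shape = (1, 3, 512, 512)    # from the printed output above
hidden_shape = (1, 128, 32, 32)

n_in = prod(input_shape)
n_hidden = prod(hidden_shape)
ratio = n_in / n_hidden

print(n_in, n_hidden, ratio)  # 786432 131072 6.0
```

The latent representation thus holds six times fewer values than the input image.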