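This project is an unofficial baseline for the "Single/Multi-Camera Pedestrian Tracking Model" task of the 2021 China Software Cup. It uses PaddleDetection to quickly train a detection model and then deploys it to an Android phone with Paddle Lite, taking a PaddlePaddle deep learning model all the way to a real device.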


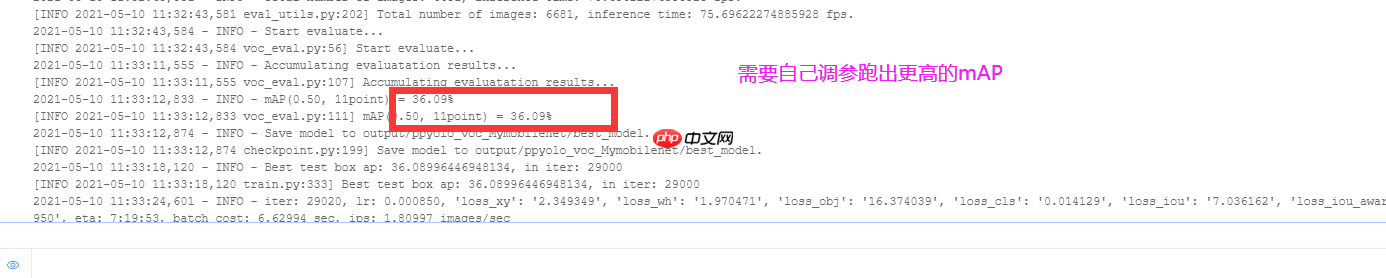

With tracking added~ this is what I got using a model at about 70% mAP (version V13, code cell 50).

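Comments on where the project can still be improved, and experience deploying other models to different mobile devices, are very welcome. Let's share ideas and learn together; feel free to follow me and I'll follow back ♥ (personal homepage).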

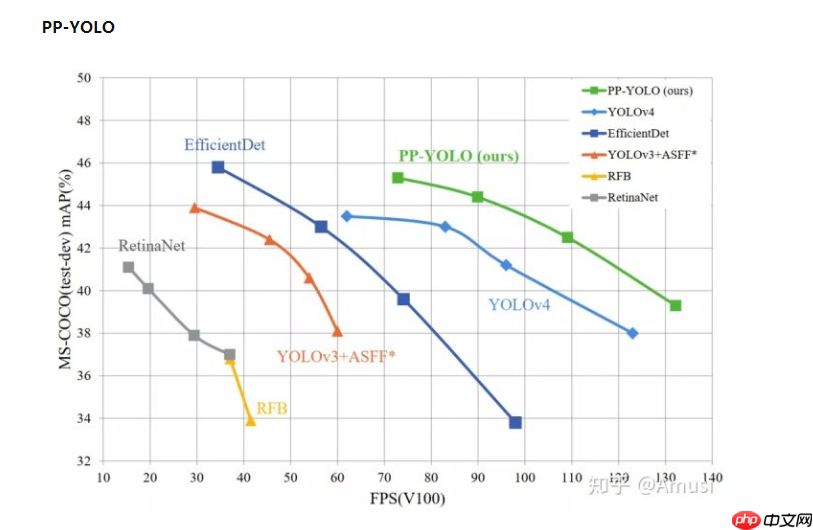

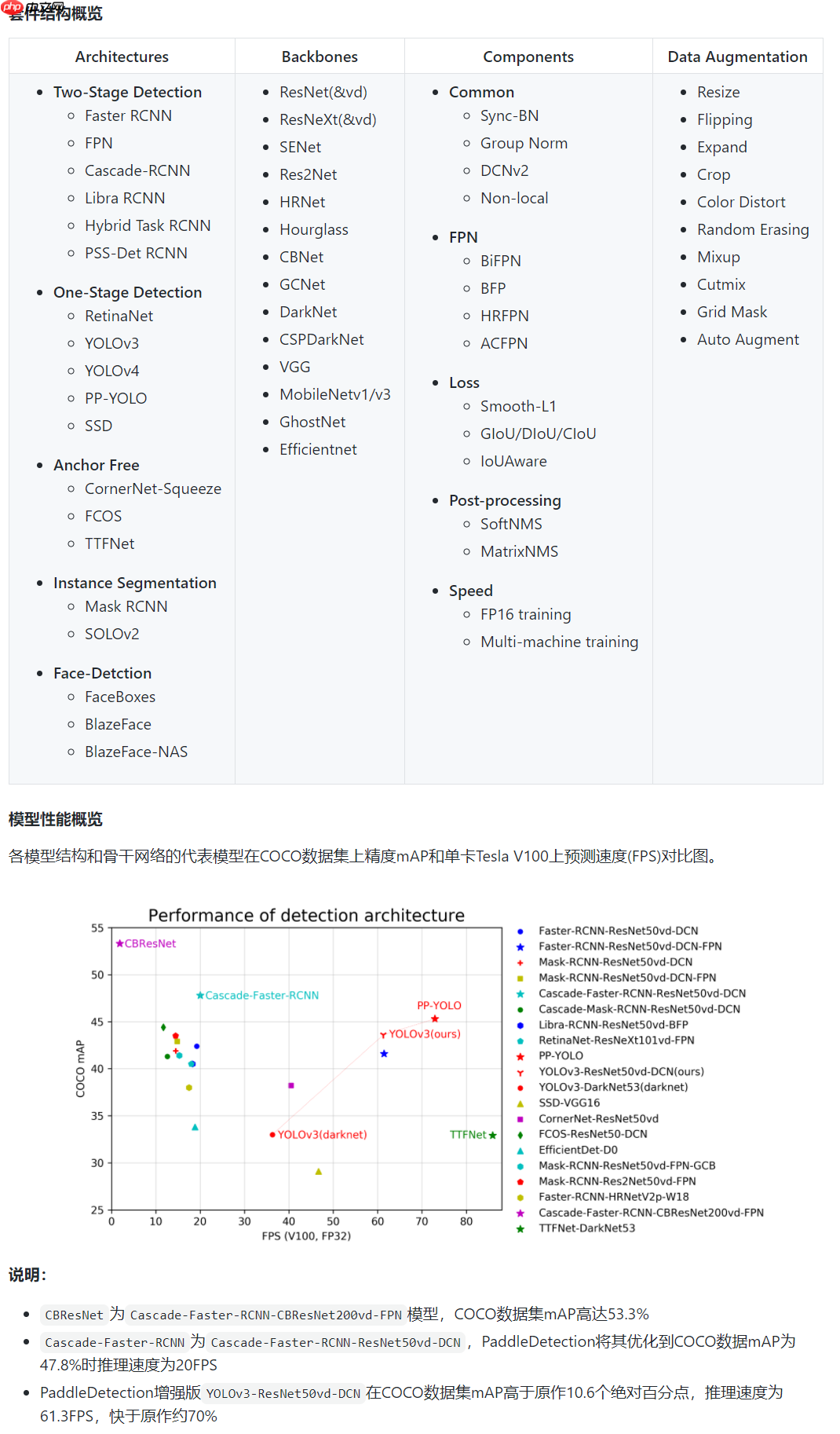

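PaddleDetection, PaddlePaddle's object detection development kit, is designed to help developers build, train, optimize and deploy detection models faster and better across the whole development workflow. It implements many mainstream object detection algorithms in a modular way, provides rich data augmentation strategies, network components (such as backbones) and loss functions, and integrates model compression and high-performance cross-platform deployment. See the PPYolo documentation.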

# Needed on the first run: unpack the PaddleDetection resources
!unzip data/data87668/MyDetectionconfig.zip

!pip install pycocotools
!pip install scikit-image

# Create the directories for the parsed images and XML files
!mkdir VOCData/
!mkdir VOCData/images/
!mkdir VOCData/annotations/

The dataset for this project is the person subset of COCO.

# Run once, then comment these lines out
!unzip -oq data/data7122/train2017.zip -d ./
!unzip -oq data/data7122/val2017.zip -d ./
!unzip -oq data/data7122/annotations_trainval2017.zip -d ./

Extract the person category from COCO and convert it to VOC format.
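The conversion comes down to two steps per image: turn each COCO box `[x, y, width, height]` into VOC corner coordinates, then write the boxes into an `<annotation>` XML file. The sketch below shows those two steps with the standard library's `xml.etree.ElementTree` instead of `%`-formatted string templates. It is only an illustration: the helper names `coco_bbox_to_voc` and `build_voc_xml` are hypothetical, and the XML keeps a trimmed-down field set.

```python
import xml.etree.ElementTree as ET


def coco_bbox_to_voc(bbox):
    """COCO [x, y, w, h] -> VOC (xmin, ymin, xmax, ymax), truncated to int like the script."""
    x, y, w, h = bbox
    return int(x), int(y), int(x + w), int(y + h)


def build_voc_xml(filename, width, height, depth, objects):
    """Build a trimmed-down VOC annotation string.

    objects is a list of (class_name, coco_bbox) pairs.
    """
    root = ET.Element("annotation")
    ET.SubElement(root, "folder").text = "VOC"
    ET.SubElement(root, "filename").text = filename
    size = ET.SubElement(root, "size")
    for tag, value in (("width", width), ("height", height), ("depth", depth)):
        ET.SubElement(size, tag).text = str(value)
    ET.SubElement(root, "segmented").text = "0"
    for name, bbox in objects:
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = name
        ET.SubElement(obj, "difficult").text = "0"
        box = ET.SubElement(obj, "bndbox")
        corners = coco_bbox_to_voc(bbox)
        for tag, value in zip(("xmin", "ymin", "xmax", "ymax"), corners):
            ET.SubElement(box, tag).text = str(value)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # A made-up person box, only to show the output format
    print(build_voc_xml("000000000001.jpg", 640, 480, 3,
                        [("person", [10.5, 20.2, 30.0, 60.7])]))
```

Building the tree with ElementTree escapes special characters automatically and guarantees well-formed XML, which string templates do not.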

# Create the index
from pycocotools.coco import COCO
import os
import shutil
from tqdm import tqdm
import skimage.io as io
import matplotlib.pyplot as plt
import cv2
from PIL import Image, ImageDraw
from shutil import move
import xml.etree.ElementTree as ET
from random import shuffle
# import fileinput  # file-stream helper (not used)

# Output paths
savepath = "VOCData/"
img_dir = savepath + 'images/'        # images/ holds all copied photos
anno_dir = savepath + 'annotations/'  # annotations/ holds the generated XML files
datasets_list = ['train2017', 'val2017']

classes_names = ['person']
# Root folder of the COCO data: annotations/ and train2017/, val2017/ are stored here
dataDir = './'

# XML templates: the %s/%d placeholders are filled in later, when each file is written
# ------- dataset conversion stage -------
headstr = """
<annotation>
    <folder>VOC</folder>
    <filename>%s</filename>
    <source>
        <database>My Database</database>
        <annotation>COCO</annotation>
        <image>flickr</image>
        <flickrid>NULL</flickrid>
    </source>
    <owner>
        <flickrid>NULL</flickrid>
        <name>company</name>
    </owner>
    <size>
        <width>%d</width>
        <height>%d</height>
        <depth>%d</depth>
    </size>
    <segmented>0</segmented>
"""

objstr = """
    <object>
        <name>%s</name>
        <pose>Unspecified</pose>
        <truncated>0</truncated>
        <difficult>0</difficult>
        <bndbox>
            <xmin>%d</xmin>
            <ymin>%d</ymin>
            <xmax>%d</xmax>
            <ymax>%d</ymax>
        </bndbox>
    </object>
"""

tailstr = '''
</annotation>
'''

def mkr(path):
    # Create the directory; if it already exists, delete it first and recreate it empty
    if os.path.exists(path):
        shutil.rmtree(path)
    os.mkdir(path)

mkr(img_dir)
mkr(anno_dir)

# ------- data processing stage -------
def id2name(coco):
    # Build a dict mapping each category id in the data to its name
    classes = dict()
    for cls in coco.dataset['categories']:
        classes[cls['id']] = cls['name']
    return classes

def write_xml(anno_path, head, objs, tail):
    # Write the extracted boxes into the matching places of the templates
    with open(anno_path, "w") as f:  # 'with' makes sure the file is closed
        f.write(head)
        for obj in objs:
            f.write(objstr % (obj[0], obj[1], obj[2], obj[3], obj[4]))
        f.write(tail)

def save_annotations_and_imgs(coco, dataset, filename, objs):
    # e.g. COCO_train2014_000000196610.jpg --> COCO_train2014_000000196610.xml
    anno_path = anno_dir + filename[:-3] + 'xml'
    img_path = dataDir + dataset + '/' + filename
    dst_imgpath = img_dir + filename

    img = cv2.imread(img_path)
    # Skip files that cannot be read or are not 3-channel images
    if img is None or img.shape[2] == 1:
        print(filename + " is not a readable RGB image")
        return
    shutil.copy(img_path, dst_imgpath)

    head = headstr % (filename, img.shape[1], img.shape[0], img.shape[2])
    tail = tailstr
    write_xml(anno_path, head, objs, tail)

def showimg(coco, dataset, img, classes, cls_id, show=True):
    global dataDir
    I = Image.open('%s/%s/%s' % (dataDir, dataset, img['file_name']))
    # Look up this image's annotations by its id
    annIds = coco.getAnnIds(imgIds=img['id'], catIds=cls_id, iscrowd=None)
    anns = coco.loadAnns(annIds)
    # coco.showAnns(anns)
    objs = []
    for ann in anns:
        class_name = classes[ann['category_id']]
        if class_name in classes_names:
            if 'bbox' in ann:
                # COCO boxes are [x, y, width, height]; VOC uses corner coordinates
                bbox = ann['bbox']
                xmin = int(bbox[0])
                ymin = int(bbox[1])
                xmax = int(bbox[2] + bbox[0])
                ymax = int(bbox[3] + bbox[1])
                obj = [class_name, xmin, ymin, xmax, ymax]
                objs.append(obj)
    return objs

for dataset in datasets_list:
    # e.g. ./annotations/instances_train2017.json
    annFile = '{}/annotations/instances_{}.json'.format(dataDir, dataset)

    # Initialise the COCO API with the annotation file.
    # Creating the COCO object prints:
    #   loading annotations into memory...
    #   Done (t=0.81s)
    #   creating index...
    #   index created!
    # At this point the JSON file has been parsed and every image is linked to its annotations.
    coco = COCO(annFile)

    # Show all classes in COCO
    classes = id2name(coco)
    print(classes)
    classes_ids = coco.getCatIds(catNms=classes_names)
    print(classes_ids)  # e.g. [1] for 'person'
    for cls in classes_names:
        # Get the id of this class
        cls_id = coco.getCatIds(catNms=[cls])
        img_ids = coco.getImgIds(catIds=cls_id)
        # imgIds = img_ids[0:10]
        for imgId in tqdm(img_ids):
            img = coco.loadImgs(imgId)[0]
            filename = img['file_name']
            objs = showimg(coco, dataset, img, classes, classes_ids, show=False)
            save_annotations_and_imgs(coco, dataset, filename, objs)

# Move the generated image/XML pairs into top-level images/ and Annotations/ folders
out_img_base = 'images'
out_xml_base = 'Annotations'
img_base = 'VOCData/images/'
xml_base = 'VOCData/annotations/'

if not os.path.exists(out_img_base):
    os.mkdir(out_img_base)
if not os.path.exists(out_xml_base):
    os.mkdir(out_xml_base)

for img in tqdm(os.listdir(img_base)):
    xml = img.replace('.jpg', '.xml')
    src_img = os.path.join(img_base, img)
    src_xml = os.path.join(xml_base, xml)
    dst_img = os.path.join(out_img_base, img)
    dst_xml = os.path.join(out_xml_base, xml)
    # Only move images that have a matching annotation file
    if os.path.exists(src_img) and os.path.exists(src_xml):
        move(src_img, dst_img)
        move(src_xml, dst_xml)

def extract_xml(infile):

    # Original version based on ET.parse (same effect as the code below):
    # tree = ET.parse(infile)
    # root = tree.getroot()
    # size = root.find('size')
    # classes = []
    # for obj in root.iter('object'):
    #     cls_ = obj.find('name').text
    #     classes.append(cls_)
    # return classes

    # Parse the <name> tags in the XML file
    with open(infile, 'r') as f:
        xml_text = f.read()
    root = ET.fromstring(xml_text)
    classes = []
    for obj in root.iter('object'):
        cls_ = obj.find('name').text
        classes.append(cls_)
    return classes

if __name__ == '__main__':
    base = 'Annotations/'
    # Alternative: Xmls = sorted(v for v in os.listdir(base) if v.endswith('.xml'))
    # sorted() takes an iterable, an optional key function used for comparison,
    # and reverse=True for descending order (default is ascending)
    Xmls = [v for v in os.listdir(base) if v.endswith('.xml')]
    print('-[INFO] total:', len(Xmls))

    labels = {'person': 0}
    for xml in Xmls:
        infile = os.path.join(base, xml)
        cls_ = extract_xml(infile)
        for c in cls_:
            if c not in labels:
                # Unexpected class name: stop so the file can be inspected
                raise ValueError('unexpected class {!r} in {}'.format(c, infile))
            labels[c] += 1

    for k, v in labels.items():
        print('-[Count] {} total:{} per:{}'.format(k, v, v / len(Xmls)))

# Delete the files that are no longer needed (run once)
!rm -rf val2017/
!rm -rf train2017/
!rm -rf annotations/
!rm -rf VOCData/
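Because the conversion truncates coordinates with `int()`, a COCO box that is less than one pixel wide or tall can end up with `xmax == xmin` or `ymax == ymin`. Such degenerate boxes may cause trouble during training, so it can be worth scanning the generated XML files before moving on. A minimal sketch; `find_degenerate_boxes` is a hypothetical helper, not part of the original project, and it assumes the `Annotations/` folder produced by the script.

```python
import os
import xml.etree.ElementTree as ET


def find_degenerate_boxes(xml_text):
    """Return (name, xmin, ymin, xmax, ymax) for every box with zero or negative size."""
    root = ET.fromstring(xml_text)
    bad = []
    for obj in root.iter("object"):
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (int(box.find(t).text) for t in ("xmin", "ymin", "xmax", "ymax"))
        if xmax <= xmin or ymax <= ymin:
            bad.append((obj.find("name").text, xmin, ymin, xmax, ymax))
    return bad


if __name__ == "__main__":
    base = "Annotations/"  # same folder the statistics script reads
    if os.path.isdir(base):
        for name in sorted(os.listdir(base)):
            if name.endswith(".xml"):
                with open(os.path.join(base, name)) as f:
                    bad = find_degenerate_boxes(f.read())
                if bad:
                    print(name, bad)
```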

# Always run this first to enter this directory, so that cell 26 runs correctly!
%cd work/PaddleDetection-release-2.0-rc/

!mkdir VOCData
!mv -f ../../images/ VOCData/
!mv -f ../../Annotations/ VOCData/

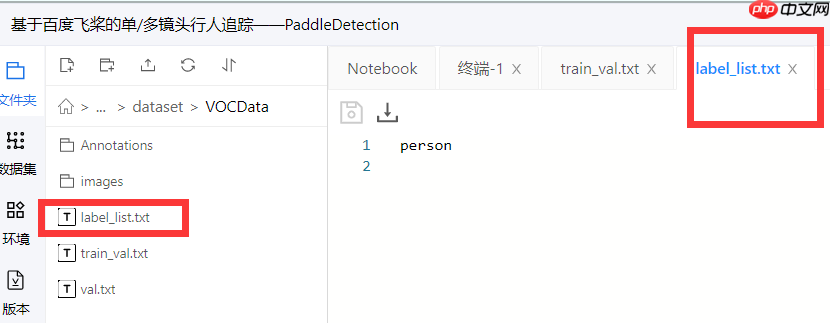

# If this file does not exist yet, create an empty label list txt file
!touch VOCData/label_list.txt

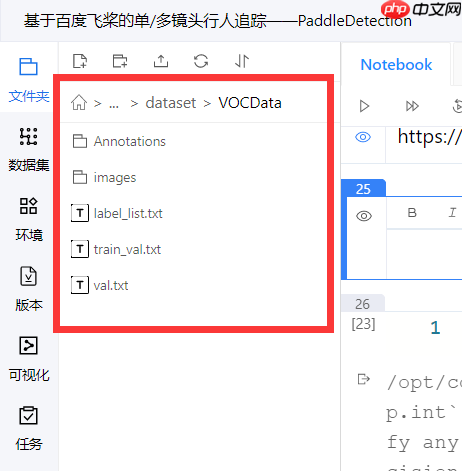

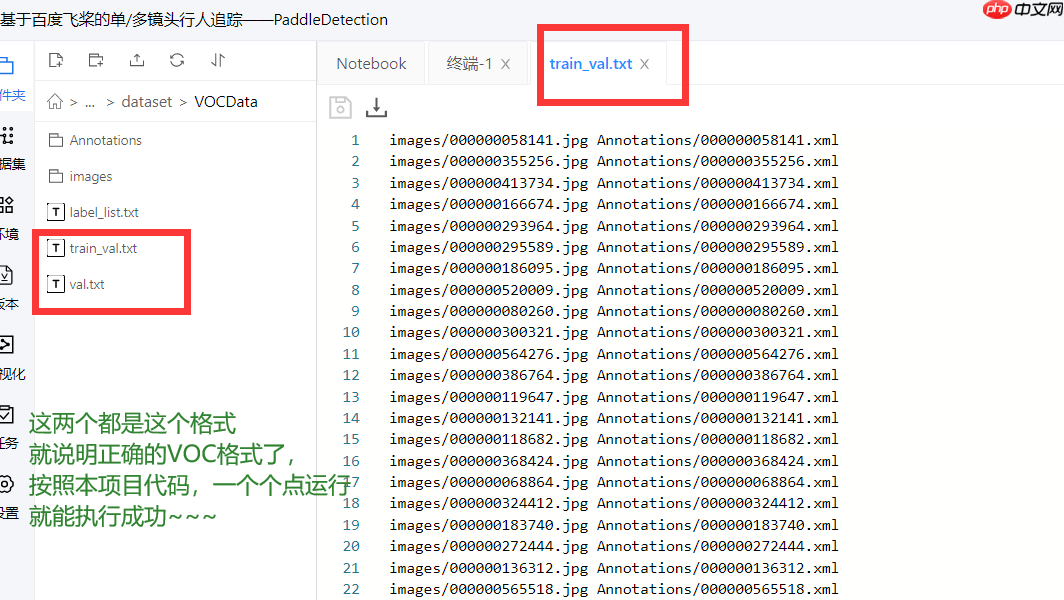

import os
from random import shuffle
from tqdm import tqdm

dataset = 'VOCData/'
train_txt = os.path.join(dataset, 'train_val.txt')
val_txt = os.path.join(dataset, 'val.txt')
lbl_txt = os.path.join(dataset, 'label_list.txt')

classes = [
    "person"
]
with open(lbl_txt, 'w') as f:
    for l in classes:
        f.write(l + '\n')

xml_base = 'Annotations'
img_base = 'images'
xmls = [v for v in os.listdir(os.path.join(dataset, xml_base)) if v.endswith('.xml')]
shuffle(xmls)

# 90% of the files go to training, the remaining 10% to validation
split = int(0.9 * len(xmls))
with open(train_txt, 'w') as f:
    for x in tqdm(xmls[:split]):
        m = x[:-4] + '.jpg'
        xml_path = os.path.join(xml_base, x)
        img_path = os.path.join(img_base, m)
        f.write('{} {}\n'.format(img_path, xml_path))

with open(val_txt, 'w') as f:
    for x in tqdm(xmls[split:]):
        m = x[:-4] + '.jpg'
        xml_path = os.path.join(xml_base, x)
        img_path = os.path.join(img_base, m)
        f.write('{} {}\n'.format(img_path, xml_path))

# Move it into PaddleDetection's dataset/ folder so the paths in the .yml config stay valid
!mv -f VOCData/ dataset/
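The split above calls `shuffle()` without a seed, so every run produces a different train/val partition. If you want the partition to be reproducible, one option is a seeded `random.Random`. A minimal sketch under that assumption; the function name `split_pairs` and the default seed are illustrative, and it produces the same `image_path xml_path` lines the cell writes.

```python
import os
import random


def split_pairs(xml_names, ratio=0.9, seed=0, img_base="images", xml_base="Annotations"):
    """Deterministically shuffle XML file names and return (train_lines, val_lines).

    Each line looks like "images/<name>.jpg Annotations/<name>.xml\n".
    """
    names = sorted(xml_names)           # sort first so os.listdir order does not matter
    random.Random(seed).shuffle(names)  # private RNG: same seed gives the same order
    cut = int(ratio * len(names))

    def to_line(x):
        return "{} {}\n".format(os.path.join(img_base, x[:-4] + ".jpg"),
                                os.path.join(xml_base, x))

    return [to_line(x) for x in names[:cut]], [to_line(x) for x in names[cut:]]


if __name__ == "__main__":
    demo = ["%012d.xml" % i for i in range(10)]
    train, val = split_pairs(demo)
    print(len(train), len(val))
```

Sorting before shuffling matters because `os.listdir` does not guarantee any order, so the same seed alone would not be enough.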

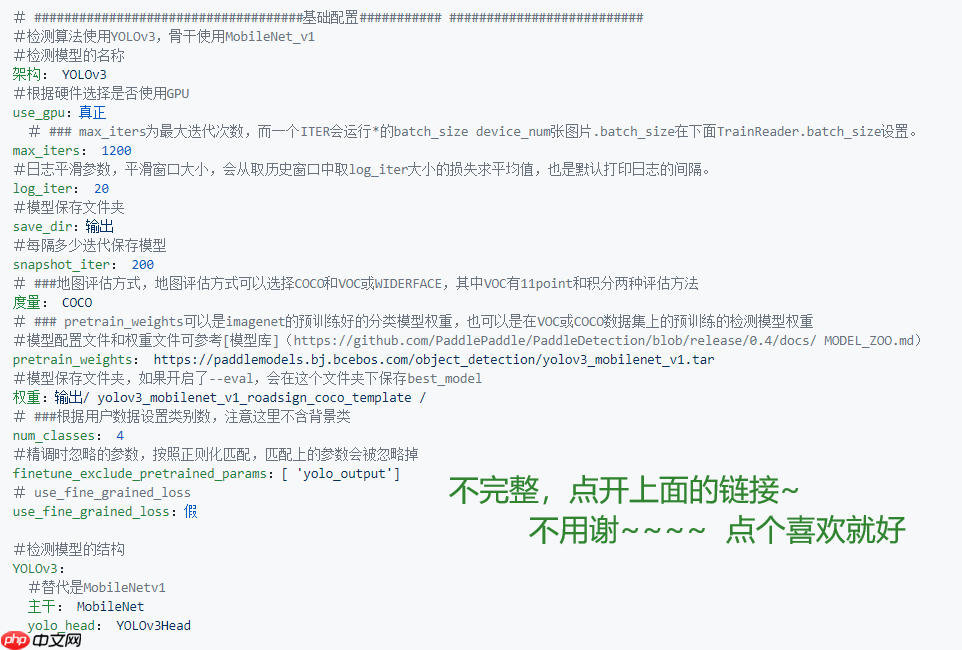

# Once the data is in VOC format, start training; enter this directory so the paths in the yml config resolve correctly
%cd work/PaddleDetection-release-2.0-rc/

# Set up the PaddleDetection environment
!pip install -r /home/aistudio/work/PaddleDetection-release-2.0-rc/requirements.txt

# First training run. Once you have a good model, use the resume-from-checkpoint command in the next cell instead of this one (read these comments first)
!python tools/train.py -c configs/ppyolo/ppyolo_voc_Mymobilenet.yml --use_vdl True --eval -o use_gpu=true

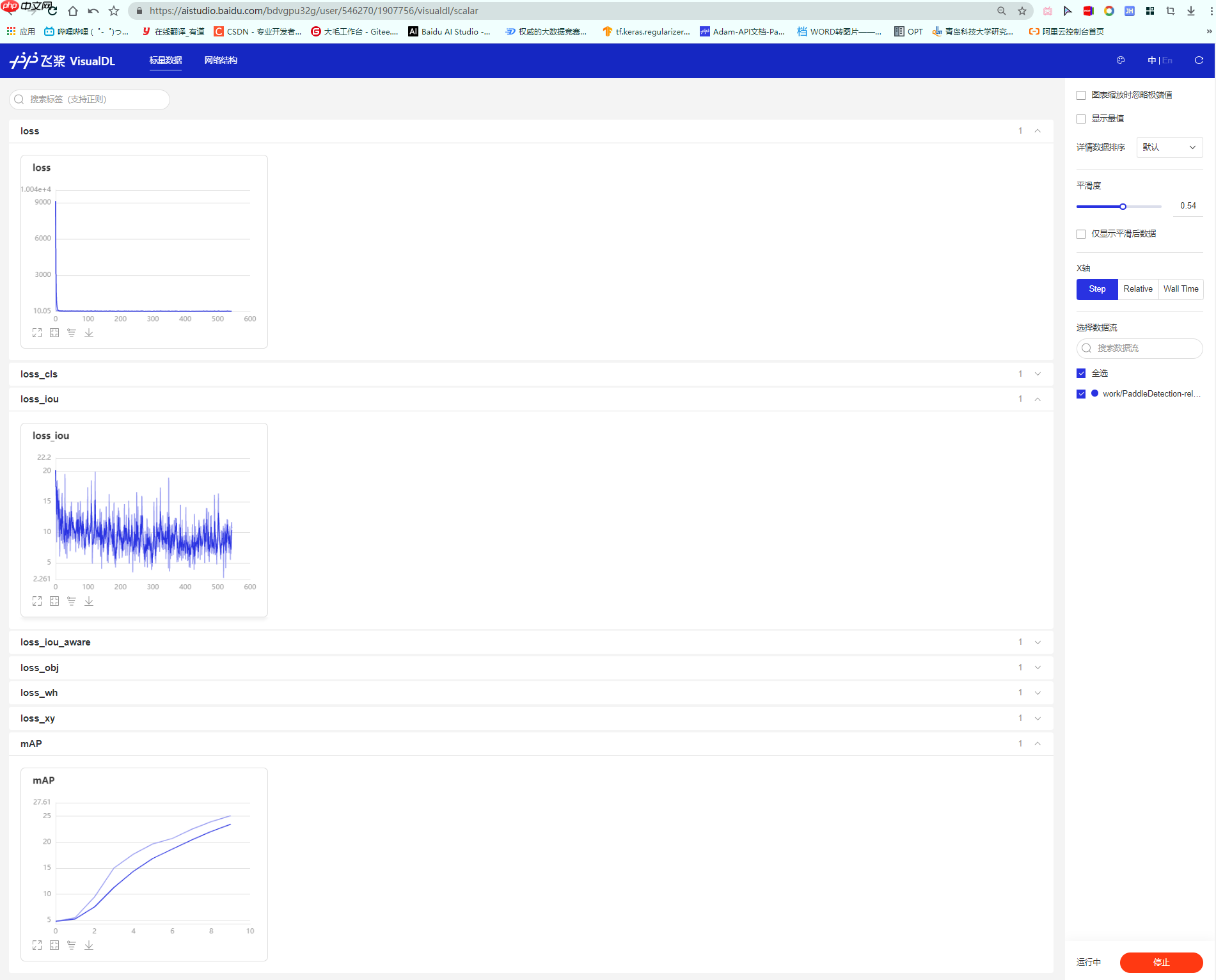

To view the training process in VisualDL, follow these steps:

%cd /home/aistudio/work/PaddleDetection-release-2.0-rc/

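# Resume training with the parameters saved at a checkpoint (here iteration 8000), so you don't start over from iteration 1; point -r at your own best checkpoint
!python -u tools/train.py -c configs/ppyolo/ppyolo_voc_Mymobilenet.yml -r output/ppyolo_voc_Mymobilenet/8000 --use_vdl True --eval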
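The `-r` flag takes a checkpoint path without its file extension (here `output/ppyolo_voc_Mymobilenet/8000`), and the paths in this notebook suggest that checkpoints are saved as `<iteration>.pdparams` (for example `62000.pdparams`). To avoid typing the iteration by hand, a small helper can pick the newest one. A minimal sketch assuming that naming; `latest_iteration` and `latest_checkpoint` are hypothetical helpers, not part of PaddleDetection.

```python
import os
import re


def latest_iteration(filenames):
    """Return the largest N among names of the form '<N>.pdparams', or None.

    Non-numeric names such as 'model_final.pdparams' are ignored.
    """
    matches = (re.fullmatch(r"(\d+)\.pdparams", n) for n in filenames)
    iters = [int(m.group(1)) for m in matches if m]
    return max(iters) if iters else None


def latest_checkpoint(save_dir):
    """Return '<save_dir>/<N>' (no extension, the form passed to -r), or None."""
    if not os.path.isdir(save_dir):
        return None
    it = latest_iteration(os.listdir(save_dir))
    return None if it is None else os.path.join(save_dir, str(it))


if __name__ == "__main__":
    print(latest_checkpoint("output/ppyolo_voc_Mymobilenet"))
```

The printed path can then be pasted after `-r`. Note that the newest checkpoint is not necessarily the best one, so check the eval mAP before resuming.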

# Create a Test folder in the top-level directory and put the images you want to run inference on in it
!python tools/infer.py -c configs/ppyolo/ppyolo_voc_Mymobilenet.yml -o weights=output/ppyolo_voc_Mymobilenet/62000.pdmodel --infer_img=../../Test/113.jpg

%cd /home/aistudio/work/

# Cloning Paddle-Lite is sometimes fast and sometimes very slow (downloads as slow as 11 KB/s); not recommended unless your connection is fast
!git clone https://gitee.com/paddlepaddle/paddle-lite.git

# Rename it to a name I'm more used to
!mv paddle-lite/ Paddle-lite

%cd Paddle-lite/

# Build the opt tool; the first build takes a while, so please be patient
!./lite/tools/build.sh build_optimize_tool

%cd /home/aistudio/work/PaddleDetection-release-2.0-rc/

# Export the inference model
!python tools/export_model.py -c configs/ppyolo/ppyolo_voc_Mymobilenet.yml -o weights=output/ppyolo_voc_Mymobilenet/62000.pdparams

# Install the PaddleLite dependency
!pip install paddlelite

Successfully installed paddlelite-2.8

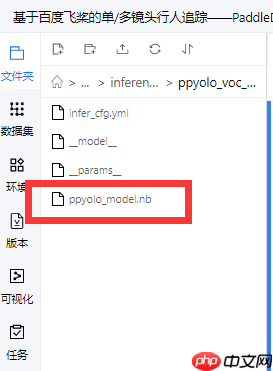

# Prepare the Paddle Lite deployment model
!paddle_lite_opt \
    --model_file=output/inference_model/ppyolo_voc_Mymobilenet/__model__ \
    --param_file=output/inference_model/ppyolo_voc_Mymobilenet/__params__ \
    --optimize_out=output/inference_model/ppyolo_voc_Mymobilenet/ppyolo_model \
    --optimize_out_type=naive_buffer \
    --valid_targets=arm

# The command below prints every operator in the ppyolo_voc model and checks whether Paddle-Lite supports the model on the hardware given by valid_targets
!paddle_lite_opt --print_model_ops=true --model_dir=output/inference_model/ppyolo_voc_Mymobilenet --valid_targets=arm

%cd /home/aistudio


# Fetch deep_sort_paddle (GitHub is slow here, so the Gitee mirror is used)
#!git clone https://github.com/Wei-JL/deep_sort_paddle
!git clone https://gitee.com/weidamao/deep_sort_paddle

!mkdir deep_sort_paddle/model/detection
!mkdir deep_sort_paddle/model/embedding

# Copy your trained infer_cfg.yml, __model__ and __params__ into deep_sort_paddle
%cd ~/work/PaddleDetection-release-2.0-rc/
!cp output/inference_model/ppyolo_voc_Mymobilenet/infer_cfg.yml /home/aistudio/deep_sort_paddle/model/detection
!cp output/inference_model/ppyolo_voc_Mymobilenet/__model__ /home/aistudio/deep_sort_paddle/model/detection
!cp output/inference_model/ppyolo_voc_Mymobilenet/__params__ /home/aistudio/deep_sort_paddle/model/detection


%cd /home/aistudio/deep_sort_paddle/


# 使用测试视频进行预测推理# 更多参数设置请参考README和项目源码!python main.py \

--video_path ~/Test/PETS09-S2L1-raw.mp4 \

--save_dir output \

--threshold 0.2 \

--use_gpuInference: 26.503801345825195 ms per batch image [[181 152 208 221 3] [261 158 292 237 25] [482 170 510 242 27] [374 146 400 214 38] [163 330 214 451 42] [524 156 553 231 43] [462 247 500 346 48]] Inference: 30.849456787109375 ms per batch image [[173 153 201 221 3] [269 156 301 239 25] [484 167 513 240 27] [382 145 408 214 38] [152 330 202 449 42] [514 154 543 230 43] [448 241 486 342 48]] Inference: 23.58222007751465 ms per batch image [[168 155 197 223 3] [276 157 308 240 25] [486 166 514 239 27] [388 146 414 213 38] [145 329 195 448 42] [509 153 538 231 43] [437 239 475 340 48]] Inference: 24.28293228149414 ms per batch image [[164 155 194 224 3] [281 157 313 240 25] [487 165 515 237 27] [393 146 419 213 38] [141 327 192 446 42] [505 154 534 232 43] [430 240 468 338 48]] Inference: 23.044347763061523 ms per batch image [[160 155 189 223 3] [288 158 319 240 25] [492 162 521 237 27] [400 145 425 212 38] [130 322 181 442 42] [500 153 529 232 43] [419 238 457 335 48]] Inference: 23.487567901611328 ms per batch image [[157 155 186 225 3] [291 159 323 241 25] [498 162 526 235 27] [404 144 429 207 38] [122 319 175 442 42] [406 240 440 328 48]] Inference: 23.191452026367188 ms per batch image [[154 157 183 226 3] [297 157 329 241 25] [503 162 530 234 27] [407 144 431 202 38] [119 318 172 442 42] [484 152 511 227 43] [398 239 432 326 48]] Inference: 20.77198028564453 ms per batch image [[151 157 180 226 3] [301 157 332 242 25] [506 162 533 234 27] [413 143 436 201 38] [118 318 170 442 42] [476 152 504 226 43] [390 231 425 323 48]] Inference: 20.093441009521484 ms per batch image [[148 158 177 228 3] [308 160 339 242 25] [513 163 539 232 27] [116 316 166 436 42] [469 154 496 226 43] [375 228 412 322 48]] Inference: 20.197153091430664 ms per batch image [[146 159 176 229 3] [312 161 343 242 25] [516 162 542 232 27] [116 313 164 430 42] [462 153 491 225 43] [367 226 405 320 48]] Inference: 96.466064453125 ms per batch image [[146 159 176 230 3] [316 161 347 242 
25] [519 160 545 229 27] [113 309 161 425 42] [456 151 484 225 43] [359 223 397 318 48]] Inference: 20.709514617919922 ms per batch image [[148 160 178 231 3] [323 161 356 246 25] [524 159 550 228 27] [112 307 161 425 42] [447 151 475 224 43] [348 223 385 315 48]] Inference: 25.289297103881836 ms per batch image [[150 160 180 231 3] [329 164 361 246 25] [526 158 552 227 27] [112 304 160 421 42] [439 146 469 224 43] [340 222 377 313 48]] Inference: 20.5080509185791 ms per batch image [[151 161 182 233 3] [334 164 366 247 25] [528 158 554 227 27] [109 302 158 420 42] [436 142 468 225 43] [323 179 373 310 48]] Inference: 20.06220817565918 ms per batch image [[153 162 186 236 3] [529 158 555 227 27] [108 300 157 418 42] [437 140 467 217 43] [317 166 370 309 48]] Inference: 20.058631896972656 ms per batch image [[157 163 189 237 3] [339 166 370 249 25] [529 158 555 226 27] [447 138 473 206 38] [107 297 156 415 42] [431 139 461 216 43] [316 195 358 306 48] [732 222 757 314 51]] Inference: 20.047426223754883 ms per batch image [[159 163 192 237 3] [342 164 373 250 25] [529 159 555 226 27] [452 138 478 204 38] [106 295 155 416 42] [315 208 351 303 48] [727 220 753 312 51]] Inference: 20.34783363342285 ms per batch image [[161 162 194 240 3] [347 165 377 250 25] [527 160 553 227 27] [456 137 482 203 38] [105 294 154 414 42] [308 213 343 301 48] [721 218 748 312 51]] Inference: 20.10798454284668 ms per batch image [[167 163 198 239 3] [352 167 382 251 25] [525 162 551 228 27] [462 137 487 202 38] [104 290 151 405 42] [298 211 332 299 48] [710 216 737 309 51]] Inference: 20.17068862915039 ms per batch image [[172 164 203 238 3] [357 169 386 251 25] [522 162 549 229 27] [465 136 491 202 38] [102 289 147 399 42] [290 211 325 299 48] [699 214 728 308 51]] Inference: 20.009517669677734 ms per batch image [[176 164 207 238 3] [361 170 391 255 25] [521 163 548 230 27] [468 135 495 202 38] [100 287 145 395 42] [282 209 317 296 48] [693 212 724 308 51]] Inference: 105.47232627868652 
ms per batch image [[180 165 211 239 3] [364 169 396 257 25] [520 164 546 231 27] [473 134 499 200 38] [100 286 144 392 42] [276 207 309 294 48] [687 212 718 306 51]] Inference: 26.230573654174805 ms per batch image [[187 165 218 240 3] [371 173 402 259 25] [517 166 544 235 27] [479 135 504 199 38] [ 95 282 139 391 42] [266 210 299 290 48] [678 212 709 305 51]] Inference: 23.80514144897461 ms per batch image [[193 166 224 242 3] [375 174 406 258 25] [515 166 542 238 27] [482 134 508 199 38] [ 92 280 137 389 42] [258 207 291 288 48] [669 212 700 304 51]] Inference: 102.69880294799805 ms per batch image [[197 167 229 243 3] [380 174 412 259 25] [513 167 541 238 27] [484 133 509 198 38] [ 90 278 134 384 42] [251 205 284 287 48] [662 213 694 305 51]] Inference: 22.726774215698242 ms per batch image [[200 167 232 245 3] [385 175 417 260 25] [510 171 537 241 27] [489 131 514 195 38] [ 86 276 130 381 42] [242 205 274 285 48] [654 213 686 304 51]] Inference: 22.423982620239258 ms per batch image [[204 167 235 244 3] [506 173 533 243 27] [494 130 519 195 38] [ 84 274 128 378 42] [233 204 265 282 48] [647 210 679 301 51]] Inference: 21.02351188659668 ms per batch image [[207 168 236 242 3] [503 174 530 243 27] [ 81 272 125 375 42] [224 203 256 280 48] [641 208 673 301 51]] Inference: 20.467519760131836 ms per batch image [[209 169 238 242 3] [495 144 534 246 27] [ 78 270 121 372 42] [362 151 391 227 43] [220 199 253 278 48] [636 208 668 300 51] [392 177 427 262 55]] Inference: 20.43890953063965 ms per batch image [[495 160 528 247 27] [506 128 531 192 38] [ 73 270 115 369 42] [356 154 385 229 43] [211 191 246 275 48] [627 207 660 299 51] [393 177 428 263 55]] Inference: 20.8282470703125 ms per batch image [[219 169 250 245 3] [495 172 524 249 27] [509 128 534 192 38] [ 69 267 111 366 42] [351 154 379 228 43] [203 193 237 274 48] [622 207 654 296 51]] Inference: 29.40988540649414 ms per batch image [[223 169 255 248 3] [494 176 522 250 27] [512 127 537 192 38] [ 65 264 107 
366 42] [346 155 374 229 43] [198 194 231 273 48] [616 206 649 295 51]] Inference: 28.705835342407227 ms per batch image [[228 172 261 252 3] [489 176 518 253 27] [518 127 543 192 38] [ 58 261 101 362 42] [338 157 366 230 43] [190 193 222 271 48] [606 206 640 295 51]] Inference: 21.220684051513672 ms per batch image [[232 174 264 253 3] [486 177 516 254 27] [522 125 547 191 38] [ 56 260 98 359 42] [333 158 361 231 43] [182 192 214 270 48] [599 205 633 294 51]] Inference: 20.402908325195312 ms per batch image [[234 174 266 254 3] [484 179 513 255 27] [524 124 549 190 38] [ 54 260 96 358 42] [329 158 358 232 43] [177 190 209 268 48] [595 205 629 293 51]] Inference: 20.311832427978516 ms per batch image [[237 175 268 253 3] [481 180 511 258 27] [529 125 554 189 38] [ 54 259 96 358 42] [325 158 354 233 43] [172 189 205 266 48] [591 204 625 292 51]] Inference: 102.32686996459961 ms per batch image [[242 176 272 254 3] [478 183 508 260 27] [535 125 560 188 38] [ 52 256 94 356 42] [317 159 347 235 43] [163 188 193 259 48] [584 200 618 290 51]] Inference: 20.763397216796875 ms per batch image [[245 177 275 256 3] [439 188 473 281 25] [478 183 507 261 27] [539 125 564 188 38] [ 51 252 92 351 42] [312 160 342 238 43] [157 186 187 258 48] [579 199 612 289 51]] Inference: 26.246070861816406 ms per batch image [[249 178 279 257 3] [444 190 479 284 25] [479 184 508 262 27] [543 124 568 187 38] [ 51 251 91 348 42] [308 161 339 239 43] [151 185 182 258 48] [573 199 607 288 51]] Inference: 20.293474197387695 ms per batch image [[253 179 285 259 3] [451 191 486 286 25] [482 189 511 265 27] [548 122 574 187 38] [ 52 250 91 348 42] [303 162 334 241 43] [142 184 172 259 48] [567 199 601 288 51]] Inference: 20.730018615722656 ms per batch image [[256 180 289 260 3] [457 193 491 287 25] [487 191 515 266 27] [552 122 577 186 38] [ 55 250 93 344 42] [300 163 330 242 43] [136 183 166 257 48] [563 198 596 287 51]] Inference: 20.232677459716797 ms per batch image [[260 181 293 262 3] [460 196 
495 291 25] [491 190 520 267 27] [556 123 580 185 38] [ 56 247 94 340 42] [298 165 328 242 43] [130 184 161 256 48] [558 197 591 285 51]] Inference: 20.310640335083008 ms per batch image [[263 182 297 263 3] [466 197 500 293 25] [499 192 527 267 27] [560 121 585 185 38] [ 59 245 96 336 42] [294 169 324 245 43] [120 182 150 253 48] [551 198 584 287 51]] Inference: 20.152807235717773 ms per batch image [[268 183 301 263 3] [472 198 505 292 25] [504 192 533 268 27] [564 121 588 184 38] [ 62 245 98 334 42] [292 170 321 244 43] [114 181 144 252 48] [547 199 580 287 51]] Inference: 20.08342742919922 ms per batch image [[272 178 307 264 3] [478 199 510 292 25] [510 193 538 268 27] [567 119 591 182 38] [ 64 244 100 333 42] [290 171 320 245 43] [109 181 139 252 48] [543 199 576 287 51]] Inference: 20.767688751220703 ms per batch image [[278 173 315 266 3] [484 202 517 296 25] [515 191 545 271 27] [572 120 596 183 38] [ 71 241 106 329 42] [286 172 315 245 43] [100 179 130 250 48] [536 197 569 283 51]] Inference: 21.434307098388672 ms per batch image [[280 172 318 267 3] [490 204 523 299 25] [518 192 548 272 27] [576 122 600 182 38] [ 76 239 112 328 42] [ 94 177 124 250 48] [530 193 563 281 51]] Inference: 20.697832107543945 ms per batch image [[282 173 320 269 3] [495 202 528 299 25] [579 121 603 181 38] [ 79 238 115 326 42] [ 89 178 119 248 48] [528 193 561 282 51]] Inference: 20.52593231201172 ms per batch image [[282 174 319 266 3] [500 200 535 301 25] [582 119 607 180 38] [ 81 236 117 325 42] [ 83 174 113 247 48] [530 192 562 280 51]] Inference: 20.498037338256836 ms per batch image [[292 184 326 270 3] [504 197 541 304 25] [585 118 610 180 38] [ 85 238 121 323 42] [274 174 308 259 43] [ 77 173 106 245 48] [535 191 565 273 51]] Inference: 20.410537719726562 ms per batch image [[299 189 333 273 3] [505 197 543 306 25] [588 118 613 179 38] [ 88 234 122 318 42] [273 176 305 257 43] [ 70 174 99 243 48] [544 188 572 267 51]] Inference: 20.471572875976562 ms per batch image 
[[303 190 336 275 3] [510 198 549 308 25] [590 117 615 180 38] [ 90 233 124 315 42] [274 178 305 259 43] [ 63 174 92 243 48] [550 187 579 265 51]] Inference: 101.5472412109375 ms per batch image [[306 189 339 272 3] [522 203 556 304 25] [592 116 617 179 38] [ 95 233 129 314 42] [274 181 305 263 43] [ 59 174 87 242 48] [556 187 585 264 51]] Inference: 101.1197566986084 ms per batch image [[309 191 342 273 3] [528 205 562 305 25] [595 113 621 178 38] [ 97 227 133 314 42] [273 182 305 265 43] [ 53 173 82 243 48] [561 188 590 265 51] [487 193 521 276 59]] Inference: 20.56598663330078 ms per batch image [[313 193 346 275 3] [533 209 567 306 25] [597 113 623 177 38] [100 227 136 313 42] [272 183 304 265 43] [ 49 172 78 242 48] [564 188 594 263 51] [482 191 516 273 59]] Inference: 20.633459091186523 ms per batch image [[317 194 351 276 3] [539 212 573 308 25] [599 113 625 177 38] [105 226 139 310 42] [272 184 304 265 43] [ 43 173 72 240 48] [567 186 596 262 51] [476 188 511 272 59]] Inference: 20.819902420043945 ms per batch image [[322 195 356 278 3] [542 214 577 310 25] [602 113 628 176 38] [108 224 142 306 42] [271 183 305 267 43] [ 38 172 67 239 48] [568 185 597 261 51] [470 187 505 272 59]] Inference: 20.56407928466797 ms per batch image [[324 195 360 279 3] [544 211 582 311 25] [604 112 630 176 38] [110 223 145 306 42] [271 186 304 269 43] [ 33 170 62 239 48] [569 185 597 259 51] [463 186 498 271 59]] Inference: 20.871877670288086 ms per batch image [[328 197 364 281 3] [550 210 589 312 25] [607 113 633 176 38] [112 222 148 306 42] [270 187 304 271 43] [ 30 169 58 239 48] [570 184 598 257 51] [456 187 491 270 59]] Inference: 20.78557014465332 ms per batch image [[332 199 369 286 3] [559 209 599 315 25] [613 112 639 174 38] [116 219 151 303 42] [269 188 304 273 43] [ 23 169 51 237 48] [450 189 484 269 59]] Inference: 32.35983848571777 ms per batch image [[335 200 372 287 3] [560 195 604 317 25] [617 113 641 174 38] [119 217 154 301 42] [268 189 303 275 43] [ 17 168 
45 236 48] [442 187 478 269 59]] Inference: 40.311336517333984 ms per batch image [[338 201 375 287 3] [568 207 609 317 25] [621 114 646 173 38] [123 216 155 297 42] [265 192 300 277 43] [ 14 168 41 234 48] [557 181 586 255 51] [435 182 471 268 59]] Inference: 32.13024139404297 ms per batch image [[341 200 378 289 3] [575 215 612 316 25] [625 114 650 174 38] [128 216 160 293 42] [263 196 298 280 43] [ 10 169 37 233 48] [553 181 583 258 51] [430 181 466 267 59]] Inference: 30.70974349975586 ms per batch image [[348 204 384 290 3] [586 216 623 318 25] [629 113 654 173 38] [134 213 166 292 42] [258 198 293 282 43] [ 1 167 29 232 48] [548 180 577 256 51] [425 180 461 267 59]] Inference: 29.515981674194336 ms per batch image [[352 203 389 291 3] [593 217 629 318 25] [632 112 656 172 38] [139 211 171 290 42] [254 199 290 283 43] [ -2 166 25 233 48] [544 179 573 253 51]] Inference: 29.435396194458008 ms per batch image [[358 204 396 292 3] [599 218 635 317 25] [636 112 661 172 38] [146 210 177 289 42] [250 200 286 286 43] [ -4 166 22 233 48] [541 177 569 252 51]] Inference: 30.087947845458984 ms per batch image [[366 205 404 295 3] [604 218 642 321 25] [641 112 666 172 38] [154 208 185 287 42] [247 204 283 290 43] [ -9 166 17 232 48] [533 176 561 251 51]] Inference: 28.652191162109375 ms per batch image [[370 207 408 296 3] [611 217 649 319 25] [645 112 671 172 38] [159 207 190 286 42] [245 206 281 292 43] [528 177 556 250 51]] Inference: 29.4339656829834 ms per batch image [[377 207 415 296 3] [618 216 655 318 25] [647 112 673 172 38] [162 206 193 284 42] [242 206 279 293 43] [523 177 552 250 51]] Inference: 30.387163162231445 ms per batch image [[376 188 423 299 3] [628 215 664 318 25] [653 111 680 173 38] [169 200 201 281 42] [239 207 276 296 43] [516 174 545 247 51]] Inference: 29.33812141418457 ms per batch image [[376 182 424 299 3] [634 214 670 316 25] [657 111 684 173 38] [176 199 208 279 42] [237 210 275 298 43] [511 174 539 245 51]] Inference: 31.136512756347656 
ms per batch image [[388 199 429 299 3] [637 214 674 316 25] [661 113 687 174 38] [182 205 214 281 42] [235 213 272 298 43] [506 173 534 246 51]] Inference: 31.276702880859375 ms per batch image [[395 209 434 302 3] [638 214 676 317 25] [663 114 689 175 38] [184 204 215 277 42] [233 213 270 299 43] [503 175 531 248 51]] Inference: 30.541419982910156 ms per batch image [[400 210 438 303 3] [644 212 683 316 25] [667 115 693 175 38] [194 203 225 276 42] [229 214 268 303 43] [497 174 524 245 51] [358 179 389 258 61]] Inference: 40.46297073364258 ms per batch image [[648 210 686 314 25] [671 114 696 173 38] [199 204 231 280 42] [227 216 266 306 43] [493 173 521 244 51] [352 177 383 256 61]] Inference: 34.00015830993652 ms per batch image [[651 209 689 313 25] [674 114 700 173 38] [206 201 238 277 42] [228 222 266 309 43] [486 174 514 245 51] [341 177 373 256 61]] Inference: 32.26327896118164 ms per batch image [[653 209 691 313 25] [211 202 242 273 42] [230 225 267 311 43] [481 174 510 245 51] [337 177 369 255 61]] Inference: 31.506061553955078 ms per batch image [[430 224 466 309 3] [653 207 691 312 25] [214 199 244 270 42] [231 225 270 314 43] [477 174 506 245 51] [332 176 365 255 61]] Inference: 31.22115135192871 ms per batch image [[433 222 469 308 3] [654 205 691 308 25] [215 193 247 271 42] [233 226 272 316 43] [472 174 501 245 51] [327 176 360 255 61]] Inference: 34.34157371520996 ms per batch image [[442 220 478 308 3] [654 205 689 304 25] [219 193 249 270 42] [234 230 274 320 43] [467 177 495 247 51] [320 175 352 254 61]] Inference: 33.80727767944336 ms per batch image [[447 219 485 311 3] [653 205 687 301 25] [222 195 252 270 42] [235 233 277 327 43] [462 179 490 248 51] [313 176 345 254 61]] Inference: 33.4627628326416 ms per batch image [[451 219 489 313 3] [651 203 685 302 25] [227 194 256 269 42] [235 233 278 329 43] [456 179 483 243 51] [309 177 341 253 61]] Inference: 32.46498107910156 ms per batch image [[445 190 494 316 3] [649 201 683 300 25] [230 211 
282 332 43] [452 180 478 242 51] [305 174 337 252 61]] Inference: 33.273935317993164 ms per batch image [[455 207 496 314 3] [648 200 682 300 25] [684 119 711 185 38] [229 200 285 332 43] [445 178 475 249 51] [300 174 332 252 61]] Inference: 34.48176383972168 ms per batch image [[463 216 501 316 3] [646 198 678 295 25] [685 121 710 184 38] [231 193 288 333 43] [441 179 470 251 51] [295 174 326 252 61]] Inference: 33.56432914733887 ms per batch image [[469 219 506 320 3] [644 197 675 293 25] [685 121 711 185 38] [240 212 290 334 43] [436 180 466 253 51] [290 174 322 251 61]] Inference: 33.568382263183594 ms per batch image [[476 223 513 323 3] [639 196 670 292 25] [686 122 711 186 38] [251 228 294 333 43] [427 177 459 255 51] [284 173 314 249 61]] Inference: 32.984256744384766 ms per batch image [[481 225 516 324 3] [635 197 666 291 25] [685 123 711 187 38] [252 229 295 335 43] [422 177 455 255 51] [279 173 310 253 61]] Inference: 34.577369689941406 ms per batch image [[487 225 521 324 3] [633 197 664 291 25] [684 123 710 189 38] [275 173 307 253 61]] Inference: 34.11746025085449 ms per batch image [[495 227 530 325 3] [628 197 659 290 25] [685 123 711 190 38] [274 236 314 334 43] [269 172 302 254 61]] Inference: 21.976709365844727 ms per batch image [[501 229 536 326 3] [624 197 655 291 25] [282 242 322 336 43] [266 172 300 255 61]] Inference: 22.08232879638672 ms per batch image [[506 229 541 327 3] [620 196 651 291 25] [286 243 325 338 43] [392 186 425 268 51] [262 170 296 253 61]] Inference: 22.77517318725586 ms per batch image [[518 231 554 329 3] [615 193 646 288 25] [296 245 336 342 43] [383 191 416 268 51] [259 170 292 249 61]] Inference: 32.54365921020508 ms per batch image [[524 234 561 333 3] [612 190 643 289 25] [680 128 705 193 38] [302 244 341 342 43] [375 192 408 268 51] [255 170 287 248 61]] Inference: 23.753643035888672 ms per batch image [[529 235 567 337 3] [609 194 640 290 25] [678 129 703 193 38] [310 245 349 343 43] [367 192 399 268 51] [253 
```
171 284 246 61]]
Inference: 22.775650024414062 ms per batch image [[531 236 569 337 3] [607 194 638 292 25] [677 129 703 194 38] [315 247 354 345 43] [359 191 391 269 51] [252 171 282 245 61] [291 179 315 251 64]]
Inference: 33.826351165771484 ms per batch image [[538 237 575 339 3] [605 194 636 292 25] [673 130 699 197 38] [321 248 361 348 43] [351 196 383 271 51] [248 168 278 245 61] [296 179 320 249 64]]
Inference: 22.206783294677734 ms per batch image [[543 239 580 340 3] [604 193 635 293 25] [671 131 697 198 38] [328 248 370 350 43] [343 197 374 270 51] [246 169 275 244 61] [299 175 324 247 64]]
Inference: 21.520137786865234 ms per batch image [[547 240 584 341 3] [604 194 635 293 25] [670 132 695 198 38] [333 249 376 353 43] [334 198 363 265 51] [243 169 272 243 61] [301 171 327 246 64]]
Inference: 21.40021324157715 ms per batch image [[555 242 591 341 3] [606 195 637 294 25] [667 132 692 198 38] [339 254 383 358 43] [320 196 353 272 51] [239 168 268 242 61] [306 171 331 243 64]]
Inference: 21.796226501464844 ms per batch image [[561 243 597 342 3] [607 196 639 294 25] [665 133 690 198 38] [344 257 389 362 43] [309 179 351 277 51] [236 166 265 242 61] [309 170 334 242 64]]
Inference: 21.970748901367188 ms per batch image [[565 244 602 344 3] [610 199 641 293 25] [664 134 689 200 38] [348 259 393 365 43] [304 173 348 277 51] [233 165 262 242 61]]
Inference: 21.302223205566406 ms per batch image [[570 246 606 346 3] [612 200 642 293 25] [301 198 329 275 27] [662 135 687 201 38] [351 260 396 368 43] [296 171 341 279 51] [230 164 260 242 61] [322 169 347 242 64]]
Inference: 21.439313888549805 ms per batch image [[574 247 612 348 3] [613 204 645 301 25] [290 198 320 274 27] [661 136 686 201 38] [362 262 406 368 43] [227 164 256 242 61] [328 168 353 242 64]]
Inference: 21.463871002197266 ms per batch image [[577 249 616 351 3] [613 207 646 308 25] [284 198 316 274 27] [658 135 684 205 38] [368 263 412 369 43] [224 163 253 240 61] [334 169 359 241 64]]
Inference: 105.08418083190918 ms per batch image [[579 250 619 353 3] [614 209 647 310 25] [277 197 311 273 27] [656 136 682 204 38] [371 263 415 369 43] [223 162 252 239 61] [340 170 365 242 64]]
Inference: 21.198749542236328 ms per batch image [[582 253 624 359 3] [616 210 650 312 25] [267 196 300 273 27] [653 137 679 205 38] [383 263 426 369 43] [222 161 250 237 61] [347 170 372 239 64]]
Inference: 20.952701568603516 ms per batch image [[587 255 630 360 3] [618 211 653 315 25] [260 195 293 273 27] [652 137 678 206 38] [391 264 434 369 43] [222 161 250 237 61] [352 168 378 238 64]]
Inference: 20.904541015625 ms per batch image [[590 255 634 362 3] [621 214 655 317 25] [257 195 289 272 27] [650 136 677 207 38] [396 266 440 373 43] [222 161 250 236 61] [356 167 382 238 64]]
Inference: 21.225690841674805 ms per batch image [[595 256 640 367 3] [625 217 659 321 25] [250 194 281 270 27] [649 138 676 210 38] [403 268 447 375 43] [221 158 249 234 61] [363 166 389 235 64]]
Inference: 104.07614707946777 ms per batch image [[598 258 644 370 3] [629 218 663 322 25] [245 194 276 270 27] [649 140 675 212 38] [410 270 454 377 43] [220 158 247 231 61] [367 167 394 234 64]]
Inference: 23.801803588867188 ms per batch image [[600 260 646 371 3] [632 220 667 322 25] [241 192 272 267 27] [650 141 675 211 38] [220 158 247 230 61] [372 166 399 233 64]]
Inference: 21.283626556396484 ms per batch image [[603 261 648 372 3] [634 222 670 328 25] [239 192 269 266 27] [651 142 676 211 38] [420 272 463 380 43] [219 158 245 229 61] [378 165 406 234 64]]
Inference: 42.29116439819336 ms per batch image [[606 260 653 372 3] [636 225 672 334 25] [237 191 267 265 27] [651 142 676 213 38] [428 273 469 377 43] [218 158 244 227 61] [383 164 411 233 64]]
Inference: 30.60293197631836 ms per batch image [[608 262 653 375 3] [639 228 675 337 25] [234 189 265 263 27] [652 143 676 214 38] [436 273 478 379 43] [215 157 241 226 61] [387 165 415 232 64]]
Inference: 30.5631160736084 ms per batch image [[610 264 654 377 3] [641 230 676 338 25] [232 188 262 262 27] [652 143 676 215 38] [438 274 482 381 43] [213 156 239 225 61] [389 164 418 232 64]]
```
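Each record in this log gives the inference time for one frame, followed by a list of boxes. The last value of each row stays constant across frames while the coordinates drift. So each row appears to be `[x1, y1, x2, y2, track_id]`, but that reading is an assumption inferred from the numbers, not something the project documents. Under that assumption, here is a minimal Python sketch that turns the log into per-track trajectories and a latency summary. The helper names `parse_tracking_log` and `summarize` are hypothetical:

```python
import re
import statistics
from collections import defaultdict

# One record: "Inference: <ms> ms per batch image [[x1 y1 x2 y2 id] [...] ...]"
RECORD_RE = re.compile(r"Inference:\s*([\d.]+)\s*ms per batch image\s*(\[\[.*?\]\])", re.S)
# One row inside a record; \s+ also tolerates line breaks inside a row
ROW_RE = re.compile(r"\[\s*(\d+)\s+(\d+)\s+(\d+)\s+(\d+)\s+(\d+)\s*\]")


def parse_tracking_log(text):
    """Return (latencies_ms, tracks); tracks maps the assumed track id
    to a list of (frame_index, (x1, y1, x2, y2)) tuples."""
    latencies = []
    tracks = defaultdict(list)
    for frame_idx, record in enumerate(RECORD_RE.finditer(text)):
        latencies.append(float(record.group(1)))
        for row in ROW_RE.finditer(record.group(2)):
            x1, y1, x2, y2, track_id = map(int, row.groups())
            tracks[track_id].append((frame_idx, (x1, y1, x2, y2)))
    return latencies, dict(tracks)


def summarize(latencies, tracks):
    """Median latency (robust to occasional spikes) and number of frames per track."""
    return statistics.median(latencies), {tid: len(obs) for tid, obs in tracks.items()}
```

The median is used rather than the mean because a few frames in the log above take about 105 ms, while most take about 21 ms.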

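Install Android Studio by following the official installation guide, and download the package that matches your PC's environment. I downloaded it from the Android Studio website using Firefox, which ships with Linux. This project covers installing and configuring Android Studio on 64-bit Ubuntu 18.04. On 64-bit Ubuntu, the official guide also requires a few 32-bit libraries: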

sudo apt-get install libc6:i386 libncurses5:i386 libstdc++6:i386 lib32z1 libbz2-1.0:i386

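Note: the official tutorial does not mention that running Android Studio requires a Java environment. The latest OpenJDK 11 produces errors, so OpenJDK 8 should be installed instead. First confirm the Java version: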

java -version
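Java 8 still reports its version in the legacy `1.8.0_xxx` form, while Java 9 and later print, for example, `11.0.11`. That makes it easy to misread the major version by eye. Here is a small Python sketch that runs `java -version` and extracts the major version. The function names are illustrative and not part of any tool:

```python
import re
import subprocess


def parse_java_major(version_output):
    """Extract the major Java version from `java -version` output.

    Java 8 and earlier report "1.8.0_xxx"; Java 9+ report e.g. "11.0.11" or "17".
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        raise ValueError("no version string found in java output")
    major = int(match.group(1))
    if major == 1 and match.group(2):  # legacy 1.x numbering: 1.8 means Java 8
        major = int(match.group(2))
    return major


def installed_java_major():
    """Run `java -version` and return the major version of the active JVM."""
    # `java -version` prints its banner on stderr rather than stdout
    result = subprocess.run(["java", "-version"], capture_output=True, text=True, check=True)
    return parse_java_major(result.stderr or result.stdout)
```

Once OpenJDK 8 is the active alternative, `installed_java_major()` should return 8.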

Install OpenJDK 8:

sudo apt-get install openjdk-8-jdk

If needed, switch the Java version by entering the number of the corresponding option:

update-alternatives --config java

```
There are 2 choices for the alternative java (providing /usr/bin/java).

  Selection    Path                                            Priority   Status
------------------------------------------------------------
  0            /usr/lib/jvm/java-11-openjdk-amd64/bin/java      1111      auto mode
  1            /usr/lib/jvm/java-11-openjdk-amd64/bin/java      1111      manual mode
* 2            /usr/lib/jvm/java-8-openjdk-amd64/jre/bin/java   1081      manual mode

Press <enter> to keep the current choice[*], or type selection number: 2
```
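The interactive menu can also be skipped. `update-alternatives --list java` prints the registered paths one per line, and `update-alternatives --set java <path>` selects one directly. Below is a hedged Python sketch wrapping those two commands. Matching on `java-8` in the path is an assumption based on the Ubuntu paths shown above, and the helper names are hypothetical:

```python
import subprocess


def find_java8_path(list_output):
    """Pick the Java 8 entry from `update-alternatives --list java` output (one path per line)."""
    for line in list_output.splitlines():
        path = line.strip()
        if "java-8" in path:
            return path
    raise LookupError("no Java 8 alternative registered; install openjdk-8-jdk first")


def set_java_command(path):
    """Command that selects `path` for java non-interactively (needs root)."""
    return ["sudo", "update-alternatives", "--set", "java", path]


def switch_to_java8():
    listing = subprocess.run(["update-alternatives", "--list", "java"],
                             capture_output=True, text=True, check=True).stdout
    subprocess.run(set_java_command(find_java8_path(listing)), check=True)
```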

Deploying the Paddle model additionally requires cmake, ninja, and a few other tools:

```shell
# 1. Install basic software (see the official docs; mind the permissions, this should be run as root)
apt update
apt-get install -y --no-install-recommends \
  gcc g++ git make wget python unzip adb curl

# 2. Install cmake 3.10 or above (see the official docs; run as root)
wget -c https://mms-res.cdn.bcebos.com/cmake-3.10.3-Linux-x86_64.tar.gz && \
  tar xzf cmake-3.10.3-Linux-x86_64.tar.gz && \
  mv cmake-3.10.3-Linux-x86_64 /opt/cmake-3.10 && \
  ln -s /opt/cmake-3.10/bin/cmake /usr/bin/cmake && \
  ln -s /opt/cmake-3.10/bin/ccmake /usr/bin/ccmake

# 3. Install ninja-build
sudo apt-get install ninja-build
```

Reference installation video: the recommended setup procedure on Ubuntu.
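After these steps, it can help to confirm that both tools are on `PATH` and that cmake meets the 3.10 minimum. Here is a minimal Python sketch, assuming the Ubuntu `ninja-build` package provides a `ninja` executable. The function names are hypothetical:

```python
import re
import shutil
import subprocess

MIN_CMAKE = (3, 10)  # minimum version named in the install steps above


def parse_cmake_version(output):
    """Parse the first line of `cmake --version`, e.g. "cmake version 3.10.3"."""
    match = re.search(r"cmake version (\d+)\.(\d+)(?:\.(\d+))?", output)
    if match is None:
        raise ValueError("unrecognized `cmake --version` output")
    return tuple(int(part) if part else 0 for part in match.groups())


def check_build_tools():
    """Return a list of problems with cmake/ninja; an empty list means both look usable."""
    problems = [f"{tool} not found on PATH" for tool in ("cmake", "ninja")
                if shutil.which(tool) is None]
    if shutil.which("cmake") is not None:
        output = subprocess.run(["cmake", "--version"], capture_output=True, text=True).stdout
        version = parse_cmake_version(output)
        if version[:2] < MIN_CMAKE:
            problems.append("cmake %d.%d.%d is older than 3.10" % version)
    return problems
```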

Note: if the error `unable to access android sdk add-on list` appears during installation, click Cancel to skip it.

Download the Paddle-Lite-Demo project to your PC:

git clone https://gitee.com/paddlepaddle/Paddle-Lite-Demo.git

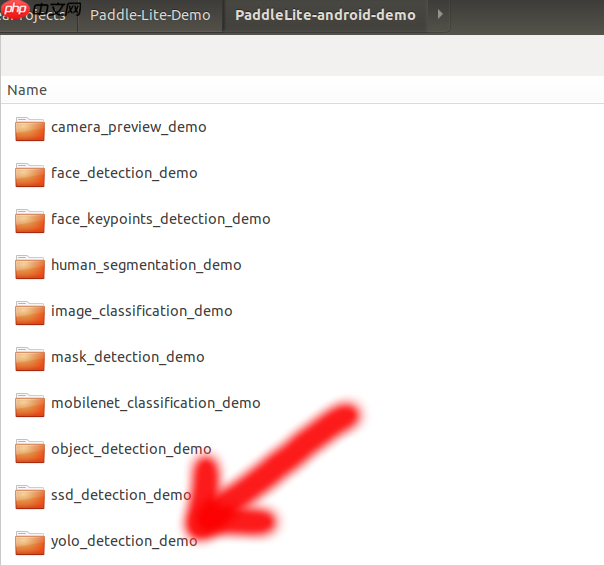

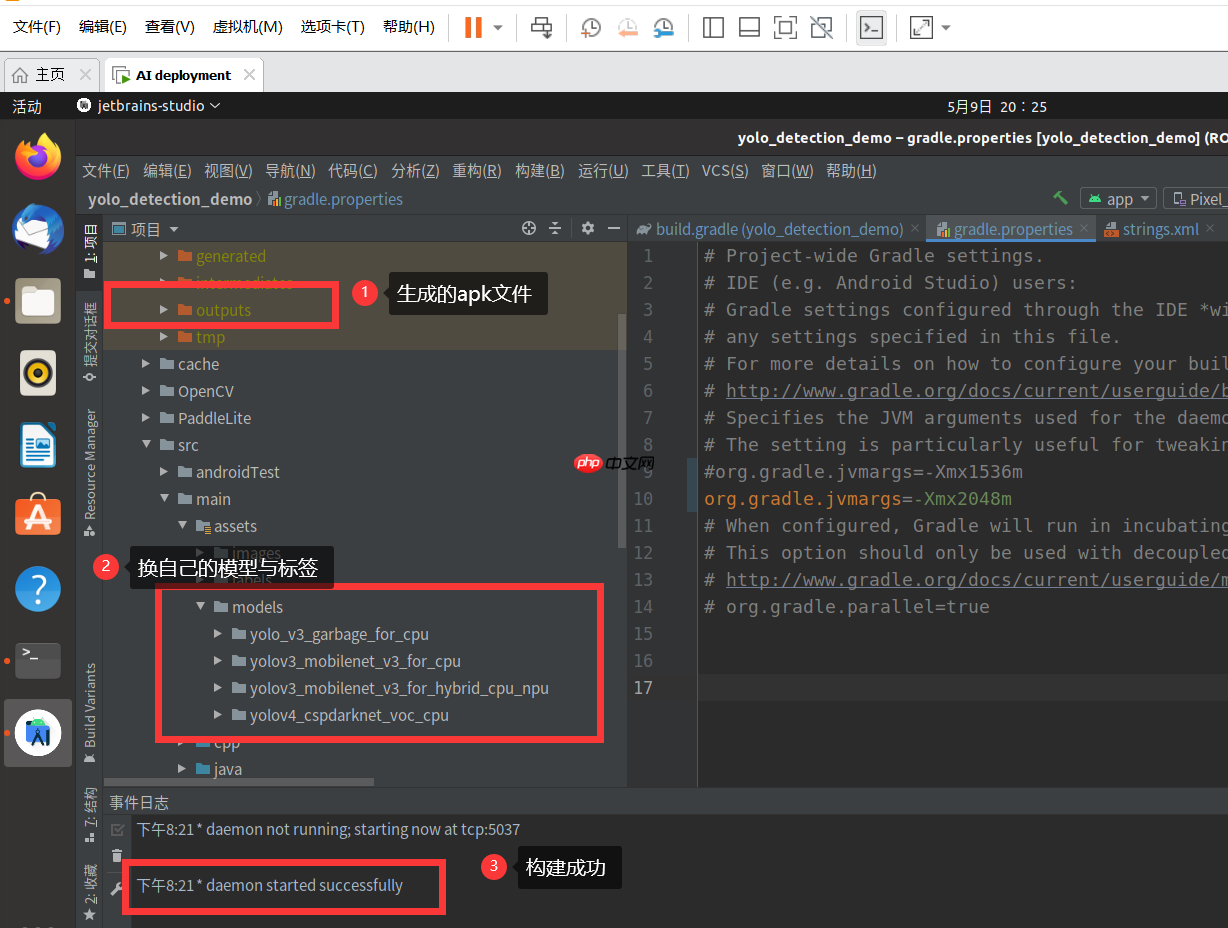

In the PaddleLite-android-demo directory, locate yolo_detection_demo, the project to be imported, as shown below:

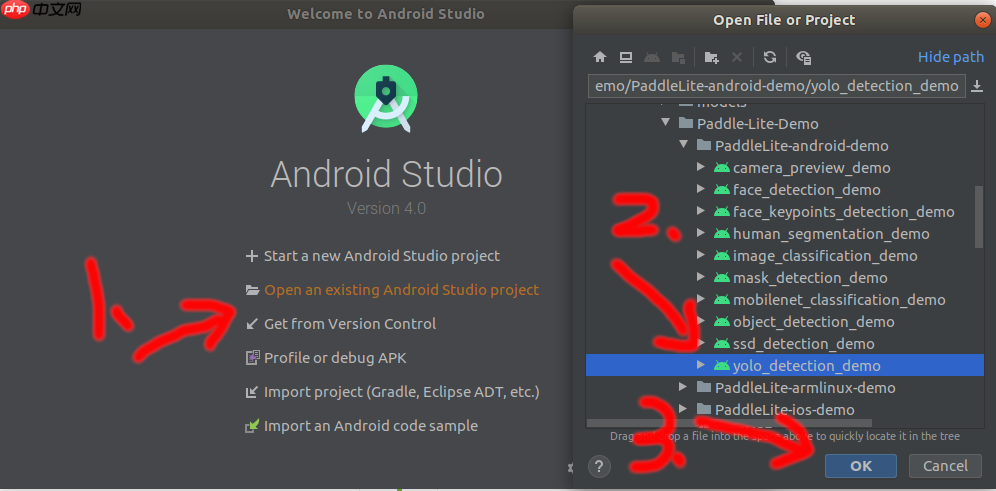

On the Android Studio welcome screen, click Open an existing Android Studio project and import the yolo_detection_demo project.

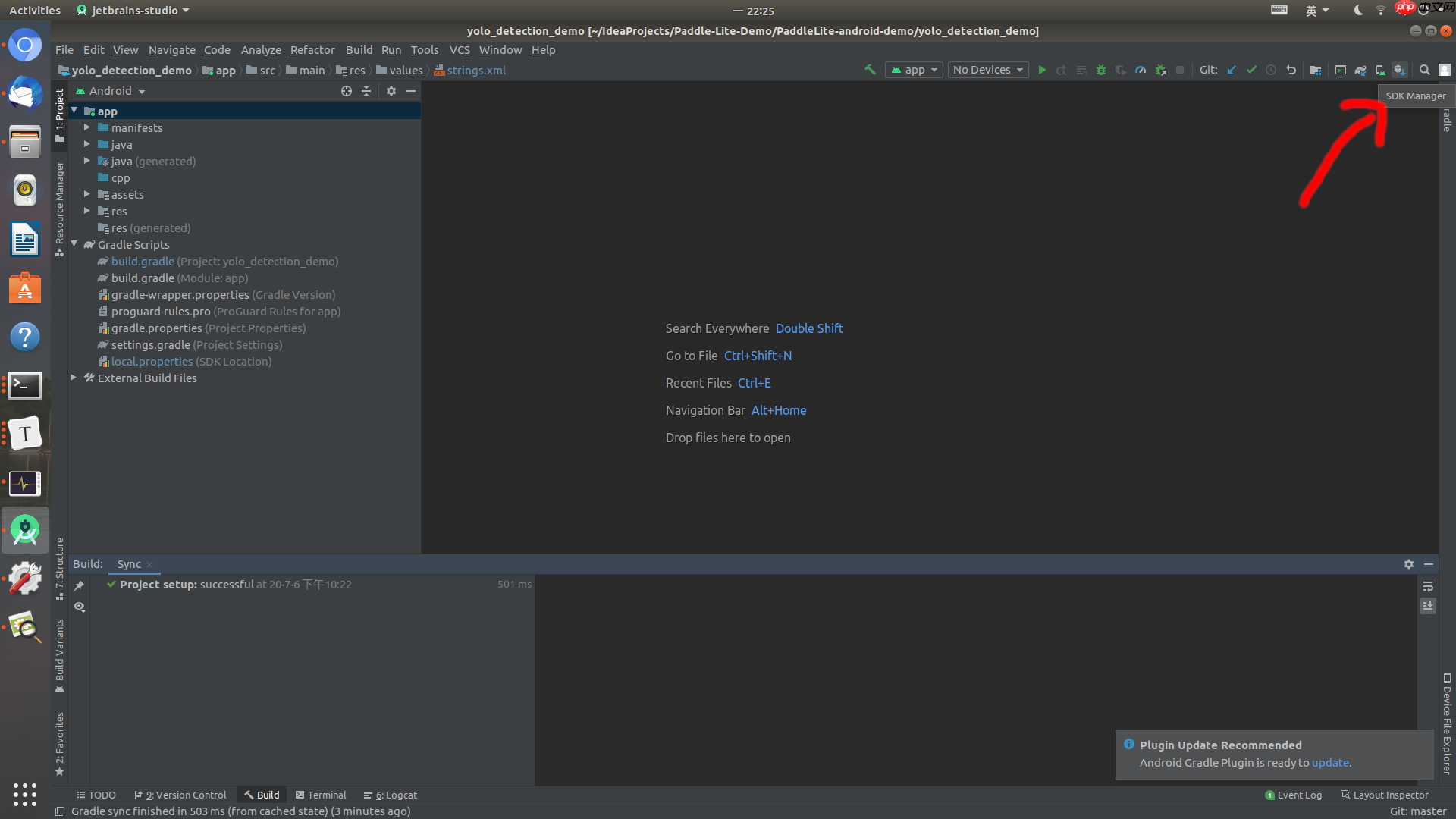

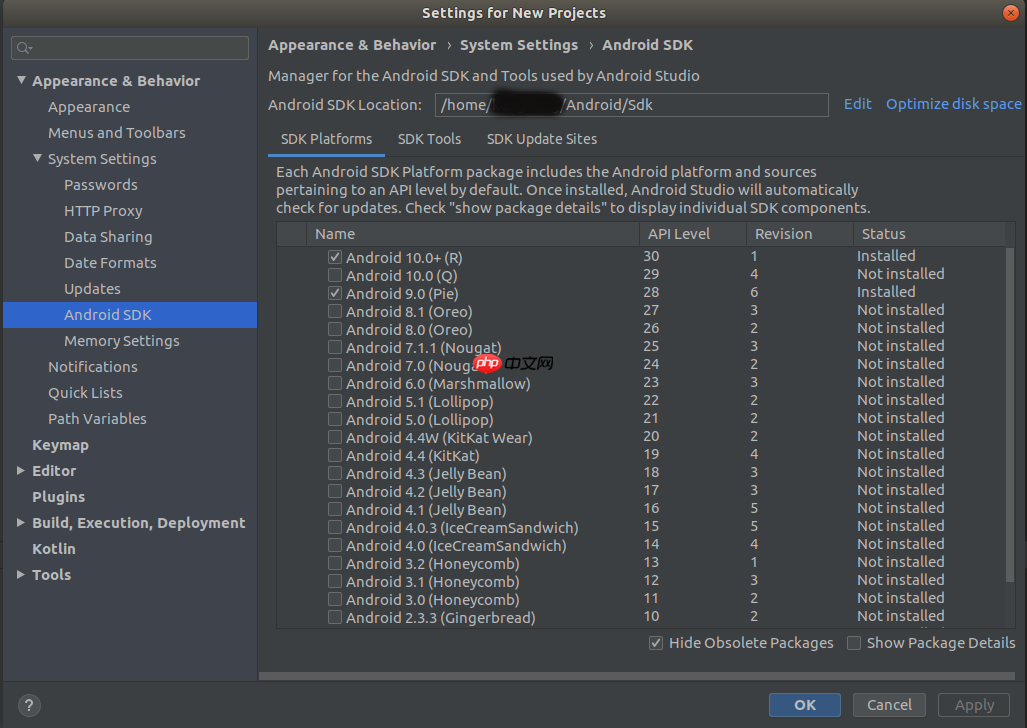

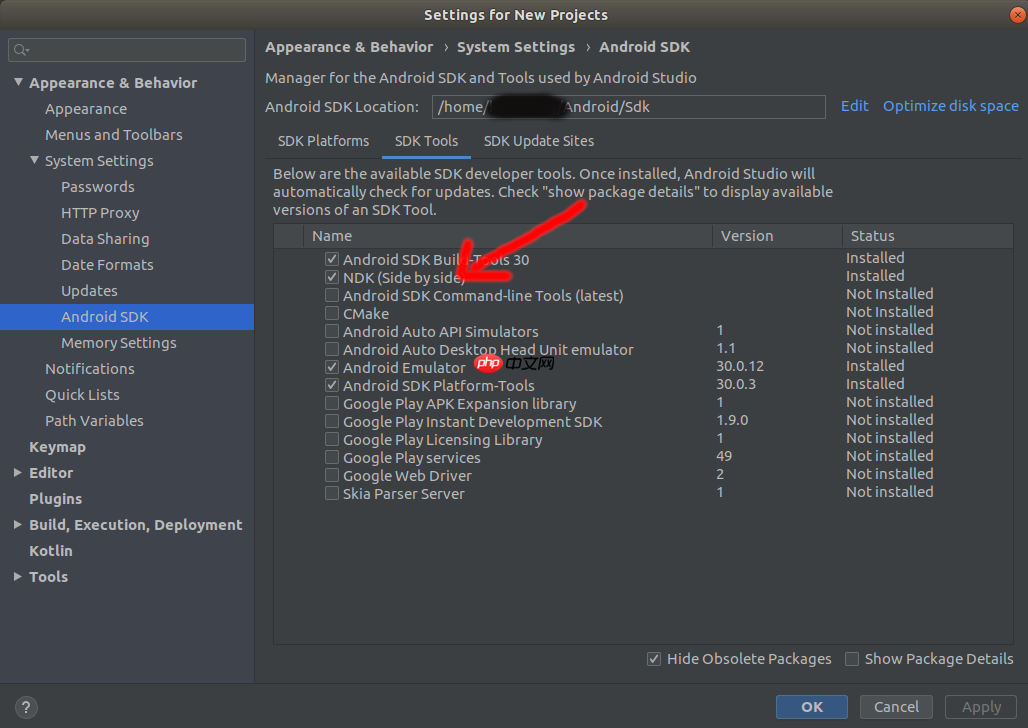

Once the IDE opens, click the SDK Manager button in the top-right corner and download the SDK and NDK.

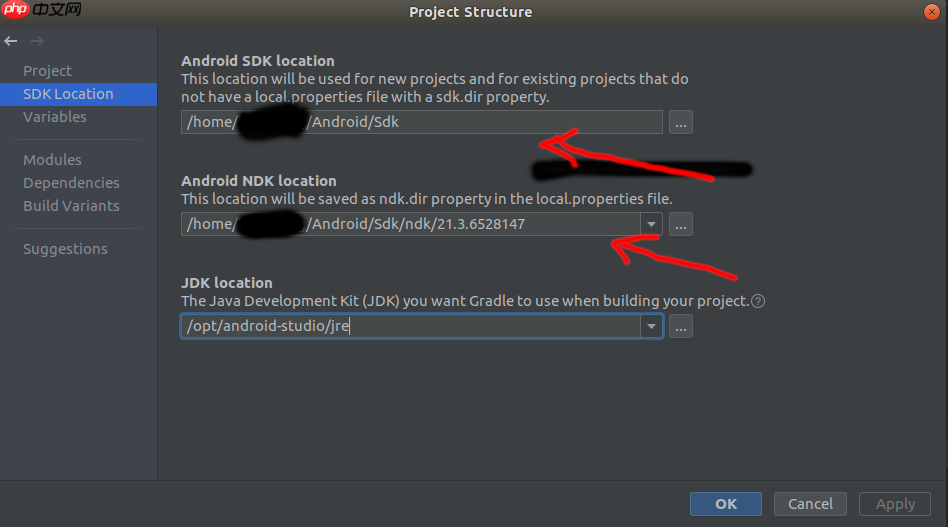

Press Ctrl+Alt+Shift+S to open Project Structure and set the SDK and NDK paths for the project.

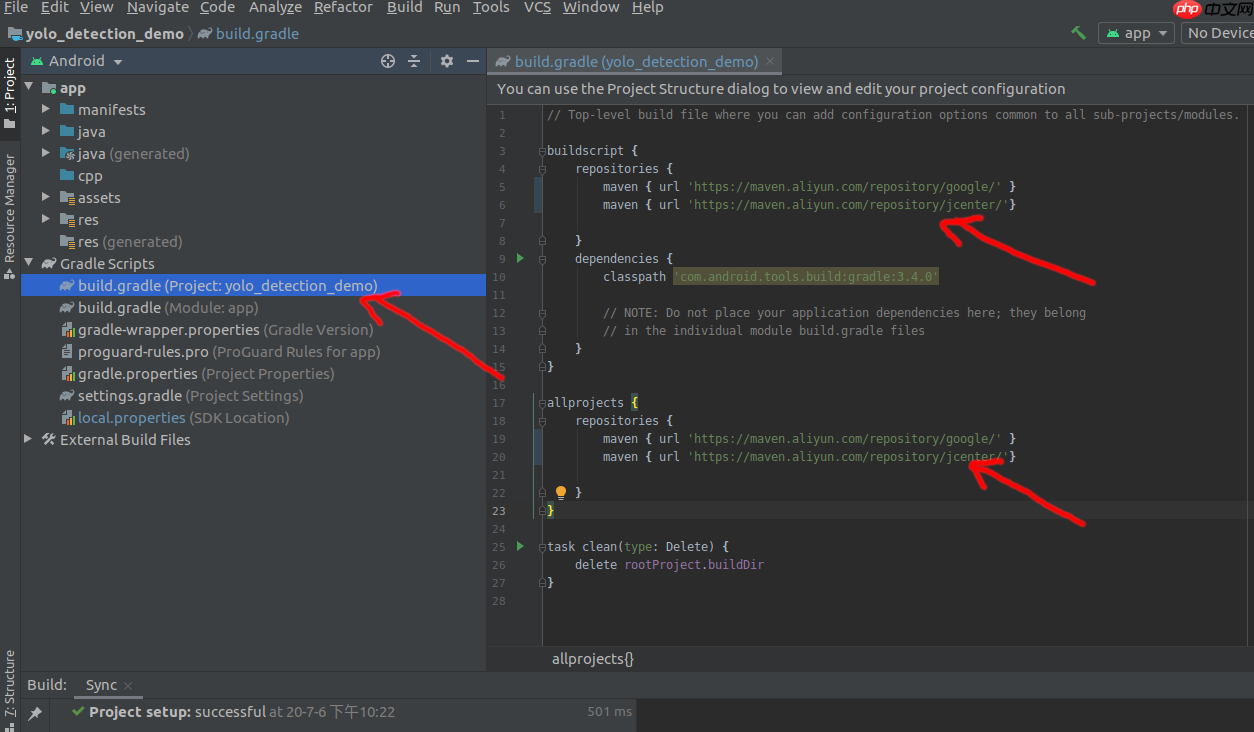
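To my understanding, the SDK and NDK locations chosen in Project Structure are stored as `sdk.dir` and `ndk.dir` in the project's `local.properties` file. Newer Android Gradle Plugin versions prefer `ndkVersion` in build.gradle, so treat `ndk.dir` as specific to this era of tooling. Here is a minimal sketch that writes those keys directly. The helper names and the example paths in the comments are placeholders, not the author's setup:

```python
from pathlib import Path


def format_local_properties(sdk_dir, ndk_dir=None):
    """Build local.properties content holding the SDK (and optionally NDK) location."""
    lines = [f"sdk.dir={sdk_dir}"]
    if ndk_dir:
        lines.append(f"ndk.dir={ndk_dir}")
    return "\n".join(lines) + "\n"


def parse_properties(text):
    """Parse simple key=value lines, ignoring blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            props[key.strip()] = value.strip()
    return props


def write_local_properties(project_dir, sdk_dir, ndk_dir=None):
    """Overwrite <project_dir>/local.properties (Linux paths; Windows backslashes would need escaping)."""
    path = Path(project_dir) / "local.properties"
    path.write_text(format_local_properties(sdk_dir, ndk_dir), encoding="utf-8")
    return path

# Placeholder paths:
# write_local_properties("yolo_detection_demo", "/home/user/Android/Sdk", "/home/user/Android/Sdk/ndk-bundle")
```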

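The change here simply replaces every occurrence of the following block in the original project's build.gradle with the corresponding mirror repositories hosted in China, which speed up dependency downloads:

```groovy
repositories {
    google()
    jcenter()
}
```

The modified file looks like this: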

// Top-level build file where you can add configuration options common to all sub-projects/modules.buildscript {

repositories {

maven { url 'https://maven.aliyun.com/repository/google/' }

maven { url 'https://maven.aliyun.com/repository/jcenter/'}

}

dependencies {

classpath 'com.android.tools.build:gradle:3.4.0'

// NOTE: Do not place your application dependencies here; they belong

// in the individual module build.gradle files

}

}

allprojects {

repositories {

maven { url 'https://maven.aliyun.com/repository/google/' }

maven { url 'https://maven.aliyun.com/repository/jcenter/'}

}

}task clean(type: Delete) {

delete rootProject.buildDir

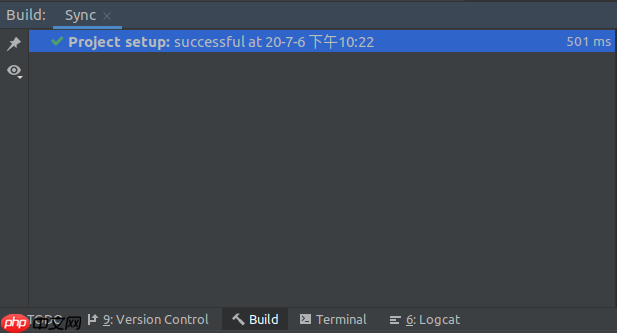

}个人的做法是关闭当前工程再重新进入(感觉比较快),或者也可以使用快捷键Ctrl+F9,等待进度条跑完,最终要如同官方文档的介绍,Build中Sync要全部同步完成,看到绿色打勾才行。
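The mirror substitution described above can also be scripted. Here is a sketch in Python, assuming each `google()` or `jcenter()` call sits on its own line, as in the original snippet. The helper names are hypothetical:

```python
import re

# Aliyun mirrors used in the modified build.gradle above
MIRRORS = {
    "google": "maven { url 'https://maven.aliyun.com/repository/google/' }",
    "jcenter": "maven { url 'https://maven.aliyun.com/repository/jcenter/' }",
}
# A bare google() or jcenter() line; group 1 keeps the original indentation
REPO_CALL = re.compile(r"^([ \t]*)(google|jcenter)\(\)[ \t]*$", re.M)


def use_aliyun_mirrors(gradle_text):
    """Replace every bare google()/jcenter() repository line with its Aliyun mirror."""
    return REPO_CALL.sub(lambda m: m.group(1) + MIRRORS[m.group(2)], gradle_text)


def patch_build_gradle(path):
    """Rewrite a build.gradle file in place."""
    with open(path, encoding="utf-8") as f:
        text = f.read()
    with open(path, "w", encoding="utf-8") as f:
        f.write(use_aliyun_mirrors(text))
```

Matching whole lines keeps the function from touching `google()` inside comments or longer expressions.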

Once the build completes, two demo models trained on the COCO dataset, yolov3_mobilenet_v3_for_cpu and yolov3_mobilenet_v3_for_hybrid_cpu_npu, appear in the PaddleLite-android-demo/yolo_detection_demo/app/src/main/assets directory.

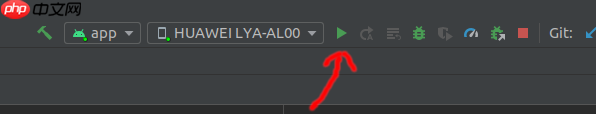

Clicking Run as-is deploys the CPU object-detection model yolov3_mobilenet_v3_for_cpu to the phone.

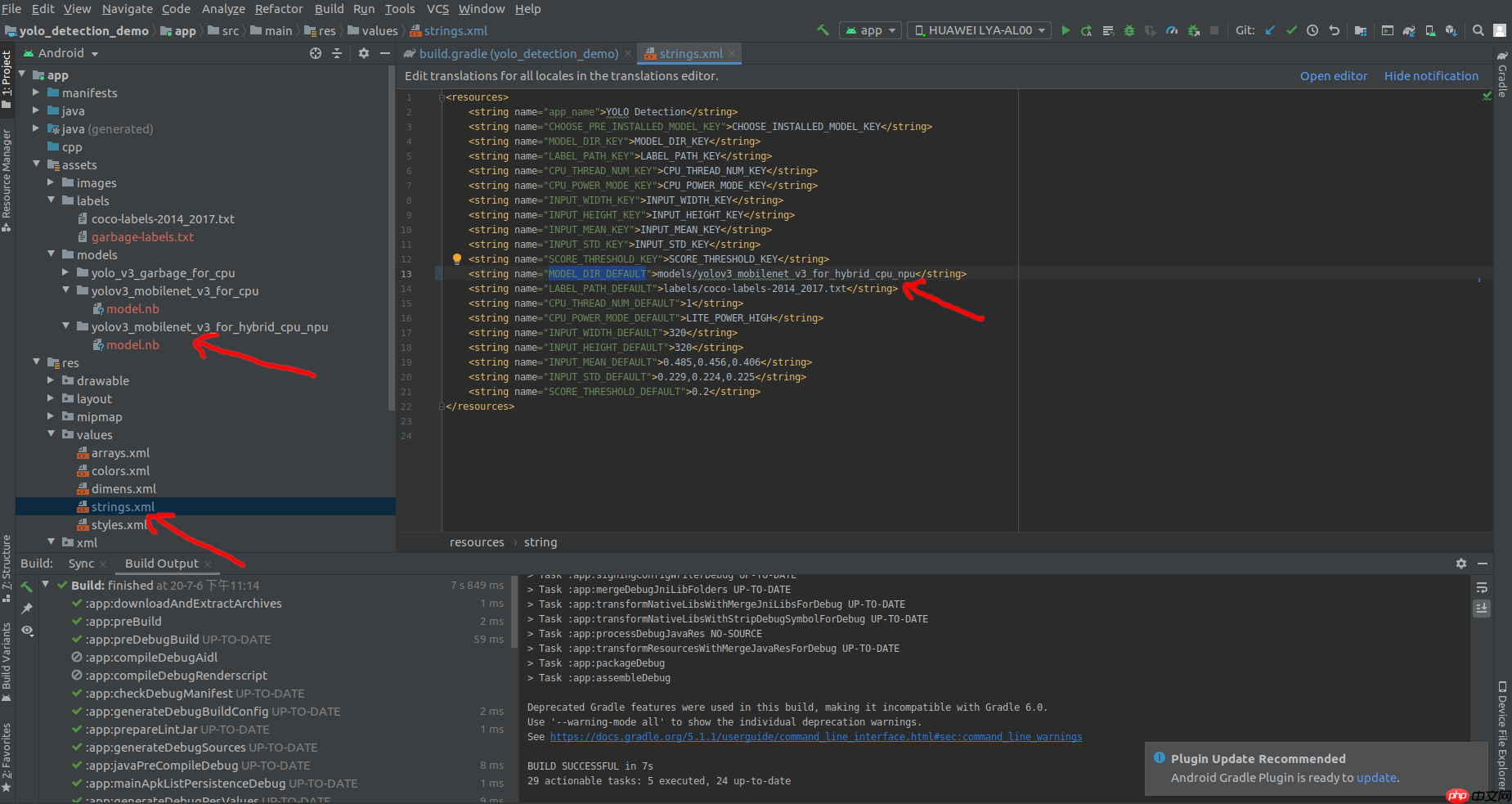

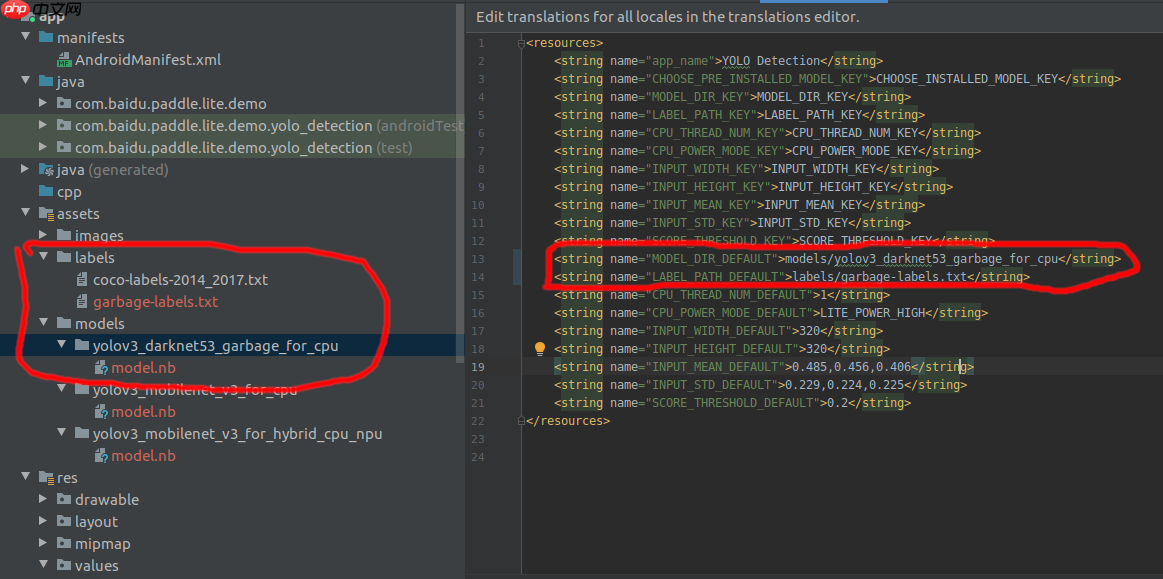

To deploy the NPU model instead, change MODEL_DIR_DEFAULT in PaddleLite-android-demo/yolo_detection_demo/app/src/main/res/values/strings.xml so that it points to the location of yolov3_mobilenet_v3_for_hybrid_cpu_npu, as shown below:

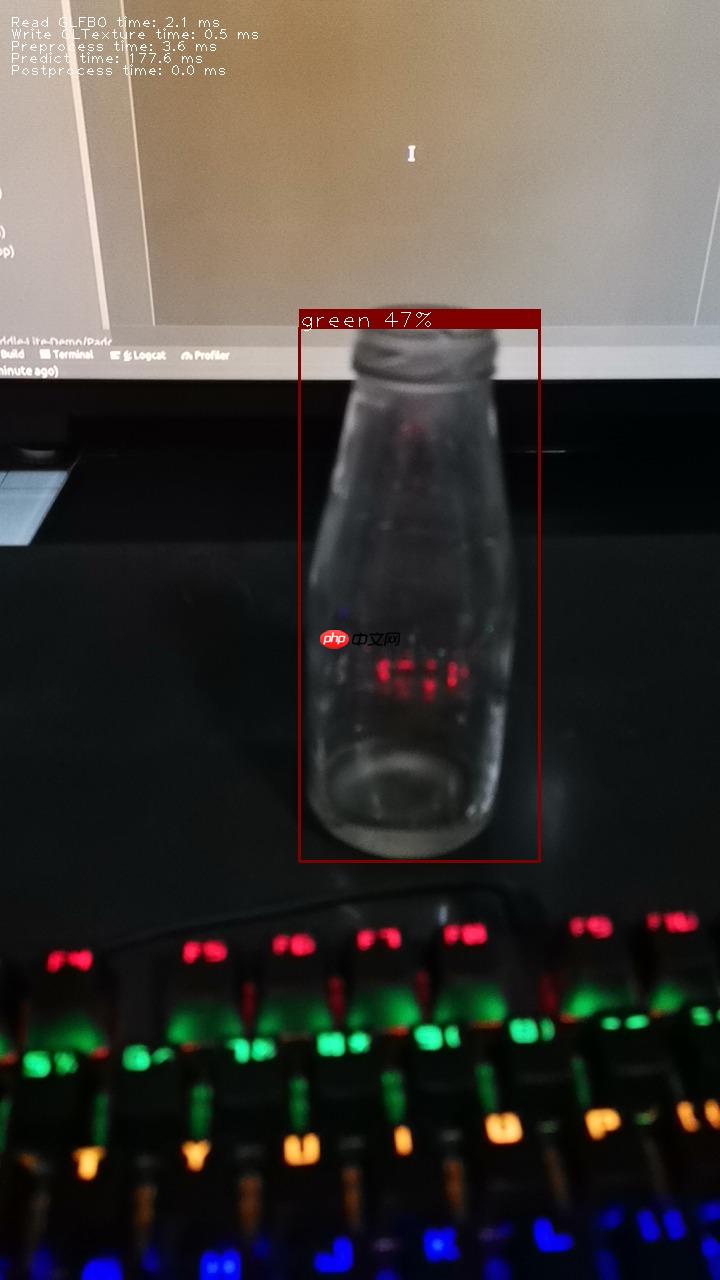

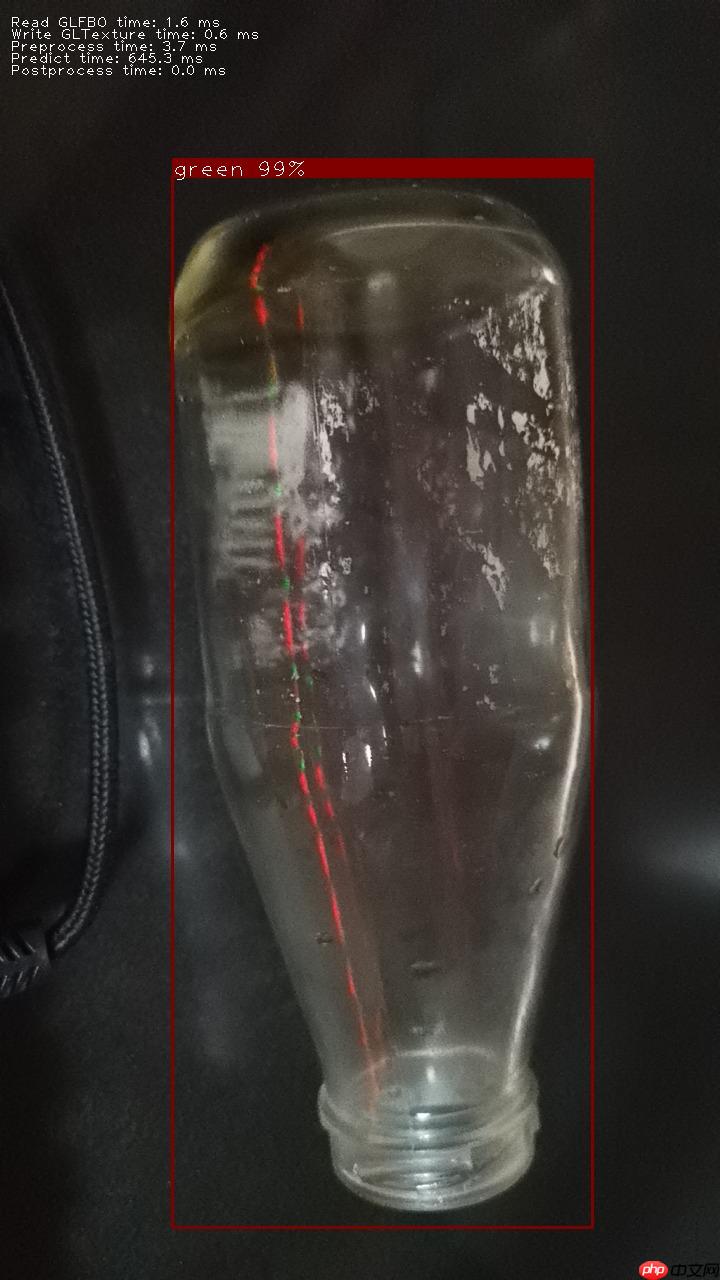
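For repeated model switches, the `MODEL_DIR_DEFAULT` entry can be edited with a short script instead of by hand. A regex is used rather than ElementTree so that comments and formatting elsewhere in strings.xml survive untouched. This is a sketch with hypothetical helper names. The `models/...` values in the test are only assumed examples, so copy the real value format from the existing entry:

```python
import re
from xml.sax.saxutils import escape


def set_string_resource(xml_text, name, value):
    """Return strings.xml text with the <string name="..."> entry set to value."""
    pattern = re.compile(r'(<string\s+name="%s"[^>]*>)(.*?)(</string>)' % re.escape(name), re.S)
    new_text, count = pattern.subn(lambda m: m.group(1) + escape(value) + m.group(3), xml_text)
    if count == 0:
        raise KeyError(f"string resource {name!r} not found")
    return new_text


def update_strings_xml(path, name, value):
    """Apply set_string_resource to a file in place."""
    with open(path, encoding="utf-8") as f:
        text = f.read()
    with open(path, "w", encoding="utf-8") as f:
        f.write(set_string_resource(text, name, value))

# Hypothetical usage (value format must match the existing entry):
# update_strings_xml("app/src/main/res/values/strings.xml",
#                    "MODEL_DIR_DEFAULT", "<dir>/yolov3_mobilenet_v3_for_hybrid_cpu_npu")
```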

Prediction results of the NPU model in the demo:

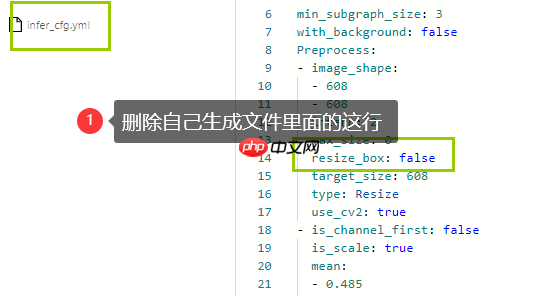

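Deploying the transfer-learned model takes only one step more than deploying the NPU demo model above: updating the path of the corresponding label file.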
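The transfer-learned model in this project detects a single class, `person`, from the `classes_names` list used when converting COCO to VOC. Its label file is therefore far shorter than the COCO one shipped with the demo. Here is a minimal sketch for generating it. It assumes the demo reads one label per line in class-index order, so check the bundled label file to confirm. The file name in the usage comment is hypothetical:

```python
from pathlib import Path


def format_labels(class_names):
    """One class name per line, in the same order as the class ids used in training."""
    return "\n".join(class_names) + "\n"


def parse_labels(text):
    """Inverse of format_labels: read non-empty lines back as class names."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def write_label_file(path, class_names):
    """Write the label file and return its path."""
    path = Path(path)
    path.write_text(format_labels(class_names), encoding="utf-8")
    return path

# Hypothetical file name; point the demo's label path setting at wherever it is written:
# write_label_file("app/src/main/assets/labels/pedestrian_label_list.txt", ["person"])
```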



That concludes this baseline, completed in eight hours, for deploying single/multi-camera pedestrian tracking built with Baidu PaddlePaddle end to end on Android.

