该内容围绕基于用户画像的商品推荐挑战赛展开,介绍赛事背景、任务及评审规则。重点呈现了利用深度学习处理结构化数据二分类的baseline思路,将用户tagid序列转化为文本分类问题,用LSTM模型,展示了数据处理、模型构建、训练及预测过程,最终生成提交结果。

☞☞☞AI 智能聊天, 问答助手, AI 智能搜索, 免费无限量使用 DeepSeek R1 模型☜☜☜

基于用户画像的商品推荐挑战赛

赛题链接:https://challenge.xfyun.cn/?ch=dc-zmt-05

一、赛事背景

讯飞AI营销云基于深耕多年的人工智能和大数据技术,赋予营销智慧创新的大脑,以健全的产品矩阵和全方位的服务,帮助广告主用AI+大数据实现营销效能的全面提升,打造数字营销新生态。

二、赛事任务

基于用户画像的产品推荐,是目前AI营销云服务广告主的一项重要能力,本次赛题选择了两款产品分别在初赛和复赛中进行用户付费行为预测,参赛选手需基于提供的样本构建模型,预测用户是否会购买相应商品。

三、评审规则

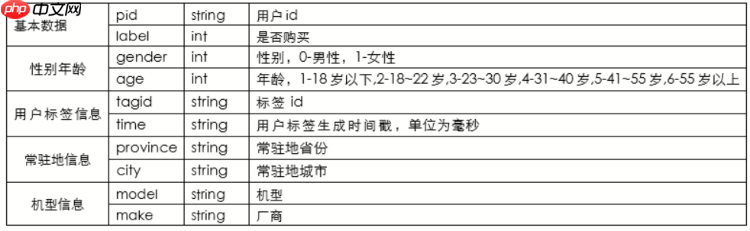

- 数据说明 本次赛题是一个二分类任务,特征维度主要包括:基本数据,性别年龄、用户标签、常驻地信息、机型信息5类特征,出于数据安全的考虑,所有数据均为脱敏处理后的数据。初赛数据和复赛数据特征维度相同。

2.评估指标 本模型依据提交的结果文件,采用F1-score进行评价。

本次数据的特点

仔细分析tagid列,发现该列提供了用户行为的序列信息,想到序列信息,自然联想到lstm,因此这里使用了lstm为基础模型进行分类。

本质是将tagid中每个元素考虑为单词,这样,tagid的序列就是句子,将问题转化为文本分类 + 额外特征的模式。

from multiprocessing import cpu_countimport shutilimport osimport paddleimport paddle.fluid as fluidimport pandas as pd

paddle.enable_static()

train = pd.read_csv('./训练集/train.txt', header=None)

test = pd.read_csv('./测试集/apply_new.txt', header=None)

train.columns = ['pid', 'label', 'gender', 'age', 'tagid', 'time', 'province', 'city', 'model', 'make']

test.columns = ['pid', 'gender', 'age', 'tagid', 'time', 'province', 'city', 'model', 'make']

data = pd.concat([train, test])

data['tagid'] = data['tagid'].apply(lambda x: ' '.join(map(str, eval(x))))print(train.shape,test.shape)

(300000, 10) (100000, 9)

本次采用了文本分类思路,将数据中的tagid当作单词,这里是构建了单词的数据字典和对应的序号

{字:1,字:2,... 字:n}

# 生成数据字典dict_set = {}

ik = 1for tagid in data['tagid'].values: for s in tagid.split(' '): if s in dict_set: pass

else:

dict_set[s] = ik

ik = ik + 1dict_dim = len(dict_set) + 1# 根据数据字典编码tagiddata['input_data'] = data['tagid'].apply(lambda x: ','.join([str(dict_set[i]) for i in x.split(' ')]))print(data.shape,dict_dim)

(400000, 11) 230638

定义了基本的网络结构

input - embedding(这里可以引入预训练,基于word2vec)- bilstm - output

# 定义网络def bilstm_net(data, dict_dim, class_dim, emb_dim=128, hid_dim=128, hid_dim2=96, emb_lr=30.0):

# embedding layer

emb = fluid.layers.embedding(input=data,

size=[dict_dim, emb_dim],

param_attr=fluid.ParamAttr(learning_rate=emb_lr)) # bi-lstm layer

fc0 = fluid.layers.fc(input=emb, size=hid_dim * 4)

rfc0 = fluid.layers.fc(input=emb, size=hid_dim * 4)

lstm_h, c = fluid.layers.dynamic_lstm(input=fc0, size=hid_dim * 4, is_reverse=False)

rlstm_h, c = fluid.layers.dynamic_lstm(input=rfc0, size=hid_dim * 4, is_reverse=True) # extract last layer

lstm_last = fluid.layers.sequence_last_step(input=lstm_h)

rlstm_last = fluid.layers.sequence_last_step(input=rlstm_h) # concat layer

lstm_concat = fluid.layers.concat(input=[lstm_last, rlstm_last], axis=1) # full connect layer

fc1 = fluid.layers.fc(input=lstm_concat, size=hid_dim2, act='tanh') # softmax layer

prediction = fluid.layers.fc(input=fc1, size=class_dim, act='softmax') return prediction

train = data[:train.shape[0]] test = data[train.shape[0]:]

train.head(5)

pid label gender age \

0 1016588 0.0 NaN NaN

1 1295808 1.0 NaN 5.0

2 1110160 0.0 NaN NaN

3 1132597 0.0 NaN 2.0

4 1108714 0.0 NaN NaN

tagid \

0 4457057 9952871 8942704 11273992 12412410356 1293...

1 10577375 13567578 4437795 8934804 9352464 1332...

2 11171956 9454883 9361934 10578048 10234462 125...

3 4457927 9412324 12292192 9231799 11977927 8520...

4 5737867 5105608 13792904 5454488 13098817 1416...

time province city model \

0 [1.606747390128E12,1.606747390128E12,1.6067473... 广西 北海 华为

1 [1.605842042532E12,1.592187596698E12,1.5598650... 广东 广州 OPPO

2 [1.607351673175E12,1.607351673175E12,1.6073516... 内蒙古 锡林郭勒盟 小米

3 [1.56015519913E12,1.56015519913E12,1.582942163... 四川 成都 vivo

4 [1.591494981671E12,1.616071068225E12,1.6160710... 湖南 长沙 vivo

make input_data

0 华为 mate20pro 1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,1...

1 r11 60,61,49,62,63,64,31,65,34,66,67,68,69,70,6,71...

2 小米 红米note2 111,112,93,91,103,58,113,114,115,116,117,118,1...

3 vivo x20 23,143,144,15,145,146,147,148,149,150,151,152,...

4 x23 17,187,188,189,14,190,33,40,150,58,191,192,151...

# 训练数据的预处理def train_mapper(sample):

data, label = sample

data = [int(data) for data in data.split(',')] return data, int(label)# 训练数据的readerdef train_reader():

def reader():

for line in train[['input_data', 'label']].values:

data, label = line[0], line[1] yield data, label return paddle.reader.xmap_readers(train_mapper, reader, cpu_count(), 128)

# 定义输入数据, lod_level不为0指定输入数据为序列数据words = fluid.layers.data(name='input_data', shape=[1], dtype='int64', lod_level=1)

label = fluid.layers.data(name='label', shape=[1], dtype='int64')# 输入,字典,输出model = bilstm_net(words, dict_dim, 2)# 获取损失函数和准确率cost = fluid.layers.cross_entropy(input=model, label=label)

avg_cost = fluid.layers.mean(cost)

acc = fluid.layers.accuracy(input=model, label=label)# 获取预测程序test_program = fluid.default_main_program().clone(for_test=True)# 定义优化方法optimizer = fluid.optimizer.AdagradOptimizer(learning_rate=0.002)

opt = optimizer.minimize(avg_cost)# 创建一个执行器place = fluid.CUDAPlace(0)

exe = fluid.Executor(place)# 进行参数初始化exe.run(fluid.default_startup_program())

train_reader = paddle.batch(reader=train_reader(), batch_size=2048)# 定义输入数据的维度feeder = fluid.DataFeeder(place=place, feed_list=[words, label])# 开始训练for pass_id in range(5): # 进行训练

bst = 0

for batch_id, data in enumerate(train_reader()):

train_cost, train_acc = exe.run(program=fluid.default_main_program(),

feed=feeder.feed(data),

fetch_list=[avg_cost, acc]) if batch_id % 25 == 0: print('Pass:%d, Batch:%d, Cost:%0.5f, Acc:%0.5f' % (pass_id, batch_id, train_cost[0], train_acc[0])) # # 进行测试

# test_costs = []

# test_accs = []

# for batch_id, data in enumerate(train_reader()):

# test_cost, test_acc = exe.run(program=test_program,

# feed=feeder.feed(data),

# fetch_list=[avg_cost, acc])

# test_costs.append(test_cost[0])

# test_accs.append(test_acc[0])

# # 计算平均预测损失在和准确率

# test_cost = (sum(test_costs) / len(test_costs))

# test_acc = (sum(test_accs) / len(test_accs))

# print('Test:%d, Cost:%0.5f, ACC:%0.5f' % (pass_id, test_cost, test_acc))

if train_acc[0] > bst:

bst = train_acc # 保存预测模型

save_path = './tmp_123'

# 删除旧的模型文件

shutil.rmtree(save_path, ignore_errors=True) # 创建保持模型文件目录

os.makedirs(save_path) # 保存预测模型

fluid.io.save_inference_model(save_path, feeded_var_names=[words.name], target_vars=[model], executor=exe)

Pass:0, Batch:0, Cost:0.69311, Acc:0.51904 Pass:0, Batch:25, Cost:0.59071, Acc:0.68750 Pass:0, Batch:50, Cost:0.58202, Acc:0.69531 Pass:0, Batch:75, Cost:0.57571, Acc:0.70215 Pass:0, Batch:100, Cost:0.56397, Acc:0.71241240 Pass:0, Batch:125, Cost:0.54188, Acc:0.72217 Pass:1, Batch:0, Cost:0.54547, Acc:0.71094 Pass:1, Batch:25, Cost:0.50396, Acc:0.75342 Pass:1, Batch:50, Cost:0.51804, Acc:0.74512 Pass:1, Batch:75, Cost:0.50244, Acc:0.74902 Pass:1, Batch:100, Cost:0.49387, Acc:0.75195 Pass:1, Batch:125, Cost:0.48410, Acc:0.75488 Pass:2, Batch:0, Cost:0.49620, Acc:0.75488 Pass:2, Batch:25, Cost:0.44974, Acc:0.79248 Pass:2, Batch:50, Cost:0.46388, Acc:0.77344 Pass:2, Batch:75, Cost:0.44936, Acc:0.78174 Pass:2, Batch:100, Cost:0.42214, Acc:0.80078 Pass:2, Batch:125, Cost:0.44749, Acc:0.77881 Pass:3, Batch:0, Cost:0.43751, Acc:0.79297 Pass:3, Batch:25, Cost:0.40624, Acc:0.81250 Pass:3, Batch:50, Cost:0.43140, Acc:0.78125 Pass:3, Batch:75, Cost:0.40490, Acc:0.80469 Pass:3, Batch:100, Cost:0.38624, Acc:0.81104 Pass:3, Batch:125, Cost:0.39334, Acc:0.81055 Pass:4, Batch:0, Cost:0.37891, Acc:0.82031 Pass:4, Batch:25, Cost:0.38579, Acc:0.81543 Pass:4, Batch:50, Cost:0.38710, Acc:0.81006 Pass:4, Batch:75, Cost:0.37326, Acc:0.81689 Pass:4, Batch:100, Cost:0.35876, Acc:0.82520 Pass:4, Batch:125, Cost:0.36726, Acc:0.82471

# 保存预测模型路径save_path = './tmp_123'# 从模型中获取预测程序、输入数据名称列表、分类器[infer_program, feeded_var_names, target_var] = fluid.io.load_inference_model(dirname=save_path, executor=exe)

test_data = []for input_data in test['input_data'].values:

tmp = [] for input_data_1 in input_data.split(','):

tmp.append(int(input_data_1))

test_data.append(tmp)

result = []

n = 4096for b in [test_data[i:i + n] for i in range(0, len(test_data), n)]:

tensor_words = fluid.create_lod_tensor(b, [[len(x) for x in b]], place)

t_result = exe.run(program=infer_program,

feed={feeded_var_names[0]: tensor_words},

fetch_list=target_var)

result.extend(list(t_result[0][:,1]))

submit = test[['pid']] submit['tmp_train'] = result submit.columns = ['user_id', 'tmp_train'] submit['rank'] = submit['tmp_train'].rank() submit['category_id'] = 1submit.loc[submit['rank'] <= int(submit.shape[0] * 0.5), 'category_id'] = 0

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/ipykernel_launcher.py:2: SettingWithCopyWarning: A value is trying to be set on a copy of a slice from a DataFrame. Try using .loc[row_indexer,col_indexer] = value instead See the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/ipykernel_launcher.py:5: SettingWithCopyWarning: A value is trying to be set on a copy of a slice from a DataFrame. Try using .loc[row_indexer,col_indexer] = value instead See the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy """ /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/ipykernel_launcher.py:6: SettingWithCopyWarning: A value is trying to be set on a copy of a slice from a DataFrame. Try using .loc[row_indexer,col_indexer] = value instead See the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/pandas/core/indexing.py:1763: SettingWithCopyWarning: A value is trying to be set on a copy of a slice from a DataFrame. Try using .loc[row_indexer,col_indexer] = value instead See the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy isetter(loc, value)

print(submit['category_id'].mean())

submit[['user_id', 'category_id']].to_csv('paddle.csv', index=False)

0.5