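The Transformer architecture, built on self-attention, was first proposed for NLP tasks and has recently delivered very strong results on CV tasks as well. However, most existing Transformers perform self-attention directly on 2D feature maps, obtaining the attention matrix from the query and key at each spatial position, so the rich context among neighboring keys goes underused.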


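This paper designs a new attention structure, the CoT Block, that makes full use of the context among keys to guide the learning of a dynamic attention matrix, thereby strengthening visual representations.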

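The authors replace the 3x3 convolutions in ResNet with CoT Blocks to form CoTNet, which performs very well across a range of vision tasks (classification, detection, and segmentation). CoTNet also took first place in the open-domain image recognition challenge at CVPR.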


Paper summary:

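Conventional self-attention in CV obtains the attention matrix directly on the 2D feature map. This captures global context well, but it ignores the neighborhood context that convolution provides, and that neighborhood context genuinely carries a lot of information.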

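The CoTNet module proposed in this paper inherits the global context of conventional self-attention while also incorporating the neighborhood context of convolution, which improves visual representation. The module is plug-and-play: it can directly replace the 3*3 convolution in ResNet's Bottleneck, yielding a Transformer-style architecture.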

Self-attention has been brought into CV mainly for the following reasons:

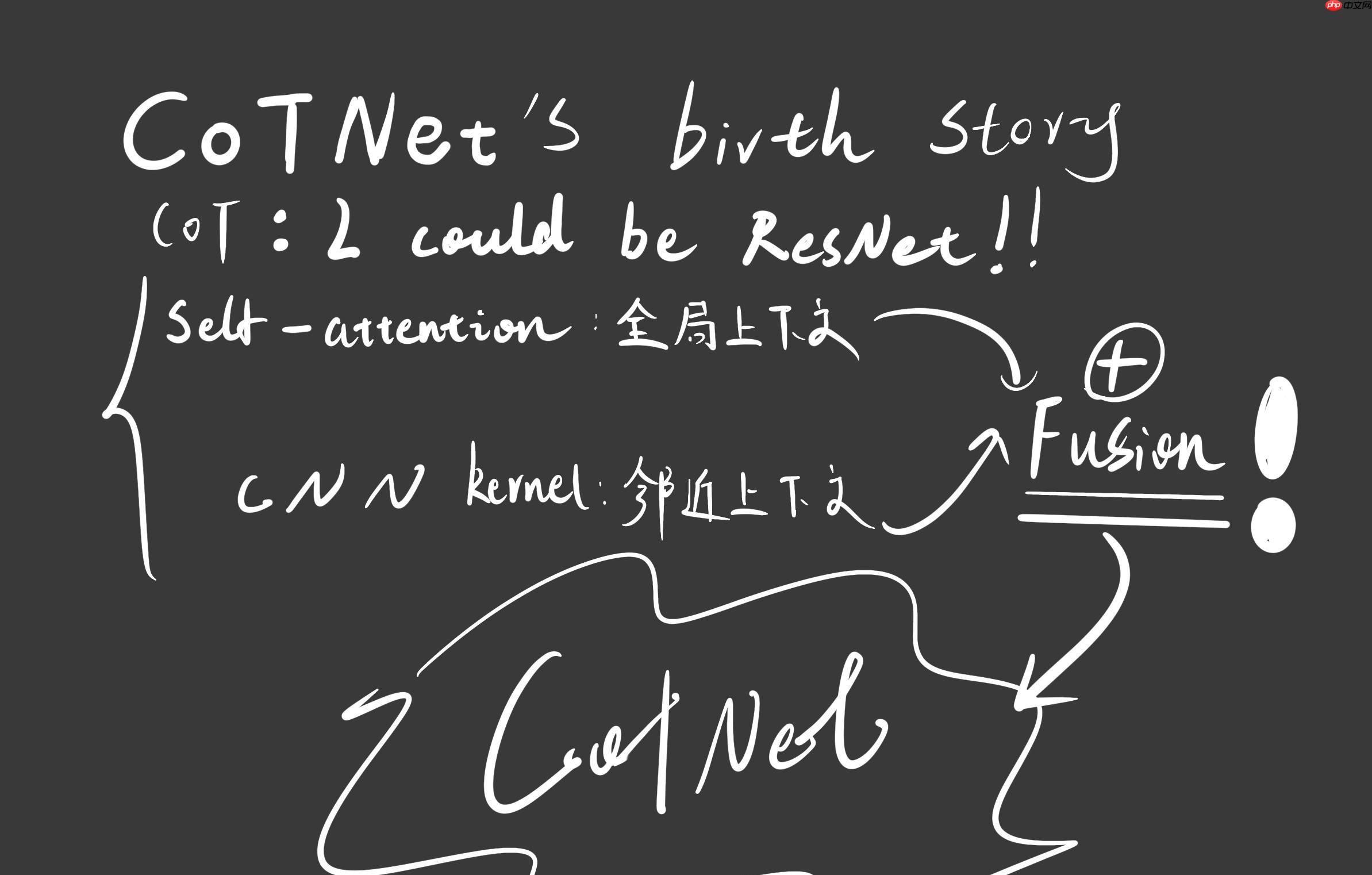

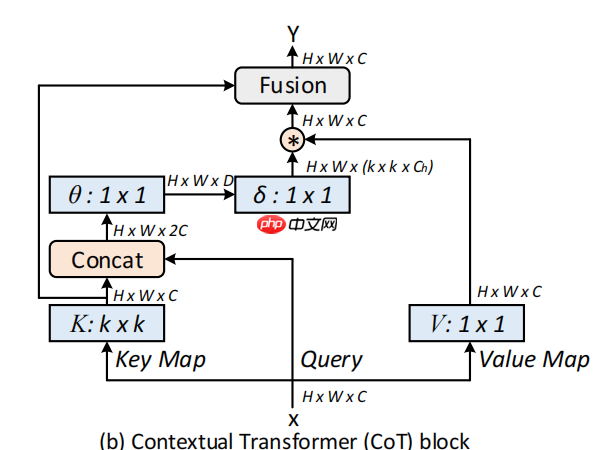

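Building on this, the authors ask: "Is there an elegant way to enhance the Transformer architecture by exploiting the context among input keys in the 2D feature map?" Their answer is the CoT block shown above. Conventional self-attention computes the attention matrix from queries and keys alone, so the context among the keys is not fully used.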

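Concretely, the authors first apply a 3x3 convolution over the keys to model static context, then concatenate the query with these contextualized keys and apply two consecutive 1x1 convolutions to perform self-attention, producing dynamic context. The static and dynamic context are finally fused into the output. (Put simply, a convolution first extracts local information, which fully exploits the static context among the keys.)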

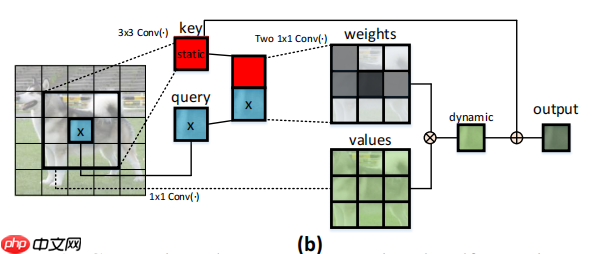

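Today's vision backbones commonly use scalable local multi-head self-attention, shown in the figure above. First, 1x1 convolutions map the input X into three separate spaces, $Q = XW_q$, $K = XW_k$ and $V = XW_v$. Multiplying Q and K within each local $k \times k$ grid then gives the local relation matrix:

$$R = K \circledast Q, \qquad R \in \mathbb{R}^{H \times W \times (k \times k \times C_h)}$$

where $\circledast$ denotes local matrix multiplication and $C_h$ is the number of heads.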
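The local matrix multiplication $\circledast$ is easy to misread as a full matrix product. The NumPy sketch below computes $R = K \circledast Q$ for a single head, assuming a channels-first `(C, H, W)` layout, zero padding at the borders and random stand-in weights; `unfold_local` and `local_relation` are hypothetical helper names, not functions from the paper or from Paddle.

```python
import numpy as np


def unfold_local(x, k):
    """Gather the k x k neighbourhood of every pixel.

    x: (C, H, W) array. Returns (C, k*k, H, W); positions outside the map are zero-padded.
    """
    c, h, w = x.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    patches = np.empty((c, k * k, h, w), dtype=x.dtype)
    for i in range(k):
        for j in range(k):
            # neighbour at offset (i - pad, j - pad) relative to each pixel
            patches[:, i * k + j] = xp[:, i:i + h, j:j + w]
    return patches


def local_relation(q, key, k=3):
    """R = K (*) Q: dot product of each query with the k*k keys around it. Returns (k*k, H, W)."""
    return np.einsum('chw,cnhw->nhw', q, unfold_local(key, k))


rng = np.random.default_rng(0)
C, H, W = 8, 5, 5
x = rng.standard_normal((C, H, W))
w_q = rng.standard_normal((C, C))        # stand-in 1x1 conv weights for Q
w_k = rng.standard_normal((C, C))        # stand-in 1x1 conv weights for K
q = np.einsum('oc,chw->ohw', w_q, x)     # a 1x1 conv is a per-pixel matrix product
key = np.einsum('oc,chw->ohw', w_k, x)
R = local_relation(q, key)
print(R.shape)  # (9, 5, 5)
```

Index 4 of the window axis is the centre offset, so `R[4]` is the ordinary per-pixel dot product of Q and K; the other eight entries compare each query with its neighbours.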

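Because the original self-attention is insensitive to the positions of the input features, positional information must also be injected: a 2D relative position embedding $P$ is multiplied with Q, and the result is added to the relation matrix:

$$\hat{R} = R + P \circledast Q$$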

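The result is then normalized with a softmax over the positions of each $k \times k$ grid to obtain the attention map:

$$A = \mathrm{Softmax}(\hat{R})$$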

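With the attention map in hand, the values V within each $k \times k$ local grid are aggregated according to A, giving the output of the attention:

$$Y = V \circledast A$$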
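Putting the four steps together, here is a single-head NumPy sketch of local self-attention. It is an illustration under stated assumptions, not the paper's implementation: one head, a channels-first `(C, H, W)` map, random stand-in weights, and zero padding (padded keys and values are simply zeros, so border windows still spend some attention on them). The function names are hypothetical.

```python
import numpy as np


def unfold_local(x, k):
    """(C, H, W) -> (C, k*k, H, W): the zero-padded k x k neighbourhood of every pixel."""
    c, h, w = x.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    return np.stack([xp[:, i:i + h, j:j + w] for i in range(k) for j in range(k)], axis=1)


def softmax(z, axis):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def local_self_attention(x, w_q, w_k, w_v, pos, k=3):
    """Single-head local self-attention over k x k windows.

    x: (C, H, W); w_q, w_k, w_v: (C, C) 1x1-conv weights; pos: (k*k, C) relative position embedding P.
    """
    q = np.einsum('oc,chw->ohw', w_q, x)
    key = np.einsum('oc,chw->ohw', w_k, x)
    v = np.einsum('oc,chw->ohw', w_v, x)
    r = np.einsum('chw,cnhw->nhw', q, unfold_local(key, k))   # R = K (*) Q
    r_hat = r + np.einsum('nc,chw->nhw', pos, q)              # R_hat = R + P (*) Q
    a = softmax(r_hat, axis=0)                                # A: normalized over the k*k window
    return np.einsum('nhw,cnhw->chw', a, unfold_local(v, k))  # Y = V (*) A


rng = np.random.default_rng(0)
C, H, W, k = 4, 5, 5, 3
x = np.ones((C, H, W))
y = local_self_attention(x, rng.standard_normal((C, C)), rng.standard_normal((C, C)),
                         np.eye(C), rng.standard_normal((k * k, C)), k)
```

With `x` all ones and an identity value projection, every interior output equals 1, because the softmax weights of each window sum to 1; only the border windows, which include padded zeros, come out smaller.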

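Conventional self-attention readily triggers feature interactions across different spatial positions. However, in this mechanism every query-key relation is learned from an isolated query-key pair, without exploring the rich context between them, which severely limits visual representation learning.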

The authors therefore propose the CoT Block, shown in the figure above, which folds context mining and self-attention learning into a single structure.

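Given the input feature $X \in \mathbb{R}^{H \times W \times C}$, three variables are defined: $K = X$, $Q = X$ and $V = XW_v$ (only V goes through a feature embedding; Q and K keep the original values of X).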
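The only learned projection at this stage is $W_v$. A minimal NumPy sketch, assuming a single channels-first `(C, H, W)` feature map with no batch axis and no BatchNorm (the Paddle code later in the article adds one after the value convolution); `conv1x1` is a hypothetical helper:

```python
import numpy as np


def conv1x1(x, weight):
    """1x1 convolution without bias: applies a (C_out, C_in) matrix at every pixel of a (C_in, H, W) map."""
    return np.einsum('oc,chw->ohw', weight, x)


rng = np.random.default_rng(0)
C, H, W = 4, 3, 3
x = rng.standard_normal((C, H, W))
w_v = rng.standard_normal((C, C))  # stand-in for the learned value embedding W_v

keys = x                   # K = X
queries = x                # Q = X
values = conv1x1(x, w_v)   # V = X W_v

# The same V computed pixel by pixel, to show that a 1x1 conv is a per-pixel matrix product
per_pixel = np.array([[w_v @ x[:, i, j] for j in range(W)] for i in range(H)])  # (H, W, C)
```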

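The authors first apply a $k \times k$ group convolution over K to obtain a context-aware key representation, denoted $K^1 \in \mathbb{R}^{H \times W \times C}$. $K^1$ can be viewed as a static model of the local neighborhood.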
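Grouping is what keeps this $k \times k$ convolution cheap: with g groups, each output channel only sees C/g input channels, so the weight count drops by a factor of g. A quick count in plain Python; `conv_params` is a hypothetical helper and groups=4 is only an illustrative value. For reference, the Paddle reproduction later in this article uses an ungrouped convolution, and the first key-embedding row of its model summary (`Conv2D-4`) shows exactly the 36,864 weights computed here.

```python
def conv_params(c_in, c_out, k, groups=1, bias=False):
    """Parameter count of a k x k Conv2D; each of the `groups` groups sees c_in // groups input channels."""
    assert c_in % groups == 0 and c_out % groups == 0
    return c_out * (c_in // groups) * k * k + (c_out if bias else 0)


print(conv_params(64, 64, 3))            # 36864  (ordinary 3x3 conv, 64 channels)
print(conv_params(64, 64, 3, groups=4))  # 9216   (the same conv split into 4 groups)
```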

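Next, $K^1$ and Q are concatenated, and two consecutive 1x1 convolutions are applied to the result:

$$A = [K^1, Q]\, W_\theta W_\delta$$

where $W_\theta$ is followed by a ReLU and $W_\delta$ has no activation.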
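The channel bookkeeping of this step is easy to lose track of: 2C channels go in, k·k·C channels come out, one k×k weight map per channel. A NumPy sketch assuming the Paddle code's settings (C = 64, k = 3, reduction factor 4), random stand-in weights and no BatchNorm; with these settings the two weight counts match the `Conv2D-6` (4,096) and `Conv2D-7` (19,008) rows of the model summary later in the article. `attention_embed` here is a hypothetical function, not the Paddle layer of the same name.

```python
import numpy as np


def attention_embed(k1, q, w_theta, w_delta, b_delta):
    """A = [K^1, Q] W_theta W_delta: ReLU after W_theta, no activation after W_delta (BatchNorm omitted)."""
    y = np.concatenate([k1, q], axis=0)                              # (2C, H, W)
    hidden = np.maximum(np.einsum('oc,chw->ohw', w_theta, y), 0.0)   # (C_mid, H, W)
    return np.einsum('oc,chw->ohw', w_delta, hidden) + b_delta[:, None, None]  # (k*k*C, H, W)


C, H, W, k, factor = 64, 8, 8, 3, 4
c_mid = 2 * C // factor  # 32
rng = np.random.default_rng(0)
k1 = rng.standard_normal((C, H, W))  # static context K^1 (stand-in values)
q = rng.standard_normal((C, H, W))   # query Q = X
w_theta = rng.standard_normal((c_mid, 2 * C)) * 0.1
w_delta = rng.standard_normal((k * k * C, c_mid)) * 0.1
b_delta = np.zeros(k * k * C)        # the second conv in the Paddle code has a bias

A = attention_embed(k1, q, w_theta, w_delta, b_delta)
print(A.shape)                      # (576, 8, 8)
print(w_theta.size)                 # 4096
print(w_delta.size + b_delta.size)  # 19008
```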

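Unlike conventional self-attention, the matrix A here comes from the interaction between the query and the local context $K^1$, rather than from isolated query-key pairs alone. In other words, self-attention is enhanced under the guidance of the local context.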

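The authors then multiply V by this attention map (again a local matrix multiplication) to obtain the dynamic context $K^2$:

$$K^2 = V \circledast A$$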
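One way to read $K^2 = V \circledast A$ in code: reshape A into one k×k weight map per channel and position, softmax it over the window, and take the weighted sum of the neighbouring values. This NumPy sketch assumes per-channel weights (the same `(C, k*k, H, W)` layout the Paddle reshape uses), zero padding, and a plain sum for the final fusion of $K^1$ and $K^2$, which is what the Paddle code does; the paper's fusion step may be more elaborate. The helper names are hypothetical.

```python
import numpy as np


def unfold_local(x, k):
    """(C, H, W) -> (C, k*k, H, W): the zero-padded k x k neighbourhood of every pixel."""
    c, h, w = x.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    return np.stack([xp[:, i:i + h, j:j + w] for i in range(k) for j in range(k)], axis=1)


def softmax(z, axis):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def dynamic_context(v, a, k=3):
    """K^2 = V (*) A: for each channel, a softmax-weighted sum of V over every k x k window.

    v: (C, H, W); a: (k*k*C, H, W), read as (C, k*k, H, W).
    """
    c, h, w = v.shape
    weights = softmax(a.reshape(c, k * k, h, w), axis=1)  # normalize over the window
    return (weights * unfold_local(v, k)).sum(axis=1)     # (C, H, W)


rng = np.random.default_rng(0)
C, H, W, k = 4, 6, 6, 3
k1 = rng.standard_normal((C, H, W))  # static context K^1 (stand-in values)
v = np.ones((C, H, W))               # constant values make the result easy to check
a = rng.standard_normal((k * k * C, H, W))
k2 = dynamic_context(v, a, k)
y = k1 + k2                          # additive fusion, as in the Paddle code
```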

The CoT design is a unified self-attention building block that can stand in for standard convolution in ConvNets.

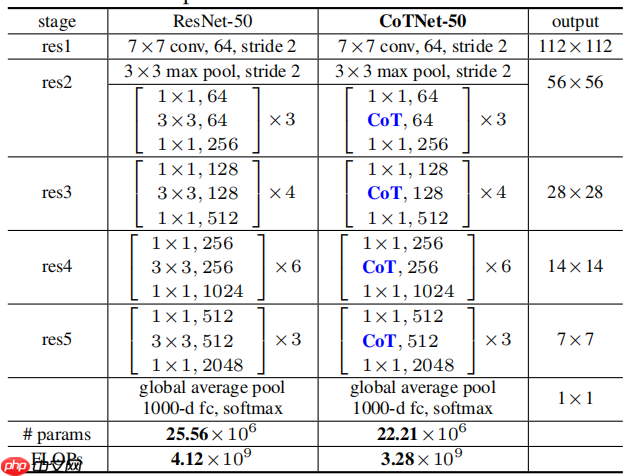

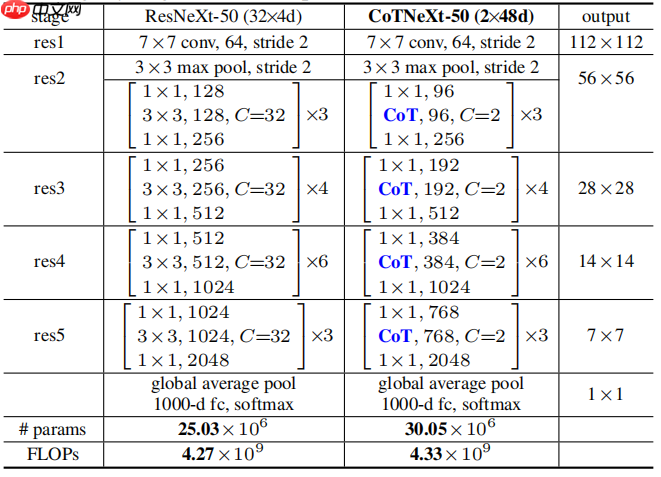

The authors therefore replace the 3x3 convolutions in ResNet and ResNeXt with CoT, forming CoTNet and CoTNeXt.
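Because a CoT block keeps the input and output shapes of the 3x3 convolution it replaces, CoTNet-50 inherits ResNet-50's stage layout. A small plain-Python trace of the feature-map sizes, assuming a 224×224 input and the stem and pooling settings of the Paddle code later in this article (a stride-2 3×3 average pooling in front of the CoT layer whenever a block downsamples); the block counts [3, 4, 6, 3] do not affect the shapes. The helper names are hypothetical.

```python
def out_size(size, kernel, stride, padding):
    """Spatial size after a conv/pool layer: floor((size + 2 * padding - kernel) / stride) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def cotnet50_stage_shapes(size=224, expansion=4):
    """(name, channels, height, width) after the stem and after each of the four stages."""
    size = out_size(size, 7, 2, 3)  # 7x7 stride-2 stem convolution
    size = out_size(size, 3, 2, 1)  # 3x3 stride-2 max pooling
    shapes = [('stem', 64, size, size)]
    for idx, planes in enumerate((64, 128, 256, 512)):
        if idx > 0:
            size = out_size(size, 3, 2, 1)  # first block of stages 2-4: 3x3 stride-2 average pooling
        shapes.append((f'layer{idx + 1}', planes * expansion, size, size))
    return shapes


for row in cotnet50_stage_shapes():
    print(row)
```

The traced sizes (56, 56, 28, 14, 7) agree with the output shapes of the Bottleneck rows in the model summary further down.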

As the tables show, CoTNet-50 has slightly fewer parameters and FLOPs than ResNet-50. Compared with ResNeXt-50, CoTNeXt-50 has slightly more parameters but similar FLOPs.


```python
# CoTNetBlock.py
import paddle
import paddle.nn as nn
import paddle.nn.functional as F


# Build the CoTNetLayer
class CoTNetLayer(nn.Layer):

    def __init__(self, dim=512, kernel_size=3):
        super().__init__()
        self.dim = dim
        self.kernel_size = kernel_size

        # k x k convolution that extracts local context, i.e. the static context K^1 of the input X.
        # The text describes a grouped convolution; this reproduction uses an ordinary one (groups=1),
        # which is why Conv2D-4 in the summary below has 64 * 64 * 3 * 3 = 36,864 weights.
        self.key_embed = nn.Sequential(
            nn.Conv2D(dim, dim, kernel_size=kernel_size, padding=kernel_size // 2, stride=1, bias_attr=False),
            nn.BatchNorm2D(dim),
            nn.ReLU()
        )
        # 1x1 convolution that encodes the values V
        self.value_embed = nn.Sequential(
            nn.Conv2D(dim, dim, kernel_size=1, stride=1, bias_attr=False),
            nn.BatchNorm2D(dim)
        )

        factor = 4
        # Two consecutive 1x1 convolutions compute the attention matrix from [K^1, Q]
        self.attention_embed = nn.Sequential(
            nn.Conv2D(2 * dim, 2 * dim // factor, 1, bias_attr=False),  # input: the concatenated features, 2*C channels
            nn.BatchNorm2D(2 * dim // factor),
            nn.ReLU(),
            nn.Conv2D(2 * dim // factor, kernel_size * kernel_size * dim, 1, stride=1)  # output: (k*k*C) x H x W
        )

    def forward(self, x):
        bs, c, h, w = x.shape
        k1 = self.key_embed(x)                                 # [bs, c, h, w]: static context, used as the key
        v = paddle.flatten(self.value_embed(x), start_axis=2)  # [bs, c, h*w]: value encoding
        y = paddle.concat([k1, x], axis=1)                     # [bs, 2c, h, w]: K^1 concatenated with Q (= x)
        att = self.attention_embed(y)                          # [bs, c*k*k, h, w]: attention matrix
        att = paddle.reshape(att, [bs, c, self.kernel_size * self.kernel_size, h, w])
        att = att.mean(axis=2)                                 # [bs, c, h, w]: average over the k*k window
        att = paddle.flatten(att, start_axis=2)                # [bs, c, h*w]
        # Simplification: the softmax is taken over all h*w positions (not per k x k window),
        # and the weights re-scale V element-wise instead of aggregating neighbours.
        k2 = F.softmax(att, axis=-1) * v
        k2 = paddle.reshape(k2, [bs, c, h, w])                 # dynamic context K^2
        return k1 + k2                                         # fuse static and dynamic context by addition


# if __name__ == '__main__':
#     input = paddle.randn(shape=[50, 512, 7, 7])
#     cot = CoTNetLayer(dim=512, kernel_size=3)
#     output = cot(input)
#     print(output.shape)
```

```python
# CoTResNet: ResNet-50 with the 3x3 convolution of every Bottleneck replaced by a CoTNetLayer
import numpy as np
import paddle
import paddle.nn as nn

from CoTNetBlock import CoTNetLayer  # the layer defined above, saved as CoTNetBlock.py

zeros_ = nn.initializer.Constant(value=0.)
ones_ = nn.initializer.Constant(value=1.)
kaiming_normal_ = nn.initializer.KaimingNormal()


def get_n_params(model):
    """Total number of elements across all parameters of a model."""
    return sum(int(np.prod(p.shape)) for p in model.parameters())


# Build the Bottleneck
class Bottleneck(nn.Layer):
    expansion = 4

    def __init__(self, in_planes, planes, stride=1, downsample=None):
        super().__init__()
        self.conv1 = nn.Conv2D(in_planes, planes, kernel_size=1, bias_attr=False)
        self.bn1 = nn.BatchNorm2D(planes)
        self.cot_layer = CoTNetLayer(dim=planes, kernel_size=3)  # replaces the 3x3 convolution
        self.bn2 = nn.BatchNorm2D(planes)
        self.conv3 = nn.Conv2D(planes, planes * self.expansion, 1, bias_attr=False)
        self.bn3 = nn.BatchNorm2D(planes * self.expansion)
        self.relu = nn.ReLU()
        self.downsample = downsample
        self.stride = stride
        # The CoT layer always runs at stride 1, so downsampling blocks average-pool before it
        if stride > 1:
            self.avd = nn.AvgPool2D(3, 2, padding=1)
        else:
            self.avd = None

    def forward(self, x):
        residual = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        if self.avd is not None:
            out = self.avd(out)

        out = self.cot_layer(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)
        if self.downsample is not None:
            residual = self.downsample(x)

        out += residual
        out = self.relu(out)
        return out


# Build CoTResNet
class CoTResNet(nn.Layer):

    def __init__(self, block, layers, num_classes=1000):
        super().__init__()
        self.in_planes = 64
        self.conv1 = nn.Conv2D(3, 64, kernel_size=7, stride=2, padding=3, bias_attr=False)
        self.bn1 = nn.BatchNorm2D(64)
        self.relu = nn.ReLU()
        self.maxpool = nn.MaxPool2D(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, layers[0])
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2)
        self.avgpool = nn.AvgPool2D(7, stride=1)  # assumes a 224x224 input (7x7 final feature map)
        self.fc = nn.Linear(512 * block.expansion, num_classes)

    def _init_weights(self, m):
        # Kaiming init for convolutions, weight=1 / bias=0 for BatchNorm (not called anywhere in this listing)
        if isinstance(m, nn.Conv2D):
            kaiming_normal_(m.weight)
        elif isinstance(m, nn.BatchNorm2D):
            ones_(m.weight)
            zeros_(m.bias)

    def _make_layer(self, block, planes, blocks, stride=1):
        downsample = None
        if stride != 1 or self.in_planes != planes * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2D(self.in_planes, planes * block.expansion,
                          kernel_size=1, stride=stride, bias_attr=False),
                nn.BatchNorm2D(planes * block.expansion),
            )
        layers = [block(self.in_planes, planes, stride, downsample)]
        self.in_planes = planes * block.expansion
        for _ in range(1, blocks):
            layers.append(block(self.in_planes, planes))
        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = self.avgpool(x)
        x = paddle.flatten(x, start_axis=1)
        x = self.fc(x)
        return x


def cotnet50(**kwargs):
    return CoTResNet(Bottleneck, [3, 4, 6, 3], **kwargs)


# if __name__ == '__main__':
#     paddle.Model(cotnet50()).summary((1, 3, 224, 224))
#     # paddle.Model(paddle.vision.resnet50()).summary((1, 3, 224, 224))
```

```python
# Unpack the mini ImageNet dataset (AI Studio notebook shell commands)
# !mkdir ~/data/ILSVRC2012
# !tar -xf ~/data/data89857/ILSVRC2012mini.tar -C ~/data/ILSVRC2012
```
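The `forward` of `CoTNetLayer` departs from the per-window aggregation $K^2 = V \circledast A$ described earlier: it averages the k×k attention logits, normalizes them with a softmax across all h×w positions, and multiplies the result element-wise with V, so each position of V is re-weighted rather than aggregated from a k×k neighbourhood. For readers without Paddle installed, here is a NumPy mirror of that forward pass for a single image, assuming random stand-in weights and omitting BatchNorm and the conv bias; `conv2d_same` and `cot_forward_simplified` are hypothetical helpers.

```python
import numpy as np


def conv2d_same(x, weight):
    """Naive stride-1 'same' convolution without bias. x: (C_in, H, W); weight: (C_out, C_in, k, k)."""
    k = weight.shape[-1]
    pad = k // 2
    h, w = x.shape[1:]
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((weight.shape[0], h, w))
    for i in range(k):
        for j in range(k):
            out += np.einsum('oc,chw->ohw', weight[:, :, i, j], xp[:, i:i + h, j:j + w])
    return out


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def cot_forward_simplified(x, w_key, w_value, w_theta, w_delta, k=3):
    """Mirror of CoTNetLayer.forward for one (C, H, W) image, BatchNorm and biases omitted."""
    c, h, w = x.shape
    k1 = np.maximum(conv2d_same(x, w_key), 0.0)                       # key_embed: k x k conv + ReLU
    v = np.einsum('oc,chw->ohw', w_value, x).reshape(c, h * w)        # value_embed, flattened to (C, H*W)
    y = np.concatenate([k1, x], axis=0)                               # concat K^1 with Q (= x)
    hidden = np.maximum(np.einsum('oc,chw->ohw', w_theta, y), 0.0)
    att = np.einsum('oc,chw->ohw', w_delta, hidden)                   # (k*k*C, H, W)
    att = att.reshape(c, k * k, h, w).mean(axis=1).reshape(c, h * w)  # average over the window
    k2 = softmax(att, axis=-1) * v                                    # softmax over all H*W positions
    return k1 + k2.reshape(c, h, w)


rng = np.random.default_rng(0)
C, H, W, k, factor = 8, 7, 7, 3, 4
x = rng.standard_normal((C, H, W))
w_key = rng.standard_normal((C, C, k, k)) * 0.1
w_value = rng.standard_normal((C, C)) * 0.1
w_theta = rng.standard_normal((2 * C // factor, 2 * C)) * 0.1
w_delta = rng.standard_normal((k * k * C, 2 * C // factor)) * 0.1
out = cot_forward_simplified(x, w_key, w_value, w_theta, w_delta, k)
print(out.shape)  # (8, 7, 7)
```

Setting `w_delta` to zeros makes every softmax weight equal to 1/(H·W), so the output reduces to $K^1 + V/(HW)$; that is a quick way to see that the normalization here is global rather than confined to a k×k window.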

```python
import os

import numpy as np
import paddle
from PIL import Image


class ILSVRC2012(paddle.io.Dataset):
    """ImageNet-style dataset read from a label list with one "<relative image path> <class id>" per line."""

    def __init__(self, root, label_list, transform):
        super().__init__()
        self.transform = transform
        self.root = root
        self.label_list = label_list
        self.load_datas()

    def load_datas(self):
        self.imgs = []
        self.labels = []
        with open(self.label_list, 'r') as f:
            for line in f:
                line = line.strip()
                if not line:
                    continue  # skip blank lines
                img, label = line.rsplit(' ', 1)
                self.imgs.append(os.path.join(self.root, img))
                self.labels.append(int(label))

    def __getitem__(self, idx):
        label = self.labels[idx]
        image = Image.open(self.imgs[idx]).convert('RGB')
        image = self.transform(image)
        return image.astype('float32'), np.array(label).astype('int64')

    def __len__(self):
        return len(self.imgs)


# 1. Build the model
network = cotnet50(num_classes=1000)
# Wrap it with Paddle's high-level API
model = paddle.Model(network)
model.summary((1, 3, 224, 224))  # print the model structure
```

```text
W0426 17:09:21.611791  1204 device_context.cc:447] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1
W0426 17:09:21.615837  1204 device_context.cc:465] device: 0, cuDNN Version: 7.6.
-----------------------------------------------------------------
Layer (type)    Input Shape          Output Shape       Param #
=================================================================
Conv2D-1        [[1, 3, 224, 224]]   [1, 64, 112, 112]  9,408
BatchNorm2D-1   [[1, 64, 112, 112]]  [1, 64, 112, 112]  256
ReLU-1          [[1, 64, 112, 112]]  [1, 64, 112, 112]  0
MaxPool2D-1     [[1, 64, 112, 112]]  [1, 64, 56, 56]    0
Conv2D-3        [[1, 64, 56, 56]]    [1, 64, 56, 56]    4,096
BatchNorm2D-3   [[1, 64, 56, 56]]    [1, 64, 56, 56]    256
ReLU-4          [[1, 256, 56, 56]]   [1, 256, 56, 56]   0
Conv2D-4        [[1, 64, 56, 56]]    [1, 64, 56, 56]    36,864
BatchNorm2D-4   [[1, 64, 56, 56]]    [1, 64, 56, 56]    256
ReLU-2          [[1, 64, 56, 56]]    [1, 64, 56, 56]    0
Conv2D-5        [[1, 64, 56, 56]]    [1, 64, 56, 56]    4,096
BatchNorm2D-5   [[1, 64, 56, 56]]    [1, 64, 56, 56]    256
Conv2D-6        [[1, 128, 56, 56]]   [1, 32, 56, 56]    4,096
BatchNorm2D-6   [[1, 32, 56, 56]]    [1, 32, 56, 56]    128
ReLU-3          [[1, 32, 56, 56]]    [1, 32, 56, 56]    0
Conv2D-7        [[1, 32, 56, 56]]    [1, 576, 56, 56]   19,008
CoTNetLayer-1   [[1, 64, 56, 56]]    [1, 64, 56, 56]    0
BatchNorm2D-7   [[1, 64, 56, 56]]    [1, 64, 56, 56]    256
Conv2D-8        [[1, 64, 56, 56]]    [1, 256, 56, 56]   16,384
BatchNorm2D-8   [[1, 256, 56, 56]]   [1, 256, 56, 56]   1,024
Conv2D-2        [[1, 64, 56, 56]]    [1, 256, 56, 56]   16,384
BatchNorm2D-2   [[1, 256, 56, 56]]   [1, 256, 56, 56]   1,024
Bottleneck-1    [[1, 64, 56, 56]]    [1, 256, 56, 56]   0
Conv2D-9        [[1, 256, 56, 56]]   [1, 64, 56, 56]    16,384
BatchNorm2D-9   [[1, 64, 56, 56]]    [1, 64, 56, 56]    256
ReLU-7          [[1, 256, 56, 56]]   [1, 256, 56, 56]   0
Conv2D-10       [[1, 64, 56, 56]]    [1, 64, 56, 56]    36,864
BatchNorm2D-10  [[1, 64, 56, 56]]    [1, 64, 56, 56]    256
ReLU-5          [[1, 64, 56, 56]]    [1, 64, 56, 56]    0
Conv2D-11       [[1, 64, 56, 56]]    [1, 64, 56, 56]    4,096
BatchNorm2D-11  [[1, 64, 56, 56]]    [1, 64, 56, 56]    256
Conv2D-12       [[1, 128, 56, 56]]   [1, 32, 56, 56]    4,096
BatchNorm2D-12  [[1, 32, 56, 56]]    [1, 32, 56, 56]    128
ReLU-6          [[1, 32, 56, 56]]    [1, 32, 56, 56]    0
Conv2D-13       [[1, 32, 56, 56]]    [1, 576, 56, 56]   19,008
CoTNetLayer-2   [[1, 64, 56, 56]]    [1, 64, 56, 56]    0
BatchNorm2D-13  [[1, 64, 56, 56]]    [1, 64, 56, 56]    256
Conv2D-14       [[1, 64, 56, 56]]    [1, 256, 56, 56]   16,384
BatchNorm2D-14  [[1, 256, 56, 56]]   [1, 256, 56, 56]   1,024
Bottleneck-2    [[1, 256, 56, 56]]   [1, 256, 56, 56]   0
Conv2D-15       [[1, 256, 56, 56]]   [1, 64, 56, 56]    16,384
BatchNorm2D-15  [[1, 64, 56, 56]]    [1, 64, 56, 56]    256
ReLU-10         [[1, 256, 56, 56]]   [1, 256, 56, 56]   0
Conv2D-16       [[1, 64, 56, 56]]    [1, 64, 56, 56]    36,864
BatchNorm2D-16  [[1, 64, 56, 56]]    [1, 64, 56, 56]    256
ReLU-8          [[1, 64, 56, 56]]    [1, 64, 56, 56]    0
Conv2D-17       [[1, 64, 56, 56]]    [1, 64, 56, 56]    4,096
BatchNorm2D-17  [[1, 64, 56, 56]]    [1, 64, 56, 56]    256
Conv2D-18       [[1, 128, 56, 56]]   [1, 32, 56, 56]    4,096
BatchNorm2D-18  [[1, 32, 56, 56]]    [1, 32, 56, 56]    128
ReLU-9          [[1, 32, 56, 56]]    [1, 32, 56, 56]    0
Conv2D-19       [[1, 32, 56, 56]]    [1, 576, 56, 56]   19,008
CoTNetLayer-3   [[1, 64, 56, 56]]    [1, 64, 56, 56]    0
BatchNorm2D-19  [[1, 64, 56, 56]]    [1, 64, 56, 56]    256
Conv2D-20       [[1, 64, 56, 56]]    [1, 256, 56, 56]   16,384
BatchNorm2D-20  [[1, 256, 56, 56]]   [1, 256, 56, 56]   1,024
Bottleneck-3    [[1, 256, 56, 56]]   [1, 256, 56, 56]   0
Conv2D-22       [[1, 256, 56, 56]]   [1, 128, 56, 56]   32,768
BatchNorm2D-22  [[1, 128, 56, 56]]   [1, 128, 56, 56]   512
ReLU-13         [[1, 512, 28, 28]]   [1, 512, 28, 28]   0
AvgPool2D-1     [[1, 128, 56, 56]]   [1, 128, 28, 28]   0
Conv2D-23       [[1, 128, 28, 28]]   [1, 128, 28, 28]   147,456
BatchNorm2D-23  [[1, 128, 28, 28]]   [1, 128, 28, 28]   512
ReLU-11         [[1, 128, 28, 28]]   [1, 128, 28, 28]   0
Conv2D-24       [[1, 128, 28, 28]]   [1, 128, 28, 28]   16,384
BatchNorm2D-24  [[1, 128, 28, 28]]   [1, 128, 28, 28]   512
Conv2D-25       [[1, 256, 28, 28]]   [1, 64, 28, 28]    16,384
BatchNorm2D-25  [[1, 64, 28, 28]]    [1, 64, 28, 28]    256
ReLU-12         [[1, 64, 28, 28]]    [1, 64, 28, 28]    0
Conv2D-26       [[1, 64, 28, 28]]    [1, 1152, 28, 28]  74,880
CoTNetLayer-4   [[1, 128, 28, 28]]   [1, 128, 28, 28]   0
BatchNorm2D-26  [[1, 128, 28, 28]]   [1, 128, 28, 28]   512
Conv2D-27       [[1, 128, 28, 28]]   [1, 512, 28, 28]   65,536
BatchNorm2D-27  [[1, 512, 28, 28]]   [1, 512, 28, 28]   2,048
Conv2D-21       [[1, 256, 56, 56]]   [1, 512, 28, 28]   131,072
BatchNorm2D-21  [[1, 512, 28, 28]]   [1, 512, 28, 28]   2,048
Bottleneck-4    [[1, 256, 56, 56]]   [1, 512, 28, 28]   0
Conv2D-28       [[1, 512, 28, 28]]   [1, 128, 28, 28]   65,536
BatchNorm2D-28  [[1, 128, 28, 28]]   [1, 128, 28, 28]   512
ReLU-16         [[1, 512, 28, 28]]   [1, 512, 28, 28]   0
Conv2D-29       [[1, 128, 28, 28]]   [1, 128, 28, 28]   147,456
BatchNorm2D-29  [[1, 128, 28, 28]]   [1, 128, 28, 28]   512
ReLU-14         [[1, 128, 28, 28]]   [1, 128, 28, 28]   0
Conv2D-30       [[1, 128, 28, 28]]   [1, 128, 28, 28]   16,384
BatchNorm2D-30  [[1, 128, 28, 28]]   [1, 128, 28, 28]   512
Conv2D-31       [[1, 256, 28, 28]]   [1, 64, 28, 28]    16,384
BatchNorm2D-31  [[1, 64, 28, 28]]    [1, 64, 28, 28]    256
ReLU-15         [[1, 64, 28, 28]]    [1, 64, 28, 28]    0
Conv2D-32       [[1, 64, 28, 28]]    [1, 1152, 28, 28]  74,880
CoTNetLayer-5   [[1, 128, 28, 28]]   [1, 128, 28, 28]   0
BatchNorm2D-32  [[1, 128, 28, 28]]   [1, 128, 28, 28]   512
Conv2D-33       [[1, 128, 28, 28]]   [1, 512, 28, 28]   65,536
BatchNorm2D-33  [[1, 512, 28, 28]]   [1, 512, 28, 28]   2,048
Bottleneck-5    [[1, 512, 28, 28]]   [1, 512, 28, 28]   0
Conv2D-34       [[1, 512, 28, 28]]   [1, 128, 28, 28]   65,536
BatchNorm2D-34  [[1, 128, 28, 28]]   [1, 128, 28, 28]   512
ReLU-19         [[1, 512, 28, 28]]   [1, 512, 28, 28]   0
Conv2D-35       [[1, 128, 28, 28]]   [1, 128, 28, 28]   147,456
BatchNorm2D-35  [[1, 128, 28, 28]]   [1, 128, 28, 28]   512
ReLU-17         [[1, 128, 28, 28]]   [1, 128, 28, 28]   0
Conv2D-36       [[1, 128, 28, 28]]   [1, 128, 28, 28]   16,384
BatchNorm2D-36  [[1, 128, 28, 28]]   [1, 128, 28, 28]   512
Conv2D-37       [[1, 256, 28, 28]]   [1, 64, 28, 28]    16,384
BatchNorm2D-37  [[1, 64, 28, 28]]    [1, 64, 28, 28]    256
ReLU-18         [[1, 64, 28, 28]]    [1, 64, 28, 28]    0
Conv2D-38       [[1, 64, 28, 28]]    [1, 1152, 28, 28]  74,880
CoTNetLayer-6   [[1, 128, 28, 28]]   [1, 128, 28, 28]   0
BatchNorm2D-38  [[1, 128, 28, 28]]   [1, 128, 28, 28]   512
Conv2D-39       [[1, 128, 28, 28]]   [1, 512, 28, 28]   65,536
BatchNorm2D-39  [[1, 512, 28, 28]]   [1, 512, 28, 28]   2,048
Bottleneck-6    [[1, 512, 28, 28]]   [1, 512, 28, 28]   0
Conv2D-40       [[1, 512, 28, 28]]   [1, 128, 28, 28]   65,536
BatchNorm2D-40  [[1, 128, 28, 28]]   [1, 128, 28, 28]   512
ReLU-22         [[1, 512, 28, 28]]   [1, 512, 28, 28]   0
Conv2D-41       [[1, 128, 28, 28]]   [1, 128, 28, 28]   147,456
BatchNorm2D-41  [[1, 128, 28, 28]]   [1, 128, 28, 28]   512
ReLU-20         [[1, 128, 28, 28]]   [1, 128, 28, 28]   0
Conv2D-42       [[1, 128, 28, 28]]   [1, 128, 28, 28]   16,384
BatchNorm2D-42  [[1, 128, 28, 28]]   [1, 128, 28, 28]   512
Conv2D-43       [[1, 256, 28, 28]]   [1, 64, 28, 28]    16,384
BatchNorm2D-43  [[1, 64, 28, 28]]    [1, 64, 28, 28]    256
ReLU-21         [[1, 64, 28, 28]]    [1, 64, 28, 28]    0
Conv2D-44       [[1, 64, 28, 28]]    [1, 1152, 28, 28]  74,880
CoTNetLayer-7   [[1, 128, 28, 28]]   [1, 128, 28, 28]   0
BatchNorm2D-44  [[1, 128, 28, 28]]   [1, 128, 28, 28]   512
Conv2D-45       [[1, 128, 28, 28]]   [1, 512, 28, 28]   65,536
BatchNorm2D-45  [[1, 512, 28, 28]]   [1, 512, 28, 28]   2,048
Bottleneck-7    [[1, 512, 28, 28]]   [1, 512, 28, 28]   0
Conv2D-47       [[1, 512, 28, 28]]   [1, 256, 28, 28]   131,072
BatchNorm2D-47  [[1, 256, 28, 28]]   [1, 256, 28, 28]   1,024
ReLU-25         [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  0
AvgPool2D-2     [[1, 256, 28, 28]]   [1, 256, 14, 14]   0
Conv2D-48       [[1, 256, 14, 14]]   [1, 256, 14, 14]   589,824
BatchNorm2D-48  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
ReLU-23         [[1, 256, 14, 14]]   [1, 256, 14, 14]   0
Conv2D-49       [[1, 256, 14, 14]]   [1, 256, 14, 14]   65,536
BatchNorm2D-49  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
Conv2D-50       [[1, 512, 14, 14]]   [1, 128, 14, 14]   65,536
BatchNorm2D-50  [[1, 128, 14, 14]]   [1, 128, 14, 14]   512
ReLU-24         [[1, 128, 14, 14]]   [1, 128, 14, 14]   0
Conv2D-51       [[1, 128, 14, 14]]   [1, 2304, 14, 14]  297,216
CoTNetLayer-8   [[1, 256, 14, 14]]   [1, 256, 14, 14]   0
BatchNorm2D-51  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
Conv2D-52       [[1, 256, 14, 14]]   [1, 1024, 14, 14]  262,144
BatchNorm2D-52  [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  4,096
Conv2D-46       [[1, 512, 28, 28]]   [1, 1024, 14, 14]  524,288
BatchNorm2D-46  [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  4,096
Bottleneck-8    [[1, 512, 28, 28]]   [1, 1024, 14, 14]  0
Conv2D-53       [[1, 1024, 14, 14]]  [1, 256, 14, 14]   262,144
BatchNorm2D-53  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
ReLU-28         [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  0
Conv2D-54       [[1, 256, 14, 14]]   [1, 256, 14, 14]   589,824
BatchNorm2D-54  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
ReLU-26         [[1, 256, 14, 14]]   [1, 256, 14, 14]   0
Conv2D-55       [[1, 256, 14, 14]]   [1, 256, 14, 14]   65,536
BatchNorm2D-55  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
Conv2D-56       [[1, 512, 14, 14]]   [1, 128, 14, 14]   65,536
BatchNorm2D-56  [[1, 128, 14, 14]]   [1, 128, 14, 14]   512
ReLU-27         [[1, 128, 14, 14]]   [1, 128, 14, 14]   0
Conv2D-57       [[1, 128, 14, 14]]   [1, 2304, 14, 14]  297,216
CoTNetLayer-9   [[1, 256, 14, 14]]   [1, 256, 14, 14]   0
BatchNorm2D-57  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
Conv2D-58       [[1, 256, 14, 14]]   [1, 1024, 14, 14]  262,144
BatchNorm2D-58  [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  4,096
Bottleneck-9    [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  0
Conv2D-59       [[1, 1024, 14, 14]]  [1, 256, 14, 14]   262,144
BatchNorm2D-59  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
ReLU-31         [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  0
Conv2D-60       [[1, 256, 14, 14]]   [1, 256, 14, 14]   589,824
BatchNorm2D-60  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
ReLU-29         [[1, 256, 14, 14]]   [1, 256, 14, 14]   0
Conv2D-61       [[1, 256, 14, 14]]   [1, 256, 14, 14]   65,536
BatchNorm2D-61  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
Conv2D-62       [[1, 512, 14, 14]]   [1, 128, 14, 14]   65,536
BatchNorm2D-62  [[1, 128, 14, 14]]   [1, 128, 14, 14]   512
ReLU-30         [[1, 128, 14, 14]]   [1, 128, 14, 14]   0
Conv2D-63       [[1, 128, 14, 14]]   [1, 2304, 14, 14]  297,216
CoTNetLayer-10  [[1, 256, 14, 14]]   [1, 256, 14, 14]   0
BatchNorm2D-63  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
Conv2D-64       [[1, 256, 14, 14]]   [1, 1024, 14, 14]  262,144
BatchNorm2D-64  [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  4,096
Bottleneck-10   [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  0
Conv2D-65       [[1, 1024, 14, 14]]  [1, 256, 14, 14]   262,144
BatchNorm2D-65  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
ReLU-34         [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  0
Conv2D-66       [[1, 256, 14, 14]]   [1, 256, 14, 14]   589,824
BatchNorm2D-66  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
ReLU-32         [[1, 256, 14, 14]]   [1, 256, 14, 14]   0
Conv2D-67       [[1, 256, 14, 14]]   [1, 256, 14, 14]   65,536
BatchNorm2D-67  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
Conv2D-68       [[1, 512, 14, 14]]   [1, 128, 14, 14]   65,536
BatchNorm2D-68  [[1, 128, 14, 14]]   [1, 128, 14, 14]   512
ReLU-33         [[1, 128, 14, 14]]   [1, 128, 14, 14]   0
Conv2D-69       [[1, 128, 14, 14]]   [1, 2304, 14, 14]  297,216
CoTNetLayer-11  [[1, 256, 14, 14]]   [1, 256, 14, 14]   0
BatchNorm2D-69  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
Conv2D-70       [[1, 256, 14, 14]]   [1, 1024, 14, 14]  262,144
BatchNorm2D-70  [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  4,096
Bottleneck-11   [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  0
Conv2D-71       [[1, 1024, 14, 14]]  [1, 256, 14, 14]   262,144
BatchNorm2D-71  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
ReLU-37         [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  0
Conv2D-72       [[1, 256, 14, 14]]   [1, 256, 14, 14]   589,824
BatchNorm2D-72  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
ReLU-35         [[1, 256, 14, 14]]   [1, 256, 14, 14]   0
Conv2D-73       [[1, 256, 14, 14]]   [1, 256, 14, 14]   65,536
BatchNorm2D-73  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
Conv2D-74       [[1, 512, 14, 14]]   [1, 128, 14, 14]   65,536
BatchNorm2D-74  [[1, 128, 14, 14]]   [1, 128, 14, 14]   512
ReLU-36         [[1, 128, 14, 14]]   [1, 128, 14, 14]   0
Conv2D-75       [[1, 128, 14, 14]]   [1, 2304, 14, 14]  297,216
CoTNetLayer-12  [[1, 256, 14, 14]]   [1, 256, 14, 14]   0
BatchNorm2D-75  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
Conv2D-76       [[1, 256, 14, 14]]   [1, 1024, 14, 14]  262,144
BatchNorm2D-76  [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  4,096
Bottleneck-12   [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  0
Conv2D-77       [[1, 1024, 14, 14]]  [1, 256, 14, 14]   262,144
BatchNorm2D-77  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
ReLU-40         [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  0
Conv2D-78       [[1, 256, 14, 14]]   [1, 256, 14, 14]   589,824
BatchNorm2D-78  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
ReLU-38         [[1, 256, 14, 14]]   [1, 256, 14, 14]   0
Conv2D-79       [[1, 256, 14, 14]]   [1, 256, 14, 14]   65,536
BatchNorm2D-79  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
Conv2D-80       [[1, 512, 14, 14]]   [1, 128, 14, 14]   65,536
BatchNorm2D-80  [[1, 128, 14, 14]]   [1, 128, 14, 14]   512
ReLU-39         [[1, 128, 14, 14]]   [1, 128, 14, 14]   0
Conv2D-81       [[1, 128, 14, 14]]   [1, 2304, 14, 14]  297,216
CoTNetLayer-13  [[1, 256, 14, 14]]   [1, 256, 14, 14]   0
BatchNorm2D-81  [[1, 256, 14, 14]]   [1, 256, 14, 14]   1,024
Conv2D-82       [[1, 256, 14, 14]]   [1, 1024, 14, 14]  262,144
BatchNorm2D-82  [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  4,096
Bottleneck-13   [[1, 1024, 14, 14]]  [1, 1024, 14, 14]  0
Conv2D-84       [[1, 1024, 14, 14]]  [1, 512, 14, 14]   524,288
BatchNorm2D-84  [[1, 512, 14, 14]]   [1, 512, 14, 14]   2,048
ReLU-43         [[1, 2048, 7, 7]]    [1, 2048, 7, 7]    0
AvgPool2D-3     [[1, 512, 14, 14]]   [1, 512, 7, 7]     0
Conv2D-85       [[1, 512, 7, 7]]     [1, 512, 7, 7]     2,359,296
BatchNorm2D-85  [[1, 512, 7, 7]]     [1, 512, 7, 7]     2,048
ReLU-41         [[1, 512, 7, 7]]     [1, 512, 7, 7]     0
Conv2D-86       [[1, 512, 7, 7]]     [1, 512, 7, 7]     262,144
BatchNorm2D-86  [[1, 512, 7, 7]]     [1, 512, 7, 7]     2,048
Conv2D-87       [[1, 1024, 7, 7]]    [1, 256, 7, 7]     262,144
BatchNorm2D-87  [[1, 256, 7, 7]]     [1, 256, 7, 7]     1,024
ReLU-42         [[1, 256, 7, 7]]     [1, 256, 7, 7]     0
Conv2D-88       [[1, 256, 7, 7]]     [1, 4608, 7, 7]    1,184,256
CoTNetLayer-14  [[1, 512, 7, 7]]     [1, 512, 7, 7]     0
BatchNorm2D-88  [[1, 512, 7, 7]]     [1, 512, 7, 7]     2,048
Conv2D-89       [[1, 512, 7, 7]]     [1, 2048, 7, 7]    1,048,576
BatchNorm2D-89  [[1, 2048, 7, 7]]    [1, 2048, 7, 7]    8,192
Conv2D-83       [[1, 1024, 14, 14]]  [1, 2048, 7, 7]    2,097,152
BatchNorm2D-83  [[1, 2048, 7, 7]]    [1, 2048, 7, 7]    8,192
Bottleneck-14   [[1, 1024, 14, 14]]  [1, 2048, 7, 7]    0
Conv2D-90       [[1, 2048, 7, 7]]    [1, 512, 7, 7]     1,048,576
BatchNorm2D-90  [[1, 512, 7, 7]]     [1, 512, 7, 7]     2,048
ReLU-46         [[1, 2048, 7, 7]]    [1, 2048, 7, 7]    0
Conv2D-91       [[1, 512, 7, 7]]     [1, 512, 7, 7]     2,359,296
BatchNorm2D-91  [[1, 512, 7, 7]]     [1, 512, 7, 7]     2,048
ReLU-44         [[1, 512, 7, 7]]     [1, 512, 7, 7]     0
Conv2D-92       [[1, 512, 7, 7]]     [1, 512, 7, 7]     262,144
BatchNorm2D-92  [[1, 512, 7, 7]]     [1, 512, 7, 7]     2,048
Conv2D-93       [[1, 1024, 7, 7]]    [1, 256, 7, 7]     262,144
BatchNorm2D-93  [[1, 256, 7, 7]]     [1, 256, 7, 7]     1,024
ReLU-45         [[1, 256, 7, 7]]     [1, 256, 7, 7]     0
Conv2D-94       [[1, 256, 7, 7]]     [1, 4608, 7, 7]    1,184,256
CoTNetLayer-15  [[1, 512, 7, 7]]     [1, 512, 7, 7]     0
BatchNorm2D-94  [[1, 512, 7, 7]]     [1, 512, 7, 7]     2,048
Conv2D-95       [[1, 512, 7, 7]]     [1, 2048, 7, 7]    1,048,576
BatchNorm2D-95  [[1, 2048, 7, 7]]    [1, 2048, 7, 7]    8,192
Bottleneck-15   [[1, 2048, 7, 7]]    [1, 2048, 7, 7]    0
Conv2D-96       [[1, 2048, 7, 7]]    [1, 512, 7, 7]     1,048,576
BatchNorm2D-96  [[1, 512, 7, 7]]     [1, 512, 7, 7]     2,048
ReLU-49         [[1, 2048, 7, 7]]    [1, 2048, 7, 7]    0
Conv2D-97       [[1, 512, 7, 7]]     [1, 512, 7, 7]     2,359,296
BatchNorm2D-97  [[1, 512, 7, 7]]     [1, 512, 7, 7]     2,048
ReLU-47         [[1, 512, 7, 7]]     [1, 512, 7, 7]     0
Conv2D-98       [[1, 512, 7, 7]]     [1, 512, 7, 7]     262,144
BatchNorm2D-98  [[1, 512, 7, 7]]     [1, 512, 7, 7]     2,048
Conv2D-99       [[1, 1024, 7, 7]]    [1, 256, 7, 7]     262,144
BatchNorm2D-99  [[1, 256, 7, 7]]     [1, 256, 7, 7]     1,024
ReLU-48         [[1, 256, 7, 7]]     [1, 256, 7, 7]     0
Conv2D-100      [[1, 256, 7, 7]]     [1, 4608, 7, 7]    1,184,256
CoTNetLayer-16  [[1, 512, 7, 7]]     [1, 512, 7, 7]     0
BatchNorm2D-100 [[1, 512, 7, 7]]     [1, 512, 7, 7]     2,048
Conv2D-101      [[1, 512, 7, 7]]     [1, 2048, 7, 7]    1,048,576
BatchNorm2D-101 [[1, 2048, 7, 7]]    [1, 2048, 7, 7]    8,192
Bottleneck-16   [[1, 2048, 7, 7]]    [1, 2048, 7, 7]    0
AvgPool2D-4     [[1, 2048, 7, 7]]    [1, 2048, 1, 1]    0
Linear-1        [[1, 2048]]          [1, 1000]          2,049,000
=================================================================
Total params: 33,855,464
Trainable params: 33,711,464
Non-trainable params: 144,000
-----------------------------------------------------------------
Input size (MB): 0.57
Forward/backward pass size (MB): 426.00
Params size (MB): 129.15
Estimated Total Size (MB): 555.72
-----------------------------------------------------------------
{'total_params': 33855464, 'trainable_params': 33711464}
```

```python
# 2. Data preprocessing
import paddle.vision.transforms as T

train_transforms = T.Compose([
    T.Resize(256, interpolation='bicubic'),
    T.CenterCrop(224),
    T.ToTensor(),
    T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
])
train_dataset = ILSVRC2012('ILSVRC2012mini', transform=train_transforms, label_list='ILSVRC2012mini/train_list.txt')

val_transforms = T.Compose([
    T.Resize(256, interpolation='bicubic'),
    T.CenterCrop(224),
    T.ToTensor(),
    T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
])
val_dataset = ILSVRC2012('ILSVRC2012mini', transform=val_transforms, label_list='ILSVRC2012mini/val_list.txt')

EPOCHS = 100
BATCH_SIZE = 64


# Optimizer
def create_optim(parameters):
    step_each_epoch = 40000 // BATCH_SIZE  # the author's figure for the number of training images
    lr = paddle.optimizer.lr.CosineAnnealingDecay(learning_rate=0.01, T_max=step_each_epoch * EPOCHS)
    return paddle.optimizer.Momentum(
        learning_rate=lr,
        parameters=parameters,
        weight_decay=paddle.regularizer.L2Decay(1e-4))  # L2 weight decay (1e-4 is a common setting for ImageNet)


# Training configuration
model.prepare(create_optim(network.parameters()),
              paddle.nn.CrossEntropyLoss(),         # loss function
              paddle.metric.Accuracy(topk=(1, 5)))  # evaluation metric: top-1 / top-5 accuracy

# Visualize training with VisualDL
callback = paddle.callbacks.VisualDL(log_dir='visualdl_log_dir')

# Launch the full training loop
model.fit(train_dataset,             # training set
          val_dataset,               # evaluation set
          epochs=EPOCHS,             # total number of epochs
          batch_size=BATCH_SIZE,     # samples per batch
          shuffle=True,              # shuffle the training set
          verbose=1,                 # logging format
          save_dir='./chk_points/',  # where checkpoints are saved during training
          callbacks=[callback])      # callbacks
```
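The schedule built in `create_optim` decays the learning rate from 0.01 toward 0 over `T_max = 625 * 100 = 62,500` steps. A plain-Python sketch of the standard closed-form cosine-annealing formula, which is the schedule `CosineAnnealingDecay` implements (with `eta_min` defaulting to 0), assuming the scheduler is advanced once per batch, as the `T_max = step_each_epoch * EPOCHS` setting implies; `cosine_lr` is a hypothetical helper.

```python
import math


def cosine_lr(step, base_lr=0.01, t_max=62500, eta_min=0.0):
    """Cosine annealing: eta_min + 0.5 * (base_lr - eta_min) * (1 + cos(pi * step / t_max))."""
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * step / t_max))


steps_per_epoch = 40000 // 64   # 625, as computed in create_optim
t_max = steps_per_epoch * 100   # 62500 steps for 100 epochs
for epoch in (0, 25, 50, 75, 100):
    print(epoch, round(cosine_lr(epoch * steps_per_epoch, t_max=t_max), 6))
```

The rate halves to 0.005 at epoch 50 and reaches 0 at the final step, so most of the decay happens in the middle of training rather than at the ends.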

