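This article reproduces the GreedyHash algorithm in PaddlePaddle. GreedyHash targets the NP-hard discrete optimization problem in hashing-based image retrieval. On the CIFAR-10 (I) dataset, the 12/24/32/48-bit models reach mAP of 0.798, 0.809, 0.817, and 0.819 (0.824 in the best 48-bit run). The 12/24/32-bit results exceed both the original paper and a PyTorch rerun; the 48-bit result is slightly below the paper's 0.822 in the logged run and equals the rerun's 0.824 in the best run. Complete code and weights are included.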


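[Paper Reproduction: Image Classification and Retrieval] GreedyHash (NeurIPS 2018) implemented in PaddlePaddle

Original paper: Greedy Hash: Towards Fast Optimization for Accurate Hash Coding in CNN.

Official code (PyTorch): GreedyHash.

Third-party reference code (PyTorch): DeepHash-pytorch.

GitHub repo for this project: paddle_greedyhash

1. Introduction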

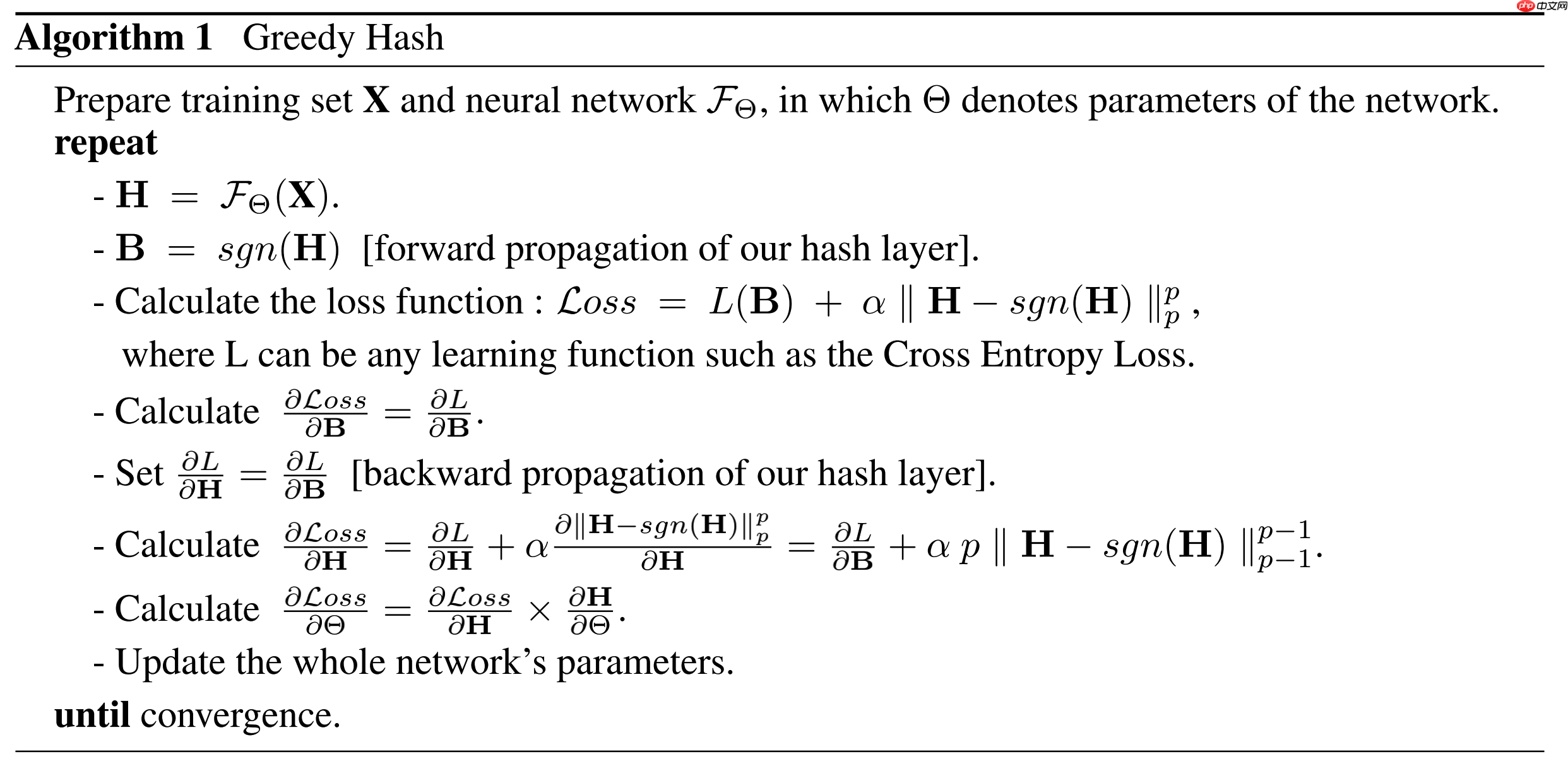

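GreedyHash addresses the NP-hard optimization problem in deep hashing for image retrieval. To do so, the authors update the network parameters in each iteration toward the likely optimal discrete solution. Concretely, GreedyHash adds a hash coding layer to the network. In the forward pass, the layer applies the sign function strictly, so the discrete constraint always holds. In the backward pass, the gradient is passed intact to the preceding layer, which avoids vanishing gradients. The algorithm's pseudocode is shown below.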

GreedyHash algorithm pseudocode
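The forward/backward behaviour described above can be illustrated with a small framework-free sketch. It is not the code in models/greedyhash.py: the class name `GreedyHashLayer`, the helper `quantization_penalty`, and the choice to map 0 to +1 are assumptions made for illustration. The penalty follows my reading of the paper (a term of the form α‖h − sign(h)‖ᵖ with p = 3), which the `alpha=0.1` argument in the training log presumably weights.

```python
def sign(x):
    """Return +1.0 for non-negative inputs and -1.0 otherwise (0 -> +1 is an assumption)."""
    return 1.0 if x >= 0 else -1.0


class GreedyHashLayer:
    """Hash layer sketch: sign() in the forward pass, identity gradient in the backward pass."""

    def forward(self, h):
        # Forward: binarize every continuous activation into a {-1, +1} code bit.
        return [sign(v) for v in h]

    def backward(self, grad_b):
        # Backward: hand the gradient w.r.t. the codes to h unchanged,
        # instead of using the (almost everywhere zero) derivative of sign().
        return list(grad_b)


def quantization_penalty(h, p=3):
    """Mean of | |h_i| - 1 |^p, which pulls each activation toward -1 or +1."""
    return sum(abs(abs(v) - 1.0) ** p for v in h) / len(h)


layer = GreedyHashLayer()
codes = layer.forward([0.3, -1.2, 0.0, 2.0])
grads = layer.backward([0.1, -0.2, 0.3, 0.4])
print(codes)  # [1.0, -1.0, 1.0, 1.0]
print(grads)  # [0.1, -0.2, 0.3, 0.4]
```

In a deep learning framework the same pair would normally live in a custom autograd layer, so that the optimizer sees the pass-through gradient automatically.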

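2. Dataset and reproduced accuracy

Dataset: cifar10-1 (i.e., CIFAR-10 (I))

The CIFAR-10 dataset has 10 classes and consists of 60,000 32×32 color images.

In CIFAR-10 (I), 1,000 images (100 per class) form the query set and the remaining 59,000 images form the database; 5,000 images (500 per class) are randomly sampled from the database as the training set. See utils/datasets.py for the dataset-processing code.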
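As a rough illustration of this split (not the actual logic in utils/datasets.py), the sketch below partitions sample indices by class. The function name `split_cifar10_i`, its parameters, the random choice of query images, and the synthetic label list are all assumptions for the example; no images are loaded.

```python
import random
from collections import defaultdict


def split_cifar10_i(labels, n_query_per_class=100, n_train_per_class=500, seed=0):
    """Split sample indices into class-balanced query, database, and training sets."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    query, database, train = [], [], []
    for label in sorted(by_class):
        idxs = by_class[label][:]
        rng.shuffle(idxs)
        class_query = idxs[:n_query_per_class]
        class_db = idxs[n_query_per_class:]
        query.extend(class_query)
        database.extend(class_db)
        # Training images are sampled from the database, never from the query set.
        train.extend(rng.sample(class_db, n_train_per_class))
    return query, database, train


# Synthetic labels standing in for CIFAR-10: 10 classes x 6,000 images.
labels = [i % 10 for i in range(60000)]
query, database, train = split_cifar10_i(labels)
print(len(query), len(database), len(train))  # 1000 59000 5000
```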

Reproduced accuracy

| | Framework | 12bits | 24bits | 32bits | 48bits |
|---|---|---|---|---|---|
| Paper | PyTorch | 0.774 | 0.795 | 0.810 | 0.822 |
| Rerun | PyTorch | 0.789 | 0.799 | 0.813 | 0.824 |
| This reproduction | PaddlePaddle | 0.798 | 0.809 | 0.817 | 0.819 (0.824) |

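Note that when I reran the PyTorch version, the original paper's code GreedyHash/cifar1.py targets an old PyTorch release and its CIFAR-10 data-processing code no longer runs. I therefore copied the CIFAR-10 data-processing code from the third-party DeepHash-pytorch repo, which made the rerun possible; the results are listed in the table above. For transparency, the modified PyTorch code and training logs are in pytorch_greedyhash/main.py and pytorch_greedyhash/logs. I forgot to set a random seed for that run, so results may deviate somewhat when reproduced, but they should stay within an acceptable range.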

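The results of this project (PaddlePaddle), trained in sequence for 12/24/32/48 bits, are listed in the table above; the trained model parameters and training logs are stored in the output folder. Because a random seed was set for training, the results should in theory be reproducible. In practice, repeated runs still fluctuate: one 48-bit run reached 0.824, and I placed its log and weights under output/bit48_alone. This shows that some nondeterminism remains despite the fixed seed.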

3. Environment setup

My environment:

- Python: 3.7.11
- PaddlePaddle: 2.2.2
- Hardware: 1 × NVIDIA 2080Ti


P.S. Because the dataset is small, I ran everything on a single-GPU machine; multi-GPU code may be added later.

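4. Quick start

step1: Download this project and the trained weights

This project is hosted on AI Studio; you can fork it and run it directly. First, cd into the paddle_greedyhash project folder: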

cd paddle_greedyhash

/home/aistudio/paddle_greedyhash

Alternatively, you can clone this repo from GitHub and run it locally:

git clone https://github.com/hatimwen/paddle_greedyhash.git
cd paddle_greedyhash

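Weights:

The weights total about 1 GB, so I uploaded them to Baidu Netdisk. After downloading, please arrange the weight files as shown in Section 5 (code structure). Alternatively, download only the weights for a single bit length to test that configuration.

Download link: BaiduNetdisk, extraction code: tl1i.

Note: on AI Studio, the bit_48.pdparams weight file has already been uploaded to the output path for convenience.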

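step2: Modify the parameters

- Adjust the arguments configured in main.py (e.g., batch_size) to suit your setup.

step3: Evaluate the model

Download the pretrained models from BaiduNetdisk and arrange them in advance.

Note: on AI Studio, the bit_48.pdparams weight file has already been uploaded, so you can run directly:

# Evaluate the model
! python eval.py --batch-size 32 --bit 48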

W0427 21:33:47.931723 449 device_context.cc:447] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.0, Runtime API Version: 10.1
W0427 21:33:47.935976 449 device_context.cc:465] device: 0, cuDNN Version: 7.6.
Loading AlexNet state from path: /home/aistudio/paddle_greedyhash/models/AlexNet_pretrained.pdparams
0427 09:33:53 PM Namespace(batch_size=32, bit=48, crop_size=224, dataset='cifar10-1', log_path='logs/', model='GreedyHash', n_class=10, pretrained=None, seed=2000, topK=-1)
0427 09:33:53 PM ----- Pretrained: Load model state from output/bit_48.pdparams
--- Calculating Acc : 100%|█████████████████████| 32/32 [00:02<00:00, 13.36it/s]
--- Compressing(train) : 100%|██████████████| 1844/1844 [01:42<00:00, 17.97it/s]
--- Compressing(test) : 100%|███████████████████| 32/32 [00:02<00:00, 13.89it/s]
--- Calculating mAP : 100%|█████████████████| 1000/1000 [01:23<00:00, 11.94it/s]
0427 09:37:06 PM EVAL-GreedyHash, bit:48, dataset:cifar10-1, MAP:0.819
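The "Calculating mAP" stage in the log ranks the database codes against each query code. A minimal sketch of Hamming-ranking mAP is given below; it is not the implementation in utils/tools.py. The function names, the `top_k` parameter (with `None` meaning the whole database, which I assume corresponds to `topK=-1` in the log), and the toy codes are illustrative assumptions.

```python
def hamming_distance(a, b):
    """Number of positions where two equal-length {-1, +1} codes differ."""
    return sum(1 for x, y in zip(a, b) if x != y)


def mean_average_precision(query_codes, query_labels, db_codes, db_labels, top_k=None):
    """mAP over all queries; a database item is relevant if it shares the query's label."""
    aps = []
    for q_code, q_label in zip(query_codes, query_labels):
        # Rank database items by Hamming distance (stable sort keeps index order on ties).
        order = sorted(range(len(db_codes)),
                       key=lambda i: hamming_distance(q_code, db_codes[i]))
        if top_k is not None:
            order = order[:top_k]
        hits, precisions = 0, []
        for rank, i in enumerate(order, start=1):
            if db_labels[i] == q_label:
                hits += 1
                precisions.append(hits / rank)  # precision at each relevant hit
        if precisions:  # queries without any relevant item are skipped
            aps.append(sum(precisions) / len(precisions))
    return sum(aps) / len(aps) if aps else 0.0


# Toy example with 4-bit codes and two classes.
db_codes = [[1, 1, -1, -1], [1, 1, 1, -1], [-1, -1, 1, 1], [-1, 1, 1, 1]]
db_labels = [0, 1, 1, 0]
query_codes = [[1, 1, -1, -1], [-1, -1, 1, 1]]
query_labels = [0, 1]
m = mean_average_precision(query_codes, query_labels, db_codes, db_labels)
print(round(m, 3))  # 0.833
```

For both toy queries the relevant items land at ranks 1 and 3, so each average precision is (1 + 2/3) / 2 = 5/6.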

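step4: Train the model

- For example, to train the 12-bit model, run:

# Train the model
! python train.py --batch-size 32 --learning_rate 1e-3 --seed 2000 --bit 12
# The run recorded here was interrupted manually once it was confirmed to work.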

W0427 21:38:07.032394 780 device_context.cc:447] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.0, Runtime API Version: 10.1
W0427 21:38:07.036984 780 device_context.cc:465] device: 0, cuDNN Version: 7.6.
Loading AlexNet state from path: /home/aistudio/paddle_greedyhash/models/AlexNet_pretrained.pdparams
0427 09:38:12 PM Namespace(alpha=0.1, batch_size=32, bit=12, crop_size=224, dataset='cifar10-1', epoch=50, epoch_lr_decrease=30, eval_epoch=2, learning_rate=0.001, log_path='logs/', model='GreedyHash', momentum=0.9, n_class=10, num_train=5000, optimizer='SGD', output_dir='checkpoints/', seed=2000, topK=-1, weight_decay=0.0005)
0427 09:38:22 PM GreedyHash[ 1/50][21:38:22] bit:12, lr:0.001000000, dataset:cifar10-1, train loss:1.904
0427 09:38:31 PM GreedyHash[ 2/50][21:38:31] bit:12, lr:0.001000000, dataset:cifar10-1, train loss:1.574
--- Calculating Acc : 100%|█████████████████████| 32/32 [00:02<00:00, 13.48it/s]
--- Compressing(train) : 100%|██████████████| 1844/1844 [01:46<00:00, 17.28it/s]
--- Compressing(test) : 100%|███████████████████| 32/32 [00:02<00:00, 13.81it/s]
--- Calculating mAP : 100%|█████████████████| 1000/1000 [01:14<00:00, 13.39it/s]
0427 09:41:39 PM save in checkpoints/model_best_12
0427 09:41:40 PM GreedyHash epoch:2, bit:12, dataset:cifar10-1, MAP:0.614, Best MAP: 0.614, Acc: 77.000
0427 09:41:51 PM GreedyHash[ 3/50][21:41:51] bit:12, lr:0.001000000, dataset:cifar10-1, train loss:1.316
0427 09:42:00 PM GreedyHash[ 4/50][21:42:00] bit:12, lr:0.001000000, dataset:cifar10-1, train loss:1.120
--- Calculating Acc : 100%|█████████████████████| 32/32 [00:02<00:00, 13.93it/s]
--- Compressing(train) : 46%|██████▊ | 841/1844 [00:49<00:58, 17.28it/s]^C
Traceback (most recent call last):
  File "train.py", line 183, in <module>
    main()
  File "train.py", line 180, in main
    database_loader)
  File "train.py", line 136, in train_val
    mAP, acc = val(model, test_loader, database_loader)
  File "train.py", line 81, in val
    retrievalB, retrievalL, queryB, queryL = compress(database_loader, test_loader, model)
  File "/home/aistudio/paddle_greedyhash/utils/tools.py", line 31, in compress
    _,_, code = model(data)
  File "/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py", line 917, in __call__
    return self._dygraph_call_func(*inputs, **kwargs)
  File "/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py", line 907, in _dygraph_call_func
    outputs = self.forward(*inputs, **kwargs)
  File "/home/aistudio/paddle_greedyhash/models/greedyhash.py", line 67, in forward
    x = self.features(x)
  File "/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py", line 917, in __call__
    return self._dygraph_call_func(*inputs, **kwargs)
  File "/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py", line 907, in _dygraph_call_func
    outputs = self.forward(*inputs, **kwargs)
  File "/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/container.py", line 98, in forward
    input = layer(input)
  File "/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py", line 917, in __call__
    return self._dygraph_call_func(*inputs, **kwargs)
  File "/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py", line 907, in _dygraph_call_func
    outputs = self.forward(*inputs, **kwargs)
  File "/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/conv.py", line 677, in forward
    use_cudnn=self._use_cudnn)
  File "/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/functional/conv.py", line 123, in _conv_nd
    pre_bias = getattr(_C_ops, op_type)(x, weight, *attrs)
KeyboardInterrupt
--- Compressing(train) : 46%|██████▊ | 841/1844 [00:49<00:58, 17.00it/s]
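The training log lists `learning_rate=0.001`, `epoch=50`, and `epoch_lr_decrease=30`. One plausible reading is a step schedule that scales the learning rate once every `epoch_lr_decrease` epochs. The sketch below shows that reading only; the decay factor `gamma=0.1` and the function name `step_decay_lr` are assumptions, and the schedule actually used is defined in utils/lr_scheduler.py.

```python
def step_decay_lr(base_lr, epoch, epoch_lr_decrease, gamma=0.1):
    """Learning rate for a 0-based epoch under a step schedule (gamma is assumed)."""
    return base_lr * (gamma ** (epoch // epoch_lr_decrease))


# Values taken from the Namespace line in the log; the epochs are chosen for illustration.
for epoch in (0, 29, 30, 49):
    lr = step_decay_lr(base_lr=1e-3, epoch=epoch, epoch_lr_decrease=30)
    print(epoch, f"{lr:.6f}")
# 0 0.001000
# 29 0.001000
# 30 0.000100
# 49 0.000100
```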

step5: Run prediction

! python predict.py --bit 48 --pic_id 1949

W0427 21:43:31.814743 1416 device_context.cc:447] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.0, Runtime API Version: 10.1
W0427 21:43:31.819936 1416 device_context.cc:465] device: 0, cuDNN Version: 7.6.
Loading AlexNet state from path: /home/aistudio/paddle_greedyhash/models/AlexNet_pretrained.pdparams
----- Pretrained: Load model state from output/bit_48.pdparams
----- Predicted Class_ID: 0, Prob: 0.9965014457702637, Real Label_ID: 0
----- Predicted Class_NAME: 飞机 airplane, Real Class_NAME: 飞机 airplane

The prediction is correct: the predicted class matches the true label.

5. Code structure and details

|-- paddle_greedyhash
    |-- output                  # logs and model files
        |-- bit48_alone         # a 48-bit run that happened to reach 0.824; its log and weights are stored here
            |-- bit_48.pdparams # model weights of bit48_alone
            |-- log_48.txt      # training log of bit48_alone
        |-- bit_12.pdparams     # 12-bit model weights
        |-- bit_24.pdparams     # 24-bit model weights
        |-- bit_32.pdparams     # 32-bit model weights
        |-- bit_48.pdparams     # 48-bit model weights
        |-- log_eval.txt        # evaluation log of the trained models (includes bit48_alone)
        |-- log_train.txt       # log of training 12/24/32/48 bits in sequence (excludes bit48_alone)
    |-- models
        |-- __init__.py
        |-- alexnet.py          # AlexNet definition; note that it differs slightly from the AlexNet bundled with paddle
        |-- greedyhash.py       # GreedyHash algorithm definition
    |-- utils
        |-- datasets.py         # dataset, dataloader, transforms
        |-- lr_scheduler.py     # learning-rate schedule definition
        |-- tools.py            # mAP and acc computation; random-seed fixing function
    |-- eval.py                 # single-GPU evaluation code
    |-- predict.py              # prediction demo code
    |-- train.py                # single-GPU training code
    |-- README.md
    |-- pytorch_greedyhash
        |-- datasets.py         # PyTorch dataset, dataloader, transforms
        |-- cal_map.py          # PyTorch mAP computation
        |-- main.py             # PyTorch single-GPU training code
        |-- output              # PyTorch rerun logs

6. Model information

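For other information about the model, see the table below:

| Item | Description |
|---|---|
| Publisher | 文洪涛 (Wen Hongtao) |
| Email | hatimwen@163.com |
| Date | 2022.04 |
| Framework version | Paddle 2.2.2 |
| Application scenario | Image retrieval |
| Supported hardware | GPU, CPU |
| Download link | Pretrained models, extraction code: tl1i |
| Online demo | AI Studio |
| License | Apache 2.0 license |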

7. References and citation

@article{su2018greedy,
  title={Greedy hash: Towards fast optimization for accurate hash coding in cnn},
  author={Su, Shupeng and Zhang, Chao and Han, Kai and Tian, Yonghong},
  journal={Advances in Neural Information Processing Systems},
  volume={31},
  year={2018}
}