本文基于Unet分割网络,利用49例头部磁共振T1、T2数据,通过配准使二者解剖位置一致,转换数据格式并裁剪窗宽窗位,构建数据集。以T1为输入、T2为标签训练Unet进行回归,用SSIM评估,经200轮训练,最佳SSIM达0.571,实现由T1生成虚拟T2图像。

☞☞☞AI 智能聊天, 问答助手, AI 智能搜索, 免费无限量使用 DeepSeek R1 模型☜☜☜

基于分割网络Unet生成虚拟图像

这个想法是基于

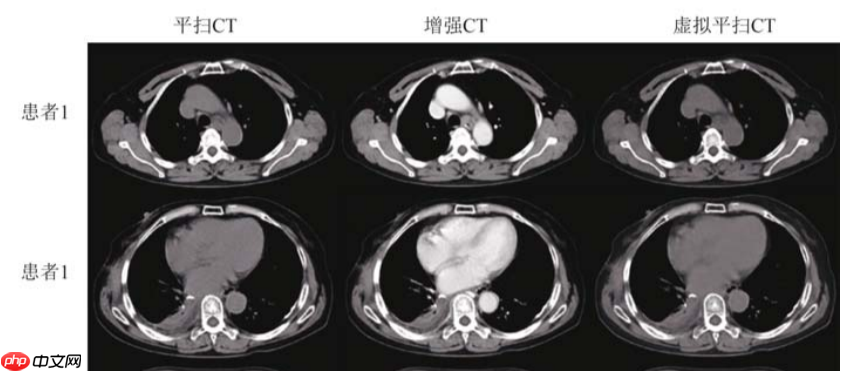

[1]高留刚, 李春迎, 陆正大,等. 基于卷积神经网络生成虚拟平扫CT图像[J]. 中国医学影像技术, 2022, 38(3):5.

【1】文章使用Unet对增强CT数据进行训练,最终预测生成对应的虚拟平扫CT图像,达到只需要对患者扫描一次CT即可,避免患者接受过多的放射辐射。

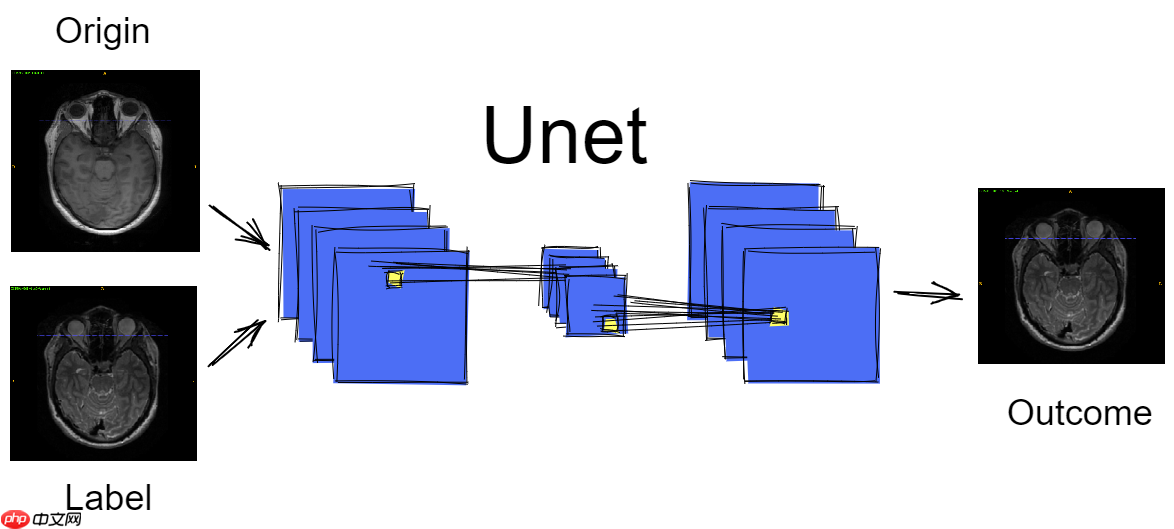

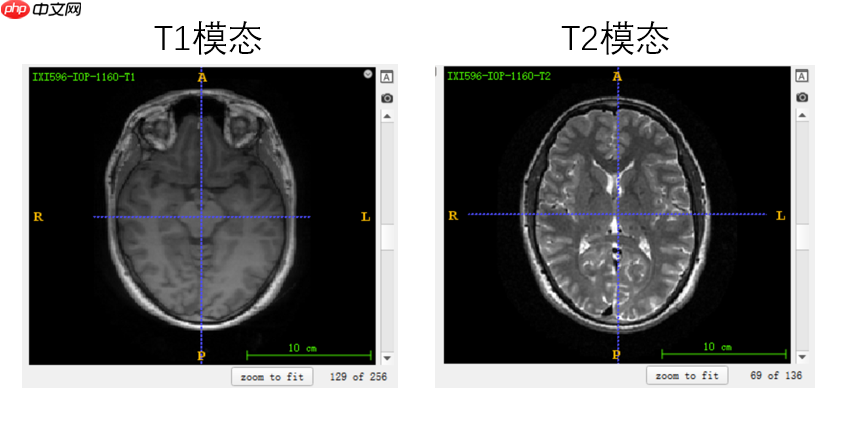

【2】因为本项目没有对应的CT数据,刚好有一个T1、T2磁共振头部公开数据,先磁共振数据进行配准,然后输入T1模态的数据到Unet中然后生成对应的T2模态数据。

1. 数据

数量:49例

模态和部位:磁共振的T1和T2头部数据

#解压数据!unzip -o /home/aistudio/data/data146796/mydata.zip -d /home/aistudio/work

#antspyx 是一个配准工具包,SImpleITK处理医学数据!pip install antspyx SimpleITK

import osimport antsimport SimpleITK as sitkfrom tqdm import tqdmimport numpy as npimport randomimport matplotlib.pyplot as pltimport paddleimport cv2

2. 对数据进行配准

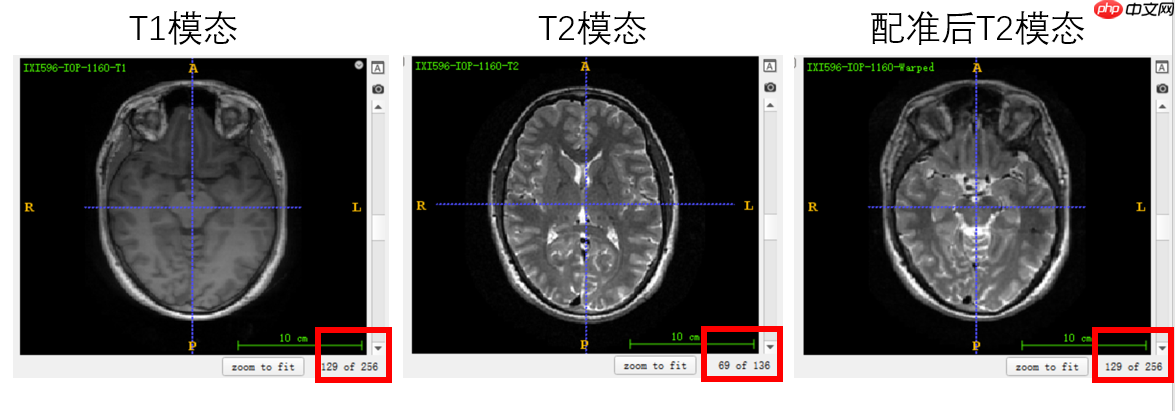

项是利用 Unet对像素数值进行回归预测。所以在进入网路之前,除了像素值的尺寸不同之外,原资料与标记资料的解剖学位置应当是相同的。因此,必须将原始资料与标签资料进行匹配,使二者重新整合。比如下面这张图片,T1有256层,T2有136层,显然是解剖位置不对,然后经过配准后的T2有256层,和T1层的解剖学位置一模一样。对齐后的数据可以作为标记输入到网络中。

网络工作室源码基于热腾CMS(RTCMS)定制,栏目全站自动调用,可设置生成为html静态文件。网站分类适合网络公司和工作室使用。程序中带有演示数据,如果全新安装,可将根目录下的/uploads 文件夹中的演示图片文件删掉。安装方式:上传upload_install中的文件上传到虚拟主机或服务器网站根目录下;访问 http://域名/ 即可安装,安装时可以选取“演示数据&

#因为配准花费的时间太久太久了,本项目的数据已经包含好了配准后的数据。#因此不用运行此代码path = '/home/aistudio/work/mydata/IXI-T1'for f in tqdm(os.listdir(path)):

f_path = os.path.join(path,f)

m_path = os.path.join('/home/aistudio/work/mydata/IXI-T2',f.replace('T1','T2'))

f_img = ants.image_read(f_path)

m_img = ants.image_read(m_path)

mytx = ants.registration(fixed=f_img, moving=m_img, type_of_transform='SyN') # 将形变场作用于moving图像,得到配准后的图像

warped_img = ants.apply_transforms(fixed=f_img, moving=m_img, transformlist=mytx['fwdtransforms'],

interpolator="linear") # 将配准后图像的direction/origin/spacing和原图保持一致

warped_img.set_direction(f_img.direction)

warped_img.set_origin(f_img.origin)

warped_img.set_spacing(f_img.spacing)

img_name = '/home/aistudio/work/mydata/IXI-T2-warped/'+ f.replace('T1','Warped') # ants.image_write(warped_img, img_name)print("End")3. 转换数据形式

原始数据格式是NIFIT,是属于医疗数据格式的一种。把NIFIT数据转换成numpy,并对窗宽窗位进行裁剪到0~255。并生成txt文档,用作构建DataSet使用。

random.seed(2022)

save_path = '/home/aistudio/work/npdata'save_origin_path = "/home/aistudio/work/npdata/origin/"save_label_path = "/home/aistudio/work/npdata/label/"if not os.path.exists(save_path):

os.mkdir(save_path)

os.mkdir(save_origin_path)

os.mkdir(save_label_path)

origin_data = '/home/aistudio/work/mydata/IXI-T1'f_list = os.listdir(origin_data)

random.shuffle(f_list)

split = int(len(f_list)*0.9)

t_f_list = f_list[:split]

v_f_list = f_list[split:]def gen_txt(f_list,name):

txt = open( os.path.join(save_path,name),'w') for f in f_list: if '.ipynb_checkpoints' in f: continue

f_path = os.path.join(origin_data,f)

tag_path = os.path.join(origin_data.replace('T1','T2-warped'),f.replace('T1','Warped'))

f_sitkData = sitk.ReadImage(f_path)

tag_sitkData = sitk.ReadImage(tag_path)

f_npData = sitk.GetArrayFromImage(f_sitkData)

tag_npData = sitk.GetArrayFromImage(tag_sitkData)

f.split('-')[0] for i in range(100,200):

f_slice = np.rot90(f_npData[:,i,:],-1)

tag_slice = np.rot90(tag_npData[:,i,:],-1)

f_slice = (f_slice - 0) / ((835 - 0) / 255)

np.clip(f_slice, 0, 255, out=f_slice)

tag_slice = (tag_slice - 0) / ((626 - 0) / 255)

np.clip(tag_slice, 0, 255, out=tag_slice)

f_save_path = save_origin_path+f.split('-')[0]+'_'+str(i) +'.npy'

tag_save_path = save_label_path+f.split('-')[0]+'_'+str(i) +'.npy'

np.save(f_save_path,f_slice)

np.save(tag_save_path,tag_slice)

txt.write(f_save_path + ' ' + tag_save_path+ '\n')

txt.close()

gen_txt(t_f_list,name='train.txt')

gen_txt(v_f_list,name='val.txt')print('完成')4. 构建Dataset

数据增强只采用 缩放到256x256。

from paddle.io import Dataset, DataLoaderfrom paddle.vision.transforms import Resizeclass MyDataset(Dataset):

def __init__(self, data_dir, txt_path, transform=None):

super(MyDataset, self).__init__()

self.data_list = [] with open(txt_path,encoding='utf-8') as f: for line in f.readlines():

image_path, label_path = line.split(' ')

image_path = image_path.strip()

label_path = label_path.strip()

image_path = os.path.join(data_dir, image_path)

label_path = os.path.join(data_dir,label_path)

self.data_list.append([image_path, label_path])

self.transform = transform def __getitem__(self, index):

image_path, label_path = self.data_list[index]

image = np.load(image_path)

label = np.load(label_path) if self.transform is not None:

image = self.transform(image)

label = self.transform(label)

image = image[np.newaxis,:].astype('float32')

label = label[np.newaxis,:].astype('float32') return image, label def __len__(self):

return len(self.data_list)

BatchSize = 24transform = Resize(size=(256,256))

t_dataset = MyDataset('/home/aistudio','work/npdata/train.txt',transform=transform)

v_dataset = MyDataset('/home/aistudio','work/npdata/val.txt',transform=transform)

train_loader = DataLoader(t_dataset,batch_size=BatchSize)

val_loader = DataLoader(v_dataset,batch_size=BatchSize)5. 评价指标 SSIM

SSIM (Structure Similarity Index Measure) 结构衡量指标

结构相似指标可以衡量图片的失真程度,也可以衡量两张图片的相似程度,SSIM指标感知模型,即更符合人眼的直观感受。SSIM 主要考量图片的三个关键特征:亮度(Luminance), 对比度(Contrast), 结构 (Structure)。SSIM取值约接近1,两张图片的相似度约接近。两张图片一模一样,SSIM=1

参考文章 https://zhuanlan.zhihu.com/p/399215180

def ssim(img1, img2):

C1 = (0.01 * 255)**2

C2 = (0.03 * 255)**2

img1 = img1.astype(np.float64)

img2 = img2.astype(np.float64)

kernel = cv2.getGaussianKernel(11, 1.5)

window = np.outer(kernel, kernel.transpose())

mu1 = cv2.filter2D(img1, -1, window)[5:-5, 5:-5] # valid

mu2 = cv2.filter2D(img2, -1, window)[5:-5, 5:-5]

mu1_sq = mu1**2

mu2_sq = mu2**2

mu1_mu2 = mu1 * mu2

sigma1_sq = cv2.filter2D(img1**2, -1, window)[5:-5, 5:-5] - mu1_sq

sigma2_sq = cv2.filter2D(img2**2, -1, window)[5:-5, 5:-5] - mu2_sq

sigma12 = cv2.filter2D(img1 * img2, -1, window)[5:-5, 5:-5] - mu1_mu2

ssim_map = ((2 * mu1_mu2 + C1) * (2 * sigma12 + C2)) / ((mu1_sq + mu2_sq + C1) *

(sigma1_sq + sigma2_sq + C2)) return ssim_map.mean()def calculate_ssim(img1, img2):

if not img1.shape == img2.shape: raise ValueError('Input images must have the same dimensions.') if img1.ndim == 2: return ssim(img1, img2) elif img1.ndim == 3: if img1.shape[2] == 3:

ssims = [] for i in range(3):

ssims.append(ssim(img1, img2)) return np.array(ssims).mean() elif img1.shape[2] == 1: return ssim(np.squeeze(img1), np.squeeze(img2)) else: raise ValueError('Wrong input image dimensions.')for data in t_dataset:

img1,img2 = data

plt.figure(figsize=(10,6))

plt.subplot(1,2,1)

plt.imshow(np.squeeze(img1),'gray')

plt.title("T1 mode")

plt.subplot(1,2,2)

plt.imshow(np.squeeze(img2),'gray')

plt.title("T2 mode") print(f'SSIM:{calculate_ssim(np.squeeze(img1),np.squeeze(img2))}') breakSSIM:0.4927141870957079

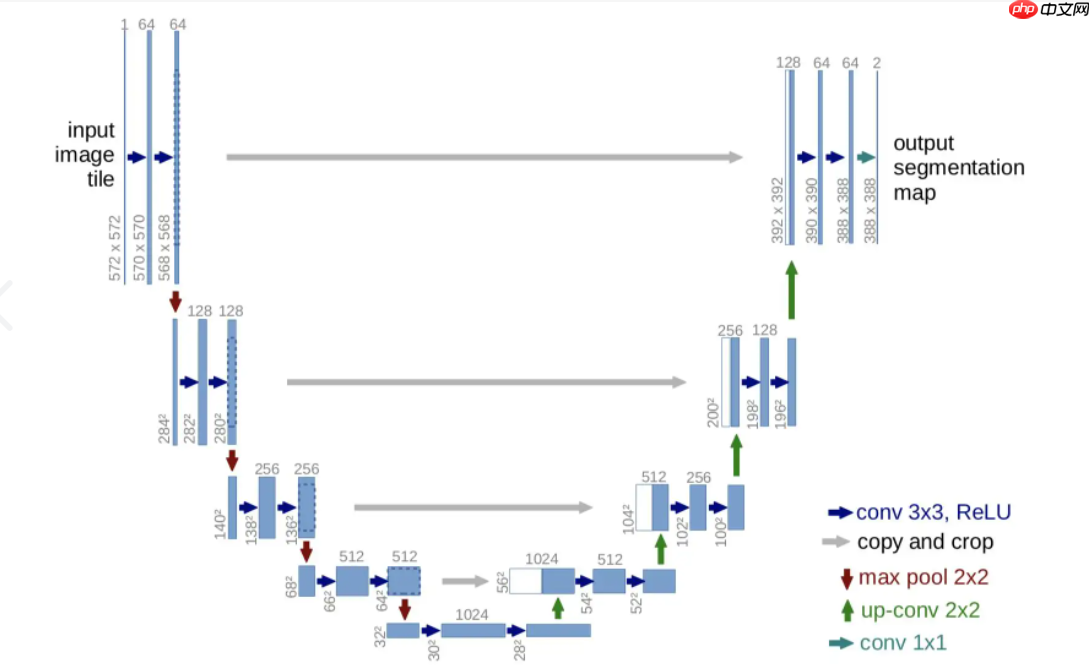

6. 导入Unet模型,开始训练

Unet网络是一种语义分割网络,输入原始图像和对应的标签Mask图像到Unet网络中进行分割,最终输出和标签Mask一致的图像。实际也是对像素值进行分类。把原始图像中的像素值进行分类,到底是属于Mask图像中像素值0,还是像素值1等等。这里是使用Unet网络进行回归,因此网络输出的类别是1。

from model.unet import UNet img = paddle.rand([2, 1, 256, 256]) model = UNet() out = model(paddle.rand([2, 1, 256, 256]))print(out.shape)

[2, 1, 256, 256]

def evaluation(model,val_dataset):

"""验证"""

model.eval()

ssims = [] for data in val_dataset:

image,label = data

image = image[np.newaxis,:].astype('float32')

image = paddle.to_tensor(image)

pre = model(image).numpy()

pre = np.squeeze(pre).astype('int32')

ssims.append(calculate_ssim(np.squeeze(label.astype('int32')),pre)) return np.array(ssims).mean()def train(model,epochs):

if not os.path.exists('/home/aistudio/save_model'):

os.mkdir('/home/aistudio/save_model')

steps = int(len(t_dataset)/BatchSize) * epochs

best_ssim = 0 #记录最优的ssim得分

model.train()

scheduler = paddle.optimizer.lr.PolynomialDecay(learning_rate=0.05, decay_steps=steps, verbose=False)

opt = paddle.optimizer.Adam(learning_rate=scheduler, parameters=model.parameters())

for epoch_id in range(epochs): for batch_id, data in enumerate(train_loader()):

images, labels = data

images = paddle.to_tensor(images)

labels = paddle.to_tensor(labels)

predicts = model(images)

mse_loss = paddle.nn.MSELoss()

l1_loss = paddle.nn.L1Loss()

loss = mse_loss(predicts, labels)+l1_loss(predicts,labels) if batch_id % 200 == 0: print("epoch: {}, batch: {}, loss is: {}".format(epoch_id, batch_id, loss.numpy()))

loss.backward()

opt.step()

opt.clear_grad() #训练过程中,保存模型参数

if epoch_id % 10 == 0:

paddle.save(model.state_dict(), '/home/aistudio/save_model/'+str(epoch_id)+'model.pdparams')

model.eval() #训练过程中,预览效果,输入T1图片,预测t2图片

data = t_dataset[150]

img,label = data input = img[np.newaxis,:].astype('float32') input = paddle.to_tensor(input)

pre = model(input).numpy()

pre = np.squeeze(pre)

plt.figure(figsize=(12,6))

plt.subplot(1,3,1)

plt.imshow(np.squeeze(img),'gray')

plt.title("input: T1 mode")

plt.subplot(1,3,2)

plt.imshow(np.squeeze(label),'gray')

plt.title("target:T2 mode")

plt.subplot(1,3,3)

plt.imshow(pre,'gray')

plt.title("pre: T2 mode")

plt.show()

v_ssim_mean = evaluation(model, v_dataset) if v_ssim_mean > best_ssim:

paddle.save(model.state_dict(), '/home/aistudio/save_model/best_model.pdparams')

best_ssim = v_ssim_mean

model.train() print(f'val_SSIM: {v_ssim_mean},Best_SSIM:{best_ssim}')

#启动训练过程train(model,epochs= 200)epoch: 0, batch: 0, loss is: [7316.7188]

val_SSIM: 0.39978080604275146,Best_SSIM:0.39978080604275146 epoch: 1, batch: 0, loss is: [2628.9192] epoch: 2, batch: 0, loss is: [2724.6853] epoch: 3, batch: 0, loss is: [2688.8508] epoch: 4, batch: 0, loss is: [2686.88] epoch: 5, batch: 0, loss is: [2608.186] epoch: 6, batch: 0, loss is: [2520.5952] epoch: 7, batch: 0, loss is: [2692.3694] epoch: 8, batch: 0, loss is: [2702.1028] epoch: 9, batch: 0, loss is: [2628.6016] epoch: 10, batch: 0, loss is: [2485.4382]

val_SSIM: 0.4802982366278921,Best_SSIM:0.4802982366278921 epoch: 11, batch: 0, loss is: [2507.683] epoch: 12, batch: 0, loss is: [2434.3452] epoch: 13, batch: 0, loss is: [2408.262] epoch: 14, batch: 0, loss is: [2332.7097] epoch: 15, batch: 0, loss is: [2270.0247] epoch: 16, batch: 0, loss is: [2179.795] epoch: 17, batch: 0, loss is: [2138.3933] epoch: 18, batch: 0, loss is: [2093.4106] epoch: 19, batch: 0, loss is: [1887.1506] epoch: 20, batch: 0, loss is: [2024.5569]

val_SSIM: 0.49954443953370115,Best_SSIM:0.49954443953370115 epoch: 21, batch: 0, loss is: [1973.8619] epoch: 22, batch: 0, loss is: [1956.6097] epoch: 23, batch: 0, loss is: [1909.3981] epoch: 24, batch: 0, loss is: [1875.7285] epoch: 25, batch: 0, loss is: [1837.553] epoch: 26, batch: 0, loss is: [1812.5168] epoch: 27, batch: 0, loss is: [1785.5492] epoch: 28, batch: 0, loss is: [1774.7295] epoch: 29, batch: 0, loss is: [1752.758] epoch: 30, batch: 0, loss is: [1756.8203]

val_SSIM: 0.5426780015544808,Best_SSIM:0.5426780015544808 epoch: 31, batch: 0, loss is: [1735.7894] epoch: 32, batch: 0, loss is: [1827.0814] epoch: 33, batch: 0, loss is: [1753.9219] epoch: 34, batch: 0, loss is: [1779.7365] epoch: 35, batch: 0, loss is: [1752.4994] epoch: 36, batch: 0, loss is: [1747.4668] epoch: 37, batch: 0, loss is: [1735.7844] epoch: 38, batch: 0, loss is: [1769.8733] epoch: 39, batch: 0, loss is: [1759.1631] epoch: 40, batch: 0, loss is: [1630.8364]

val_SSIM: 0.4373114577520071,Best_SSIM:0.5426780015544808 epoch: 41, batch: 0, loss is: [1611.3628] epoch: 42, batch: 0, loss is: [1715.6615] epoch: 43, batch: 0, loss is: [1763.4269] epoch: 44, batch: 0, loss is: [1737.9359] epoch: 45, batch: 0, loss is: [1772.5739] epoch: 46, batch: 0, loss is: [1738.1847] epoch: 47, batch: 0, loss is: [1775.006] epoch: 48, batch: 0, loss is: [1911.8531] epoch: 49, batch: 0, loss is: [2033.4453] epoch: 50, batch: 0, loss is: [1987.3456]

val_SSIM: 0.5579299673856584,Best_SSIM:0.5579299673856584 epoch: 51, batch: 0, loss is: [1836.5175] epoch: 52, batch: 0, loss is: [1861.1411] epoch: 53, batch: 0, loss is: [1845.1718] epoch: 54, batch: 0, loss is: [1740.5963] epoch: 55, batch: 0, loss is: [1660.3125] epoch: 56, batch: 0, loss is: [1726.9702] epoch: 57, batch: 0, loss is: [1635.8535] epoch: 58, batch: 0, loss is: [1610.6799] epoch: 59, batch: 0, loss is: [1568.5394] epoch: 60, batch: 0, loss is: [1617.876]

val_SSIM: 0.5634165131362056,Best_SSIM:0.5634165131362056 epoch: 61, batch: 0, loss is: [1561.1827] epoch: 62, batch: 0, loss is: [1597.7261] epoch: 63, batch: 0, loss is: [1525.599] epoch: 64, batch: 0, loss is: [1548.1874] epoch: 65, batch: 0, loss is: [1488.4364] epoch: 66, batch: 0, loss is: [1513.0366] epoch: 67, batch: 0, loss is: [1505.5546] epoch: 68, batch: 0, loss is: [1516.5098] epoch: 69, batch: 0, loss is: [1467.4851] epoch: 70, batch: 0, loss is: [1585.8317]

val_SSIM: 0.5709971328311436,Best_SSIM:0.5709971328311436 epoch: 71, batch: 0, loss is: [1457.6887] epoch: 72, batch: 0, loss is: [1467.6174] epoch: 73, batch: 0, loss is: [1471.8882] epoch: 74, batch: 0, loss is: [1459.0536] epoch: 75, batch: 0, loss is: [1458.9404] epoch: 76, batch: 0, loss is: [1634.24] epoch: 77, batch: 0, loss is: [1650.2292] epoch: 78, batch: 0, loss is: [1437.5302] epoch: 79, batch: 0, loss is: [1421.7593] epoch: 80, batch: 0, loss is: [1413.1609]

val_SSIM: 0.5701560896501561,Best_SSIM:0.5709971328311436 epoch: 81, batch: 0, loss is: [1409.3071] epoch: 82, batch: 0, loss is: [1404.0553] epoch: 83, batch: 0, loss is: [1420.5449] epoch: 84, batch: 0, loss is: [1398.2864] epoch: 85, batch: 0, loss is: [1488.5781] epoch: 86, batch: 0, loss is: [1401.356] epoch: 87, batch: 0, loss is: [1410.7175] epoch: 88, batch: 0, loss is: [1500.6272] epoch: 89, batch: 0, loss is: [1416.3497] epoch: 90, batch: 0, loss is: [1380.4263]

val_SSIM: 0.5465621052241267,Best_SSIM:0.5709971328311436 epoch: 91, batch: 0, loss is: [1372.7605] epoch: 92, batch: 0, loss is: [1367.5848] epoch: 93, batch: 0, loss is: [1376.7378] epoch: 94, batch: 0, loss is: [1370.2089] epoch: 95, batch: 0, loss is: [1365.8433] epoch: 96, batch: 0, loss is: [1369.1512] epoch: 97, batch: 0, loss is: [1369.3212] epoch: 98, batch: 0, loss is: [1404.3115] epoch: 99, batch: 0, loss is: [1354.4286] epoch: 100, batch: 0, loss is: [1382.9532]

val_SSIM: 0.5378666148453135,Best_SSIM:0.5709971328311436 epoch: 101, batch: 0, loss is: [1387.7471] epoch: 102, batch: 0, loss is: [1424.3396] epoch: 103, batch: 0, loss is: [1349.6024] epoch: 104, batch: 0, loss is: [1366.5181] epoch: 105, batch: 0, loss is: [1352.3512] epoch: 106, batch: 0, loss is: [1353.1865] epoch: 107, batch: 0, loss is: [1352.0631] epoch: 108, batch: 0, loss is: [1343.2163] epoch: 109, batch: 0, loss is: [1345.3413] epoch: 110, batch: 0, loss is: [1346.6847]

val_SSIM: 0.5586893791021966,Best_SSIM:0.5709971328311436 epoch: 111, batch: 0, loss is: [1343.9454] epoch: 112, batch: 0, loss is: [1349.0774] epoch: 113, batch: 0, loss is: [1337.5864] epoch: 114, batch: 0, loss is: [1429.8538] epoch: 115, batch: 0, loss is: [1349.8104] epoch: 116, batch: 0, loss is: [1335.1368] epoch: 117, batch: 0, loss is: [1337.9221] epoch: 118, batch: 0, loss is: [1352.1951] epoch: 119, batch: 0, loss is: [1347.4542] epoch: 120, batch: 0, loss is: [1336.236]

val_SSIM: 0.4977443843057142,Best_SSIM:0.5709971328311436 epoch: 121, batch: 0, loss is: [1341.1677] epoch: 122, batch: 0, loss is: [1325.7648] epoch: 123, batch: 0, loss is: [1322.5] epoch: 124124, batch: 0, loss is: [1322.8683] epoch: 125, batch: 0, loss is: [1319.6675] epoch: 126, batch: 0, loss is: [1319.3303] epoch: 127, batch: 0, loss is: [1320.0753] epoch: 128, batch: 0, loss is: [1316.222] epoch: 129, batch: 0, loss is: [1336.7915] epoch: 130, batch: 0, loss is: [1321.2736]

val_SSIM: 0.47030362428033146,Best_SSIM:0.5709971328311436 epoch: 131, batch: 0, loss is: [1339.2891] epoch: 132, batch: 0, loss is: [1318.2183] epoch: 133, batch: 0, loss is: [1325.5737] epoch: 134, batch: 0, loss is: [1308.886] epoch: 135, batch: 0, loss is: [1298.8752] epoch: 136, batch: 0, loss is: [1302.0266] epoch: 137, batch: 0, loss is: [1279.0824] epoch: 138, batch: 0, loss is: [1313.5026] epoch: 139, batch: 0, loss is: [1317.8335] epoch: 140, batch: 0, loss is: [1325.1211]

val_SSIM: 0.5129117285623543,Best_SSIM:0.5709971328311436 epoch: 141, batch: 0, loss is: [1307.1959] epoch: 142, batch: 0, loss is: [1312.2686] epoch: 143, batch: 0, loss is: [1320.1404] epoch: 144, batch: 0, loss is: [1326.3783] epoch: 145, batch: 0, loss is: [1308.4757] epoch: 146, batch: 0, loss is: [1318.8088] epoch: 147, batch: 0, loss is: [1297.8518] epoch: 148, batch: 0, loss is: [1296.301] epoch: 149, batch: 0, loss is: [1301.2318] epoch: 150, batch: 0, loss is: [1290.0143]

val_SSIM: 0.4650640222375466,Best_SSIM:0.5709971328311436 epoch: 151, batch: 0, loss is: [1320.3531] epoch: 152, batch: 0, loss is: [1311.9688] epoch: 153, batch: 0, loss is: [1302.6729] epoch: 154, batch: 0, loss is: [1302.4635] epoch: 155, batch: 0, loss is: [1286.9762] epoch: 156, batch: 0, loss is: [1284.86] epoch: 157, batch: 0, loss is: [1290.937] epoch: 158, batch: 0, loss is: [1274.3252] epoch: 159, batch: 0, loss is: [1280.6571] epoch: 160, batch: 0, loss is: [1286.4685]

val_SSIM: 0.4561319579342975,Best_SSIM:0.5709971328311436 epoch: 161, batch: 0, loss is: [1270.9604] epoch: 162, batch: 0, loss is: [1287.5771] epoch: 163, batch: 0, loss is: [1269.8563] epoch: 164, batch: 0, loss is: [1286.9226] epoch: 165, batch: 0, loss is: [1272.0933] epoch: 166, batch: 0, loss is: [1274.8392] epoch: 167, batch: 0, loss is: [1272.4178] epoch: 168, batch: 0, loss is: [1266.7671] epoch: 169, batch: 0, loss is: [1282.4298] epoch: 170, batch: 0, loss is: [1241247.015]

val_SSIM: 0.49646587443554935,Best_SSIM:0.5709971328311436 epoch: 171, batch: 0, loss is: [1250.8077] epoch: 172, batch: 0, loss is: [1287.3835] epoch: 173, batch: 0, loss is: [1241248.3782] epoch: 174, batch: 0, loss is: [1241249.7322] epoch: 175, batch: 0, loss is: [1273.4828] epoch: 176, batch: 0, loss is: [1253.0632] epoch: 177, batch: 0, loss is: [1281.6831] epoch: 178, batch: 0, loss is: [1280.6669] epoch: 179, batch: 0, loss is: [1241245.0092] epoch: 180, batch: 0, loss is: [1257.4398]

val_SSIM: 0.46512517332255776,Best_SSIM:0.5709971328311436 epoch: 181, batch: 0, loss is: [1241242.4584] epoch: 182, batch: 0, loss is: [1254.164] epoch: 183, batch: 0, loss is: [1238.1926] epoch: 184, batch: 0, loss is: [1241243.1198] epoch: 185, batch: 0, loss is: [1241248.0677] epoch: 186, batch: 0, loss is: [1241240.6064] epoch: 187, batch: 0, loss is: [1252.3843] epoch: 188, batch: 0, loss is: [1230.179] epoch: 189, batch: 0, loss is: [1237.1627] epoch: 190, batch: 0, loss is: [1232.43]

val_SSIM: 0.4939679476601408,Best_SSIM:0.5709971328311436 epoch: 191, batch: 0, loss is: [1241245.5471] epoch: 192, batch: 0, loss is: [1255.1567] epoch: 193, batch: 0, loss is: [1250.8704] epoch: 194, batch: 0, loss is: [1223.4425] epoch: 195, batch: 0, loss is: [1224.8658] epoch: 196, batch: 0, loss is: [1230.7142] epoch: 197, batch: 0, loss is: [1229.7573] epoch: 198, batch: 0, loss is: [1235.9465] epoch: 199, batch: 0, loss is: [1225.0635]